Welcome back to the Visualization for Machine Learning Lab!

Week 12: Visualization for NLP

Miscellaneous

- Proposal grades and feedback are on Brightspace

- Check PDFs for comments

- Longer comments may need to be viewed in a PDF reader (like Acrobat) rather than the browser

- Presentations 5/2

- Written report due sometime during finals week (will get back to you with a date on Monday)

- Homework 4 (OPTIONAL - extra credit) on Brightspace

- Amount of extra credit TBD

- Due April 19th

NLP + Vis Applications

- These slides are adapted from a half-day tutorial at the 2023 EMNLP Conference by Shafiq Joty (Salesforce AI Research and Nanyang Technological University), Enamul Hoque (York University), and Jesse Vig (Salesforce AI Research)

- You can find their full tutorial slides here

What Can NLP + Vis Be Used For?

- Visual text analytics

- Natural language interfaces for visualizations

- Text generation for visualizations

- Automatic visual story generation

- Visualization retrieval and recommendation

- Etc.

What Can NLP + Vis Be Used For?

- We will first divide these applications into two categories:

- Natural language as input

- Natural language as output

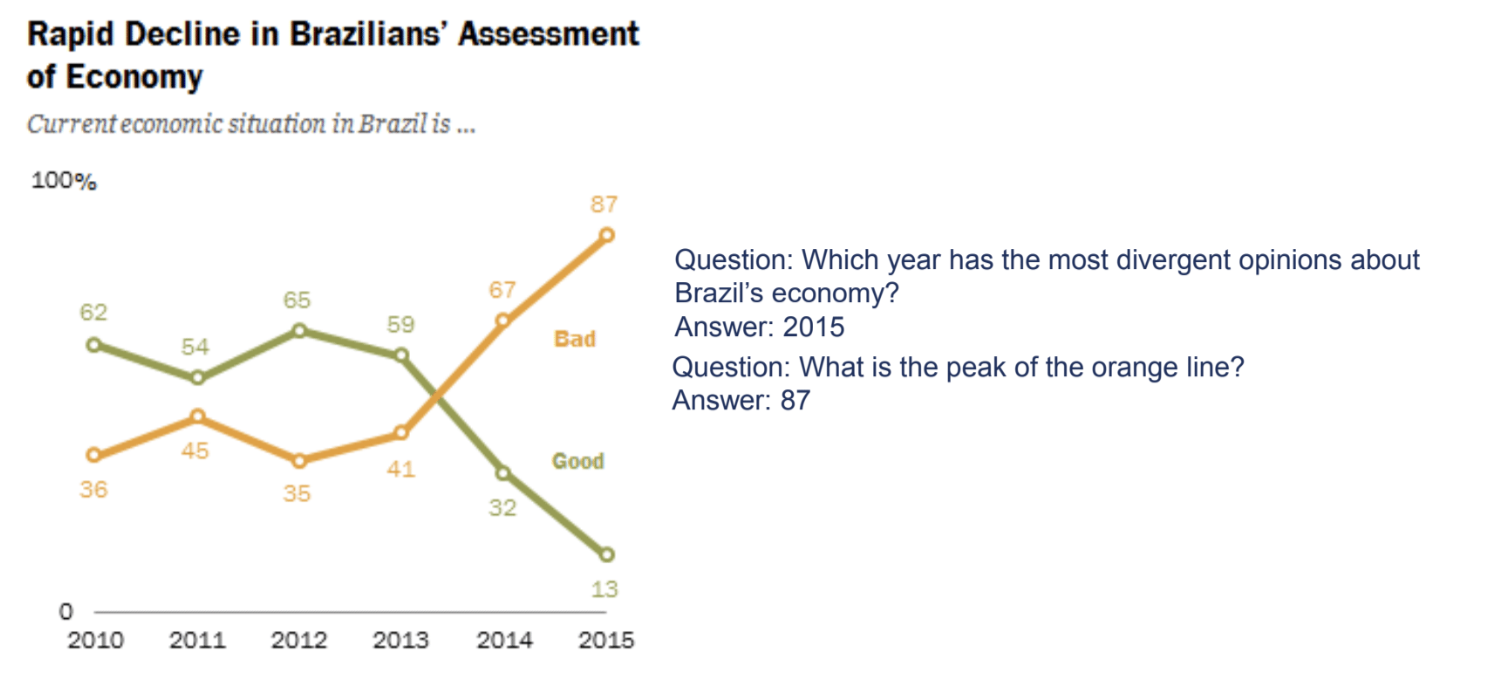

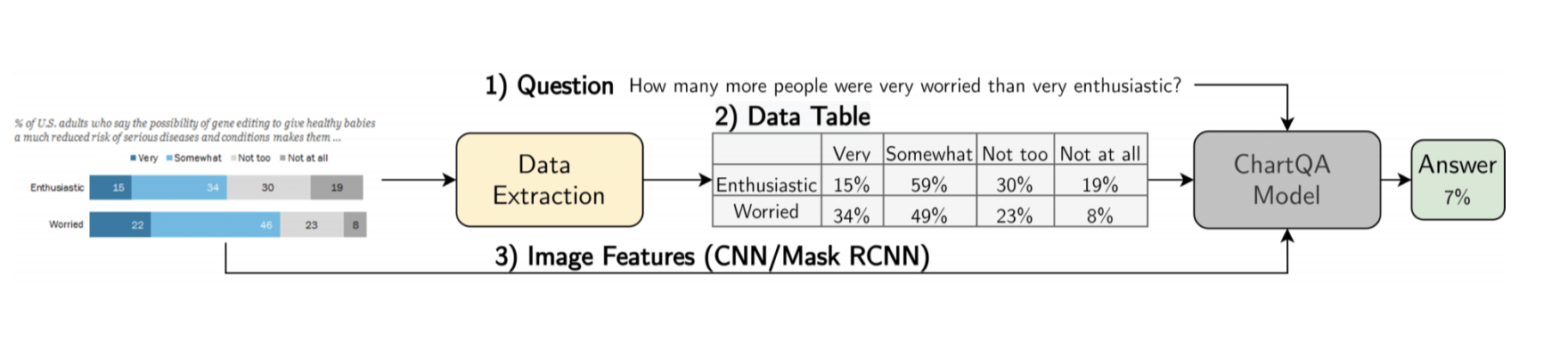

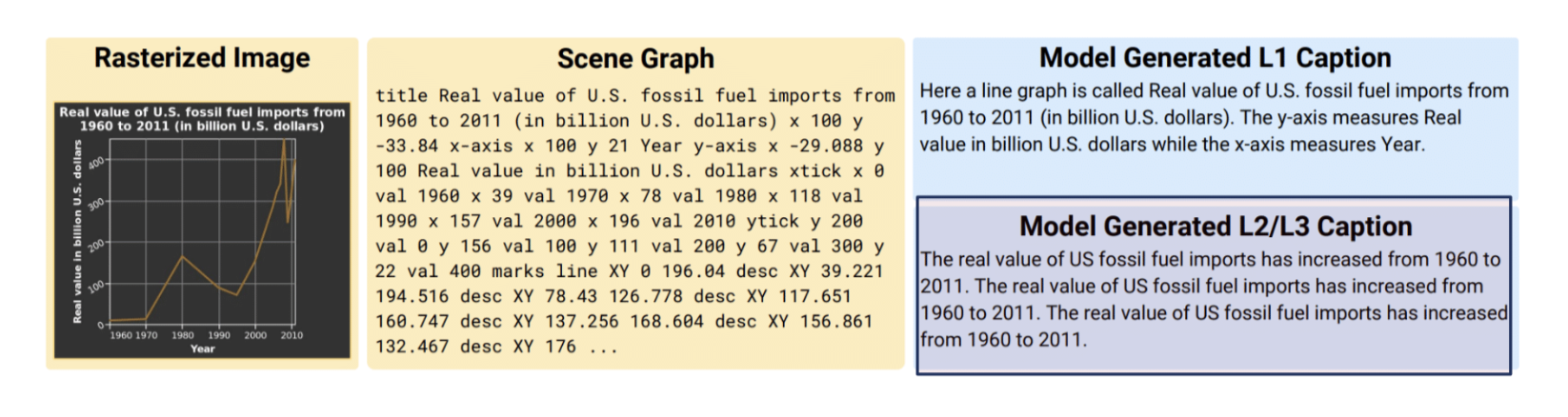

NL as Input: ChartQA

Ref: Masry et al. (2022)

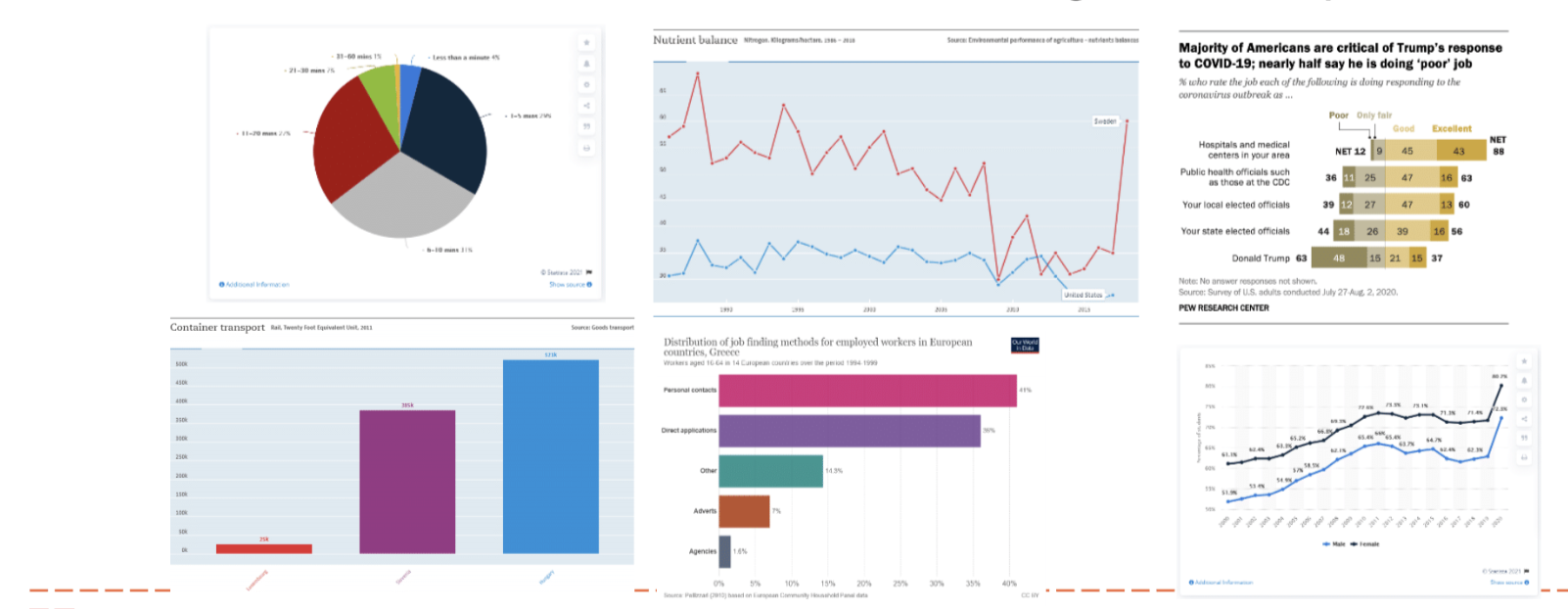

ChartQA Dataset

- Real-world charts crawled from various online sources

- 9.6K human-authored and 23.1K machine-generated questions

Ref: Masry et al. (2022)

ChartQA Approach

Ref: Masry et al. (2022)

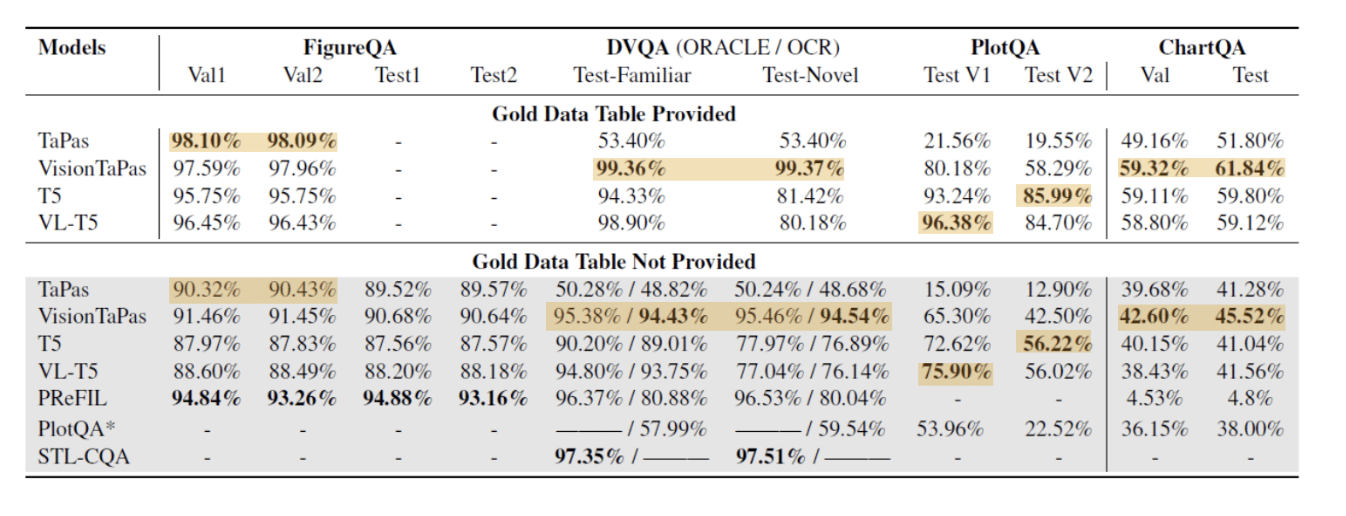

ChartQA Evaluation

- VisionTaPas achieves SOTA performance.

- Accuracies are lower on the authors’ dataset than on previous datasets (mainly due to the human-written visual and logical reasoning questions)

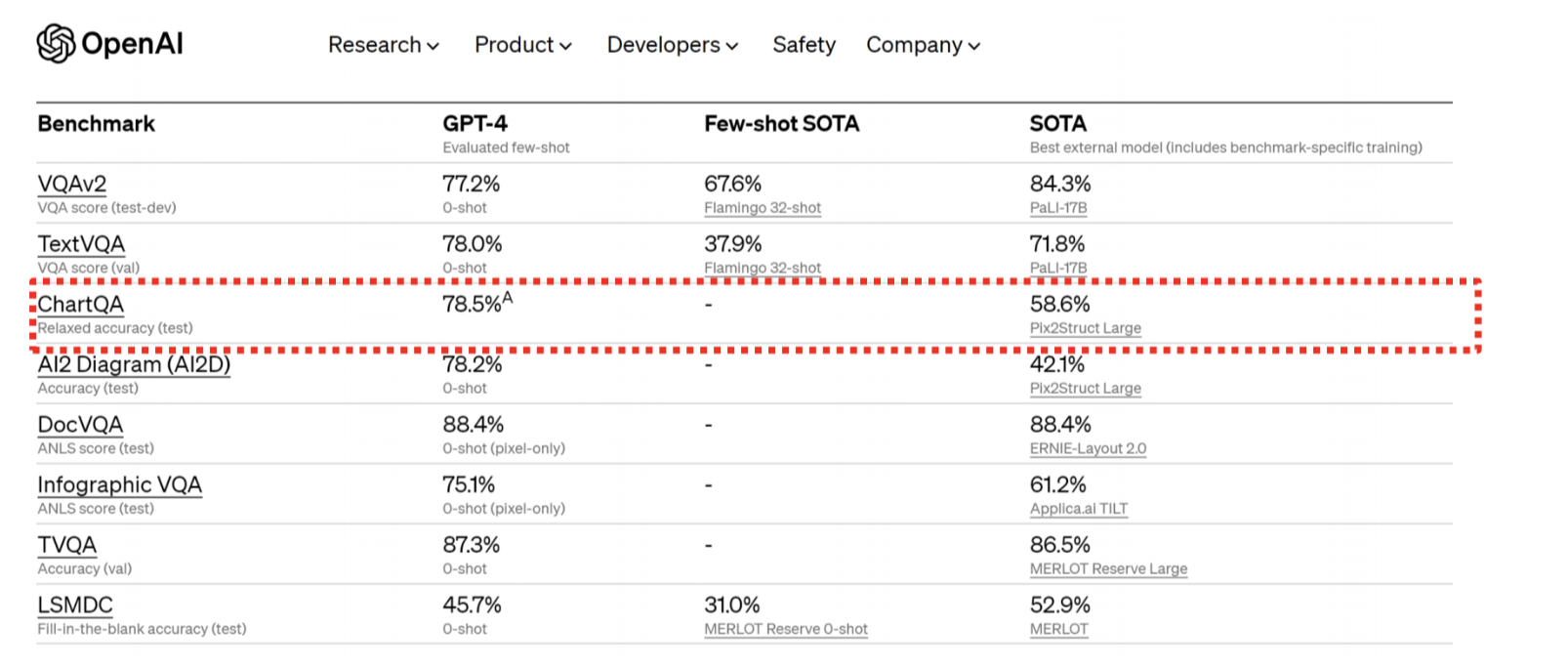
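
The slide reports accuracies but does not say how an answer is scored. As a minimal sketch, assume a "relaxed" match: numeric answers count as correct within a small relative tolerance, and everything else needs an exact (case-insensitive) match. The 5% default and the names `is_correct` and `relaxed_accuracy` are assumptions made for illustration, not the authors' evaluation script.

```python
from typing import Optional, Sequence


def _to_float(text: str) -> Optional[float]:
    """Parse a numeric answer such as '1,200' or '45%'; return None if not numeric."""
    try:
        return float(text.strip().rstrip("%").replace(",", ""))
    except ValueError:
        return None


def is_correct(prediction: str, gold: str, tolerance: float = 0.05) -> bool:
    """Assumed scoring rule: relative tolerance for numbers, exact match for text."""
    pred_num, gold_num = _to_float(prediction), _to_float(gold)
    if pred_num is not None and gold_num is not None:
        if gold_num == 0:
            return pred_num == 0
        return abs(pred_num - gold_num) / abs(gold_num) <= tolerance
    return prediction.strip().lower() == gold.strip().lower()


def relaxed_accuracy(predictions: Sequence[str], golds: Sequence[str],
                     tolerance: float = 0.05) -> float:
    """Fraction of predictions judged correct under the rule above."""
    pairs = list(zip(predictions, golds))
    return sum(is_correct(p, g, tolerance) for p, g in pairs) / len(pairs)


score = relaxed_accuracy(["10.4", "11", "Canada"], ["10", "10", "canada"])
print(f"relaxed accuracy: {score:.2f}")
```

A tolerance like this matters for chart questions because values read off an axis are rarely exact, while label answers (a country, a category) either match or do not.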

OpenAI’s Study of GPT-4 on ChartQA Benchmark

Ref: https://openai.com/research/gpt-4

NL as Input: Multimodal Inputs for Visualizations

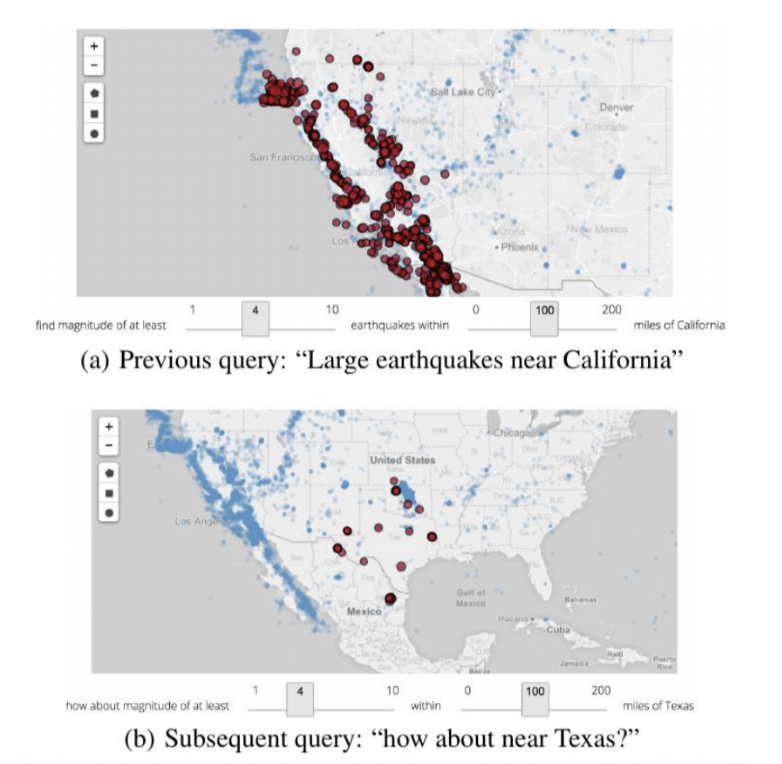

- Ambiguity Widgets: Eviza (Setlur et al., 2016)

- Allows users to rectify queries

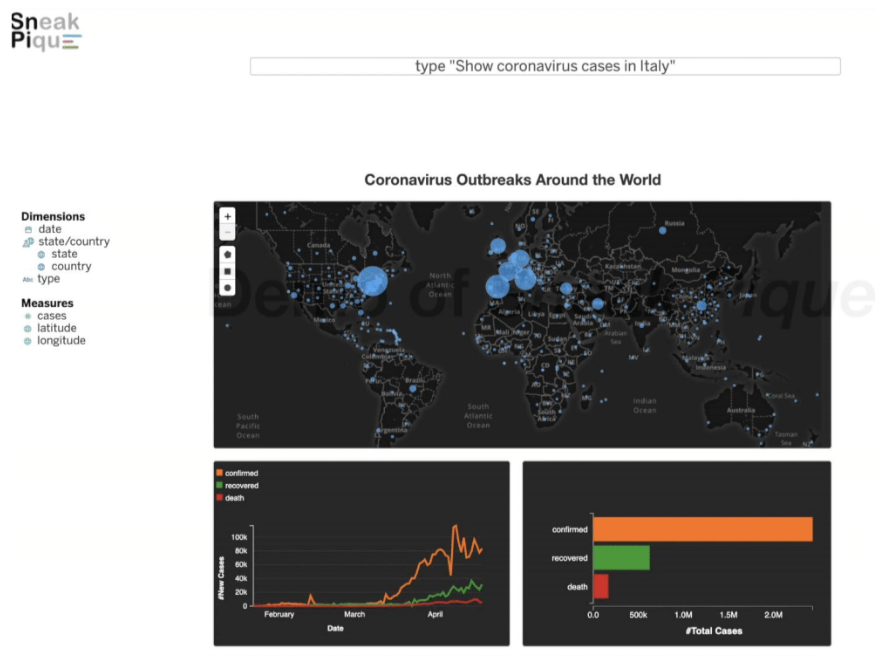

NL as Input: Multimodal Inputs for Visualizations

- Query completion through text and interactive vis: Sneak Pique (Setlur et al., 2020)

Ref: Setlur et al. (2020)

NL as Input: Multimodal Inputs for Visualizations

Ref: Setlur et al. (2020)

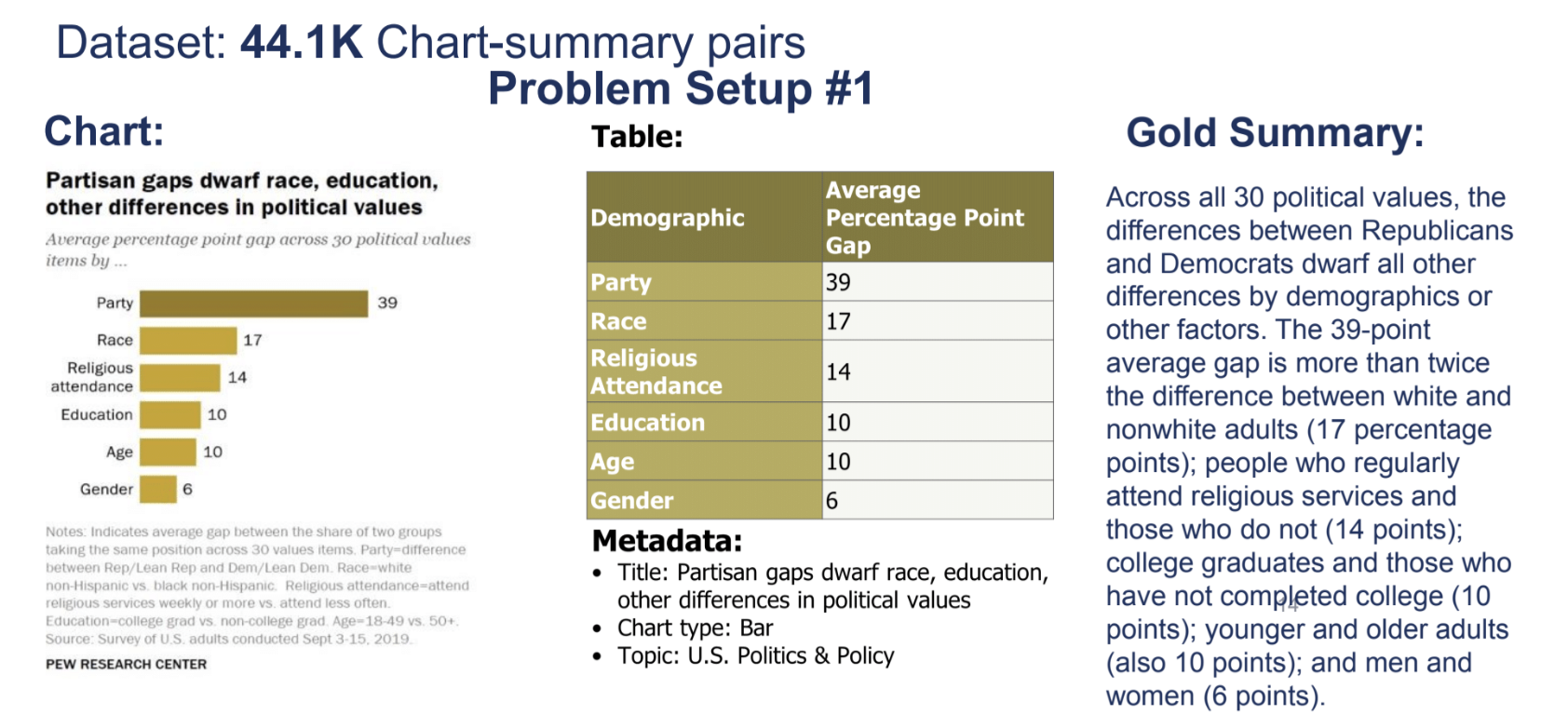

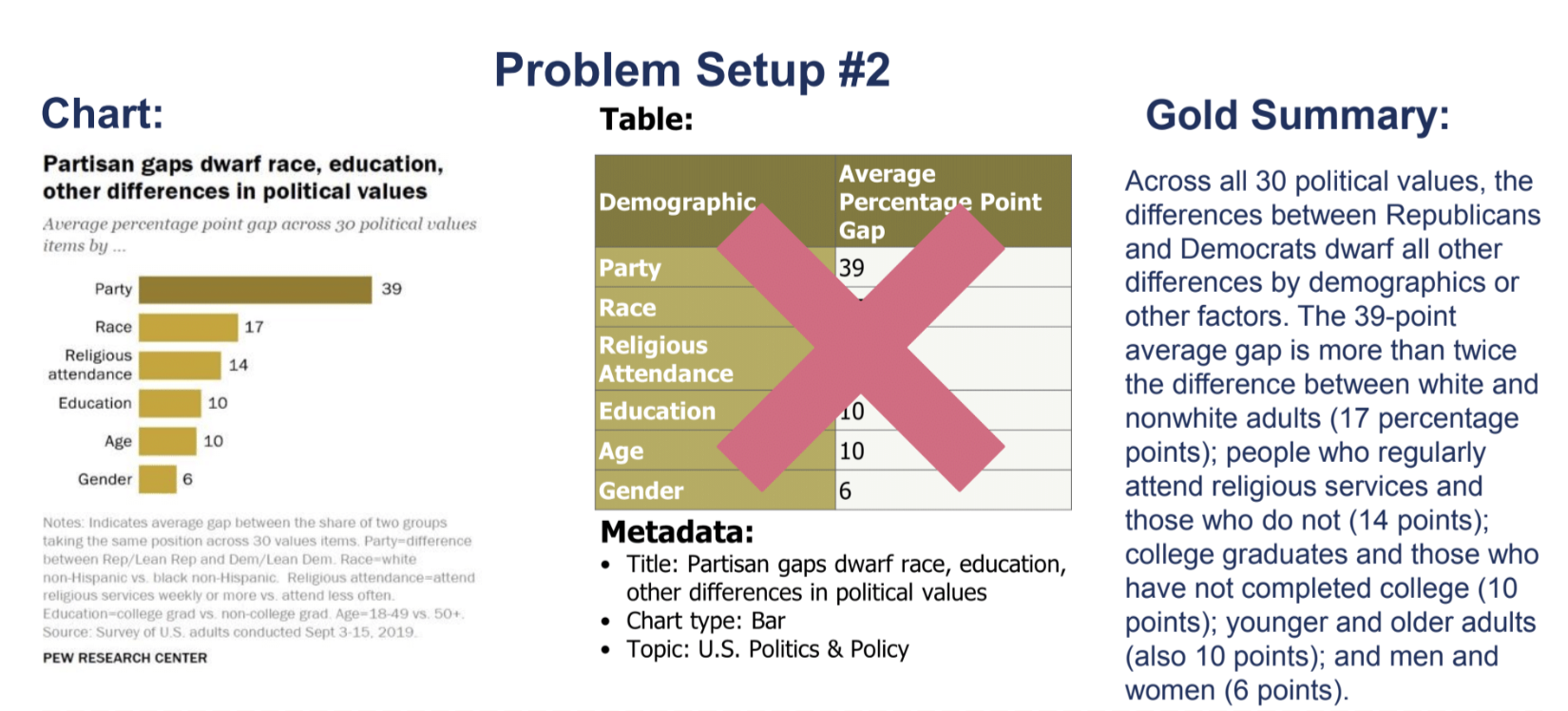

NL as Output: Chart-to-Text

Ref: Kantharaj, Leong, et al. (2022)

NL as Output: Chart-to-Text

Ref: Kantharaj, Leong, et al. (2022)

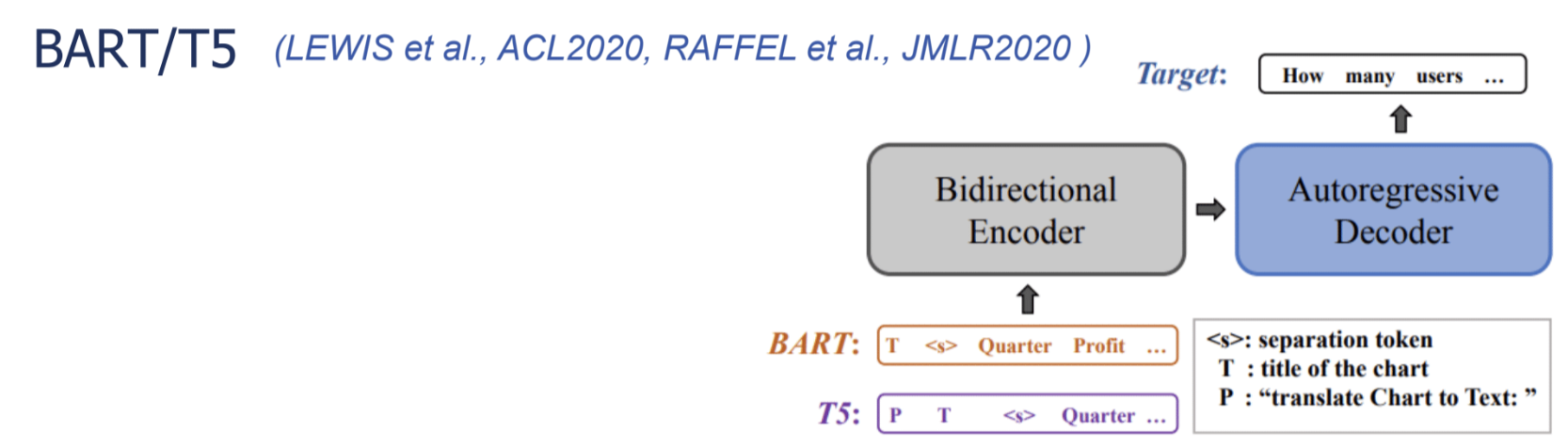

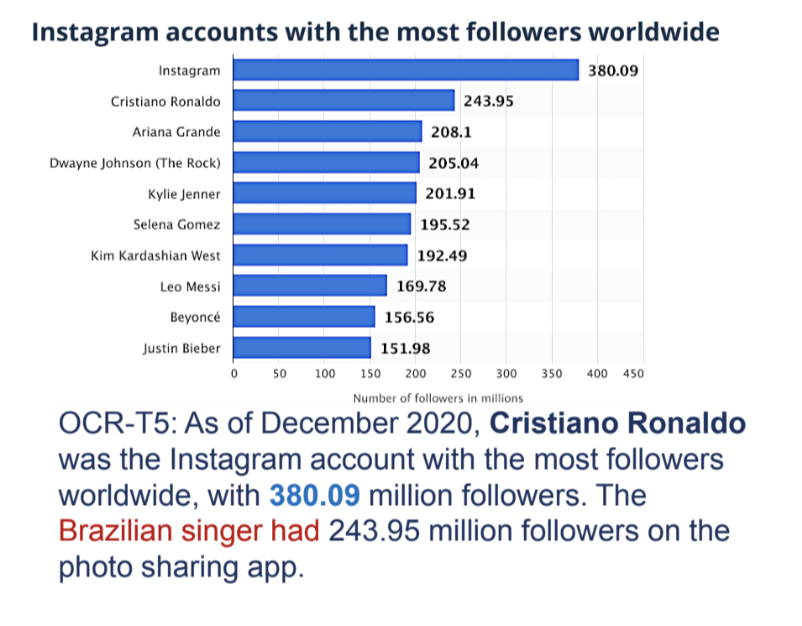

Chart-to-Text Example Models

- Fully fine-tune BART/T5 on the authors’ datasets

- Setup 1: Linearize the data table and use it as the input

- Setup 2: Use OCR text extracted from the chart image as the input

- Prefix to T5: “translate Chart to Text:”

Ref: Kantharaj, Leong, et al. (2022)

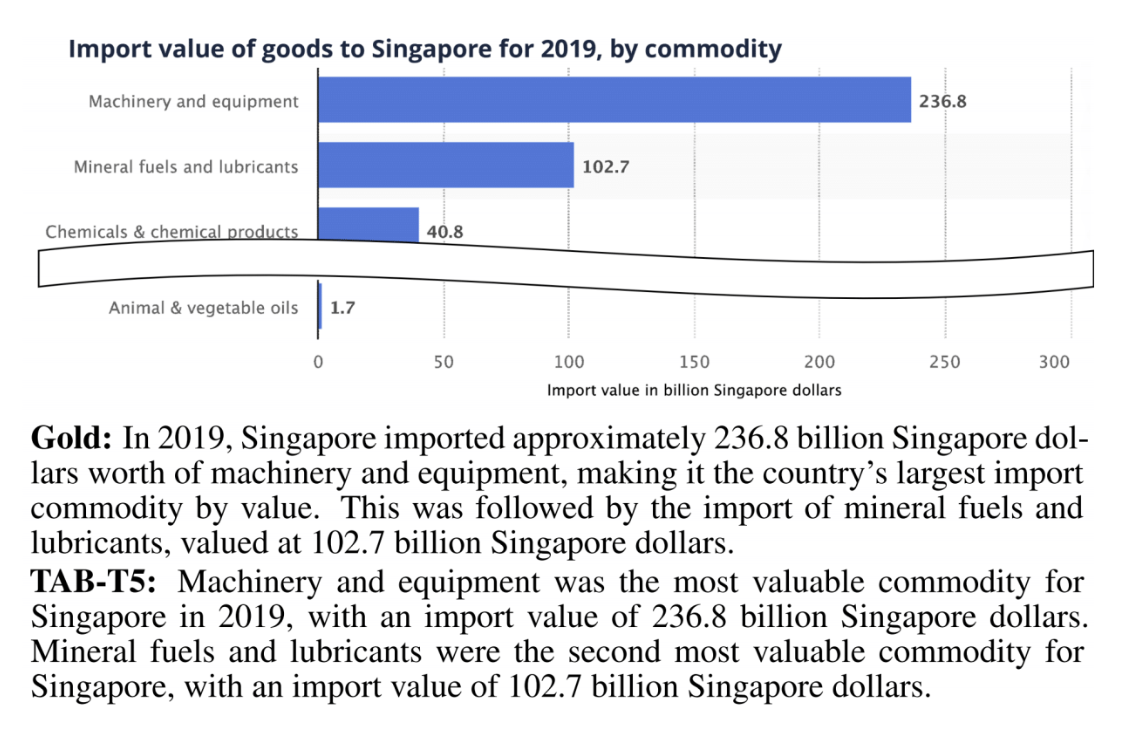
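
Setup 1 above can be sketched as follows. The snippet flattens a small data table into a single string and prepends the T5 prefix quoted on the slide. The separator format (`|` and `&`), the `title` field, and the function names are hypothetical choices for illustration; the authors' exact linearization may differ.

```python
from typing import Sequence

# Task prefix quoted on the slide; T5 uses such prefixes to select a task.
T5_PREFIX = "translate Chart to Text: "


def linearize_table(title: str, header: Sequence[str],
                    rows: Sequence[Sequence[object]]) -> str:
    """Flatten a chart's data table into one string, row by row.

    Each cell becomes 'column name | value'; cells are joined with ' & '.
    This separator scheme is an assumption, not the authors' format.
    """
    cells = [f"title | {title}"]
    for row in rows:
        for name, value in zip(header, row):
            cells.append(f"{name} | {value}")
    return " & ".join(cells)


def build_t5_input(title: str, header: Sequence[str],
                   rows: Sequence[Sequence[object]]) -> str:
    """Prepend the task prefix to the linearized table."""
    return T5_PREFIX + linearize_table(title, header, rows)


example = build_t5_input(
    "Sales by year",
    ["Year", "Sales"],
    [[2020, 10], [2021, 15]],
)
print(example)
```

The resulting string would then be tokenized and passed to the fine-tuned model. Setup 2 would follow the same pattern, with OCR text from the chart image in place of the linearized table.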

Chart-to-Text Sample Output

Ref: Kantharaj, Leong, et al. (2022)

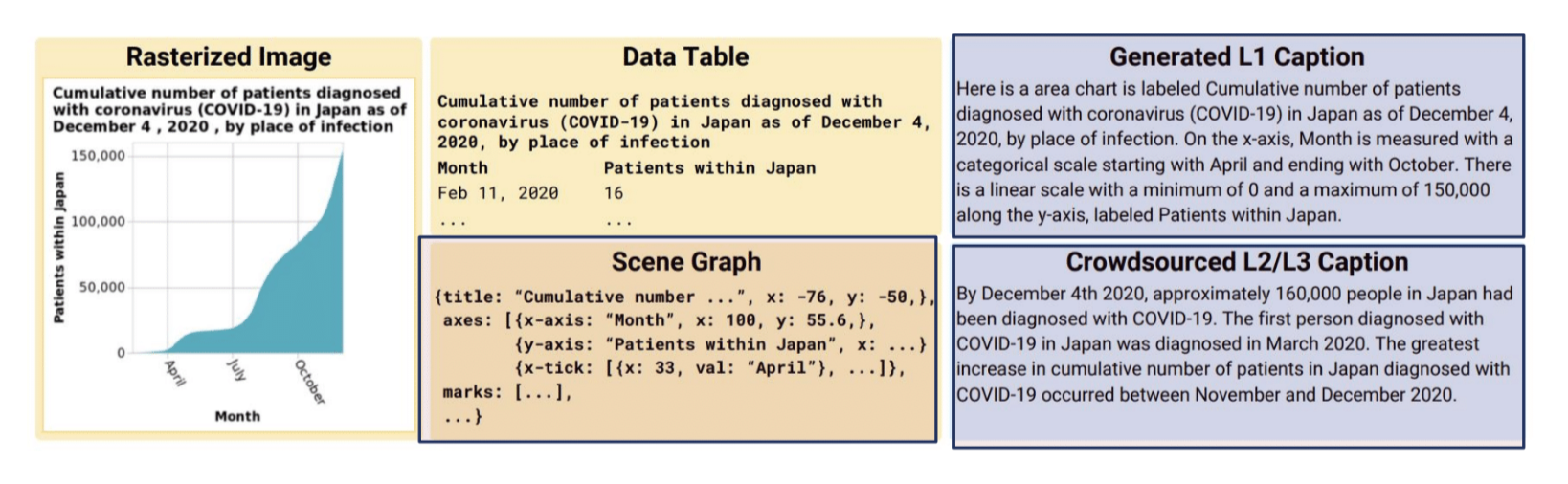

VisText

- 12.4K Charts with generated + crowd-sourced captions

- Scene graphs with a hierarchical representation of a chart’s visual elements

Ref: Tang, Boggust, and Satyanarayan (2023)
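
To make the idea of a hierarchical scene graph concrete, here is a minimal sketch: a chart is stored as a tree of visual elements and flattened depth-first into indented text that a captioning model could consume. The `SceneNode` class, the role names, and the indentation format are hypothetical and do not reproduce the VisText data format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneNode:
    """One visual element (chart, axis, tick, mark) and its child elements."""
    role: str
    label: str = ""
    children: List["SceneNode"] = field(default_factory=list)


def linearize(node: SceneNode, depth: int = 0) -> List[str]:
    """Depth-first traversal; indentation encodes the hierarchy level."""
    text = f"{node.role}: {node.label}" if node.label else node.role
    lines = ["  " * depth + text]
    for child in node.children:
        lines.extend(linearize(child, depth + 1))
    return lines


# A toy bar chart with two bars (made-up values for illustration).
chart = SceneNode("chart", "Sales by year", [
    SceneNode("x-axis", "Year", [SceneNode("tick", "2020"), SceneNode("tick", "2021")]),
    SceneNode("y-axis", "Sales"),
    SceneNode("marks", "", [SceneNode("bar", "2020 = 10"), SceneNode("bar", "2021 = 15")]),
])

scene_text = linearize(chart)
print("\n".join(scene_text))
```

Unlike a flat data table, this representation keeps axes, ticks, and marks as separate nested elements, so a model sees how the chart is drawn as well as what values it holds.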

VisText Sample Output

- Correctly identifies the upward trend, but states the claim twice

Ref: Tang, Boggust, and Satyanarayan (2023)

NL as Output: Open-Ended Question Answering with Charts

Ref: Kantharaj, Do, et al. (2022)

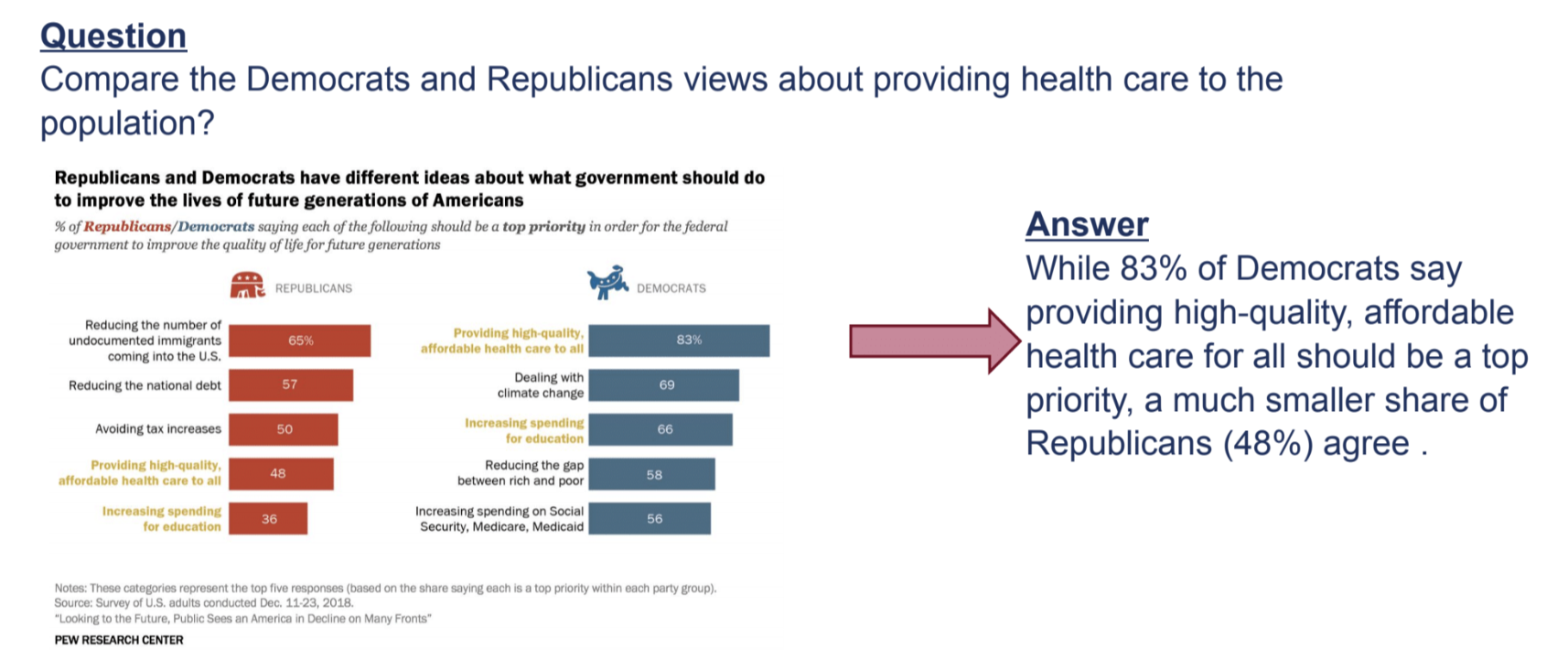

Combining Language and Visualizations as Output

- Roles of natural language

- Generating an explanatory answer

- Explaining the answer

Combining Language and Visualizations as Output

- An example of combining text and vis as a multimodal output

Ref: Shi et al. (2020)

Combining Language and Visualizations as Output

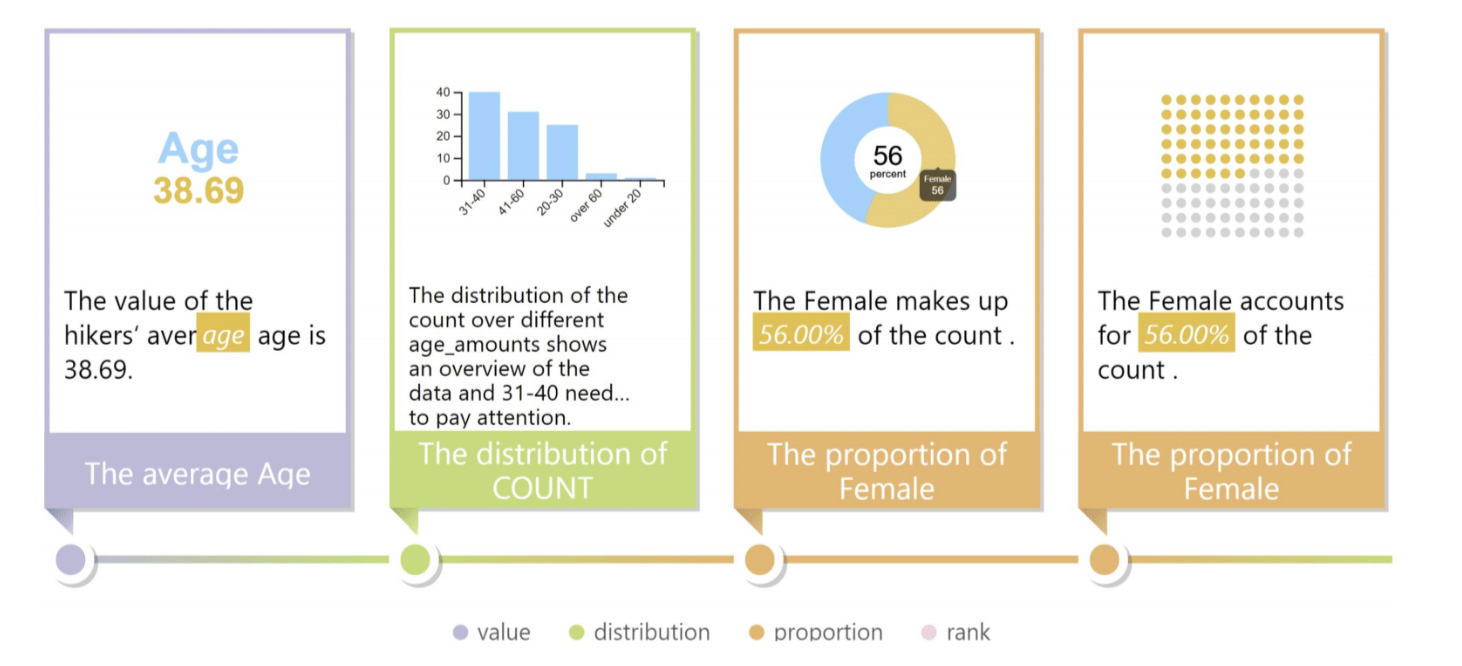

- DataShot (Wang et al., 2020)

Ref: Wang et al. (2020)

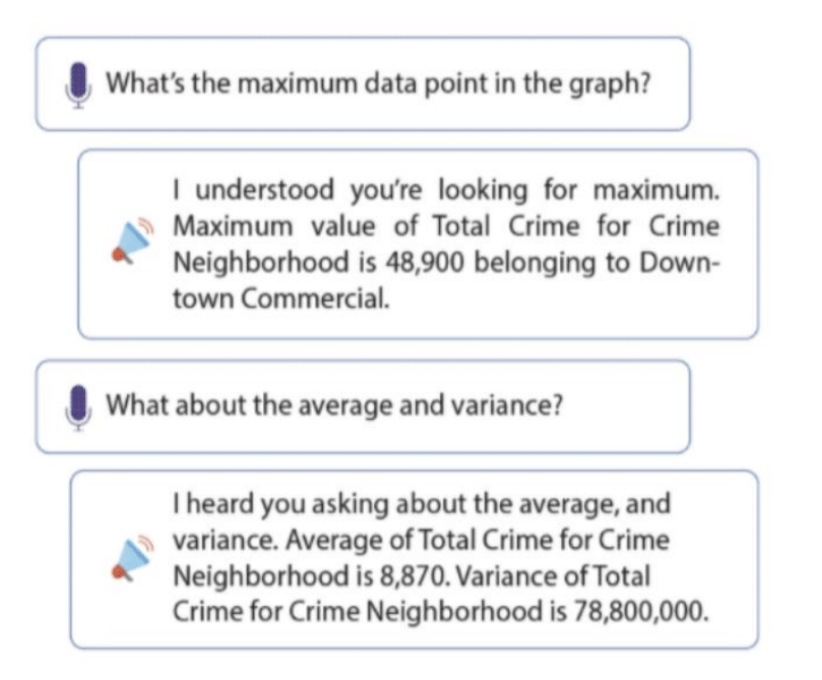

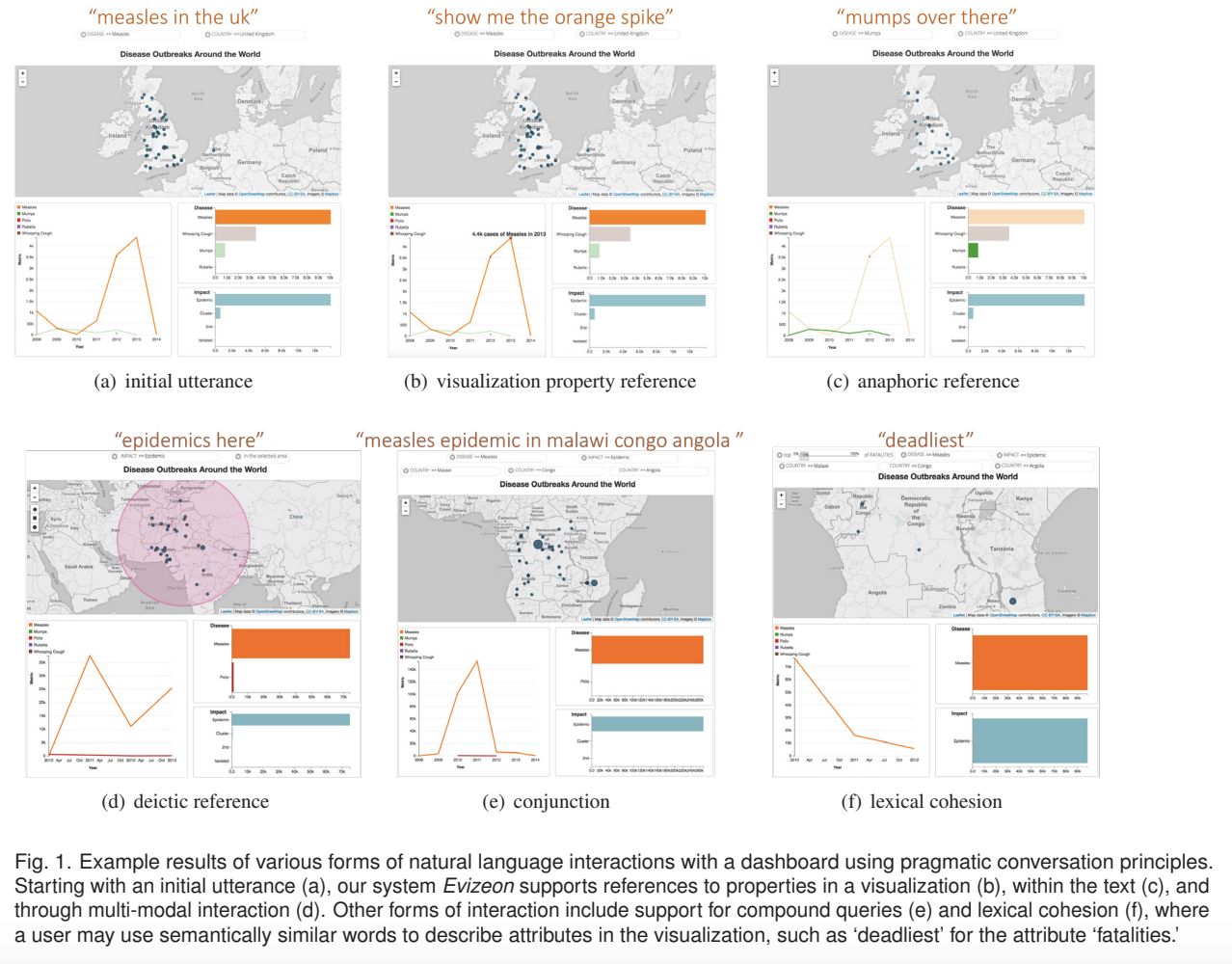

Conversational QA With Visualization

- Evizeon (Hoque et al., TVCG 2017)

Open Challenges & Ongoing Research

- Design of natural language interfaces

- Must consider richness and ambiguities of natural language

- Complex reasoning required to predict the answer

- Computer vision challenges for automatic understanding of image charts

- Inherently interdisciplinary (HCI, ML, NLP, InfoVis, Computer Vision)

Open Challenges & Ongoing Research

- Dataset creation

- Need for large-scale, real-world benchmark datasets

- Most existing datasets lack realism

- For many problem setups, there is no benchmark

Open Challenges & Ongoing Research

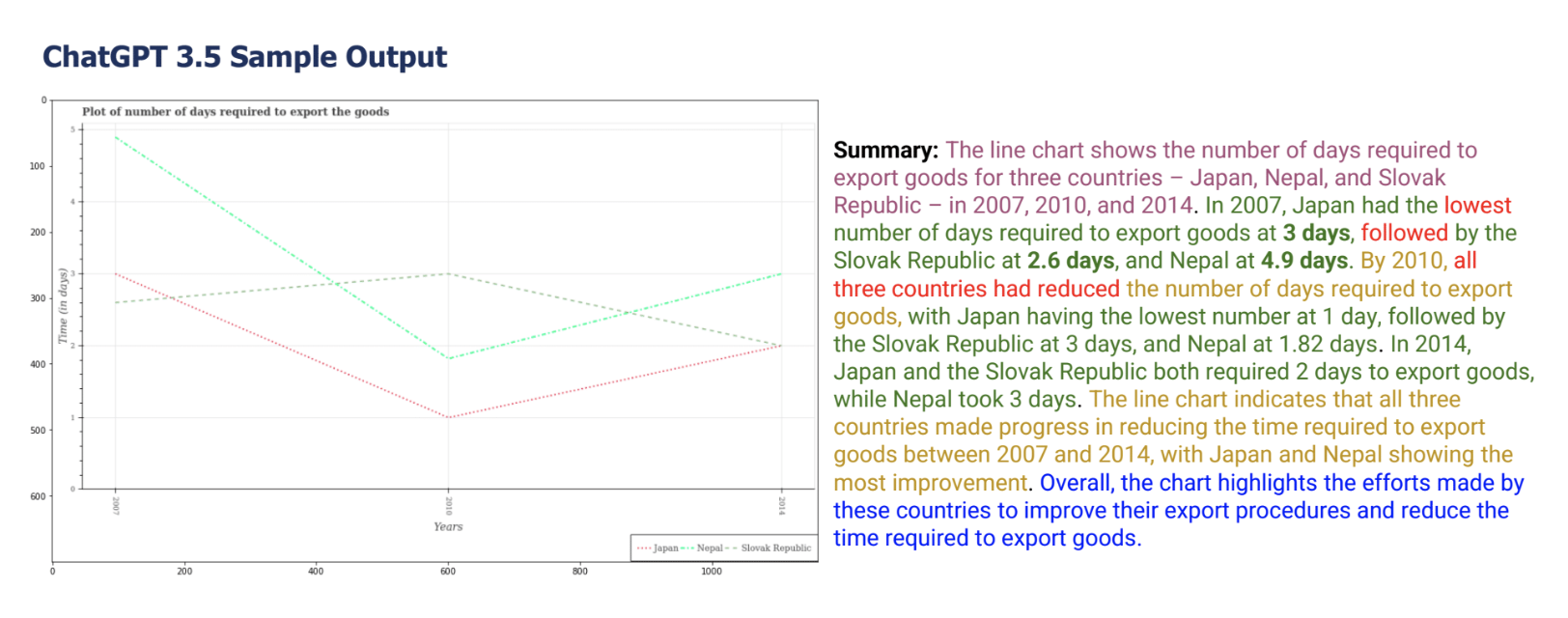

- Challenges with natural language generation

- Hallucinations

- Factual errors

- Perceptual and reasoning aspects

- Computer vision challenges

Open Challenges & Ongoing Research

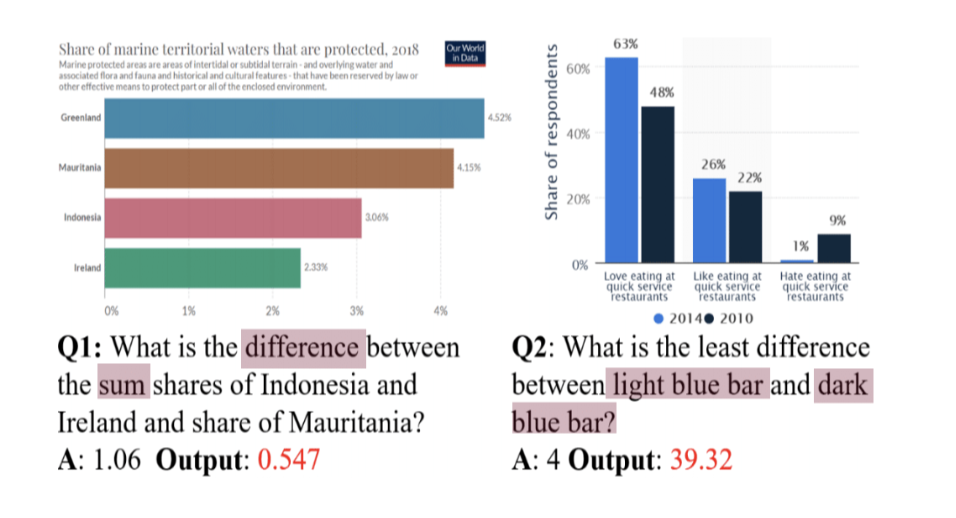

Open Challenges & Ongoing Research

- Improving logical and visual reasoning

Ref: Masry et al. (2022)

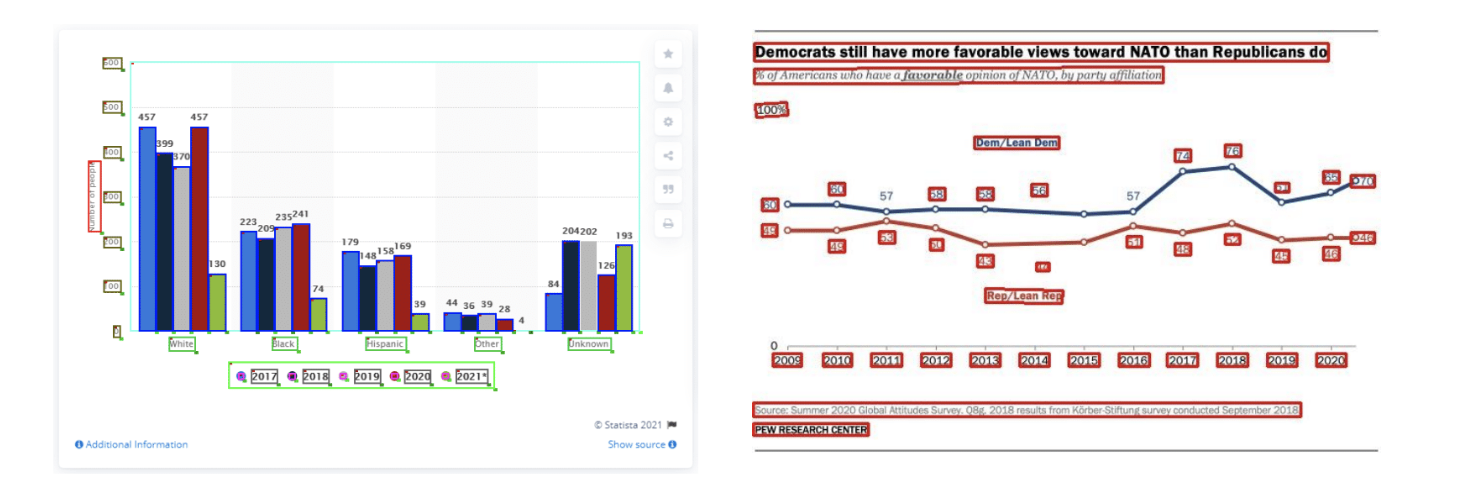

Open Challenges & Ongoing Research

- Computer vision challenges (e.g. chart data extraction)

Ref: Masry et al. (2022)

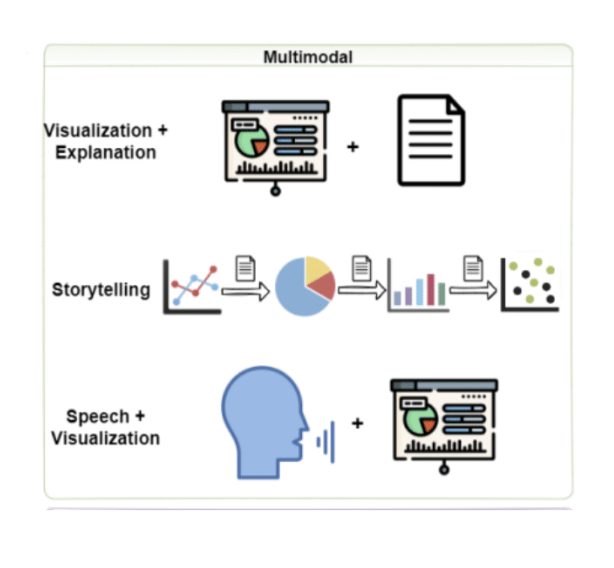

Open Challenges & Ongoing Research

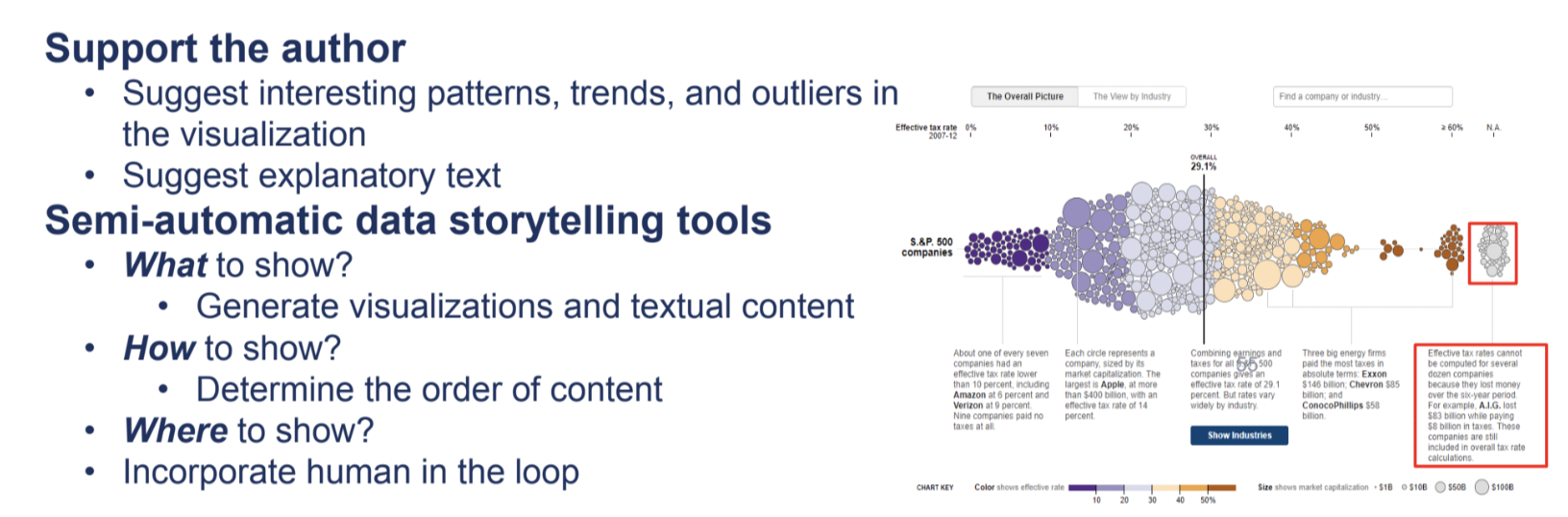

- How can we effectively combine text and visualization in data stories?

Open Challenges & Ongoing Research

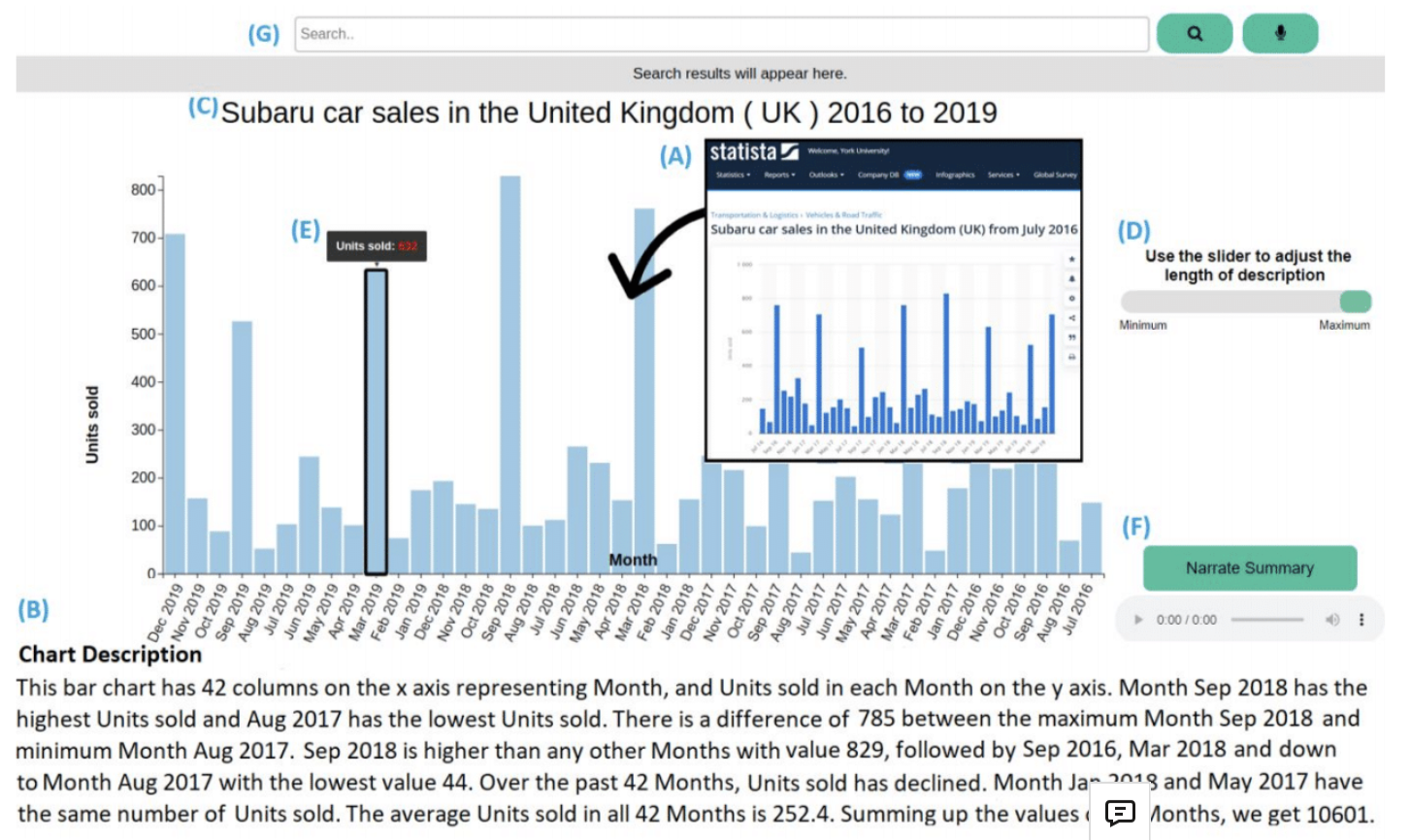

- NLP for visualization accessibility

Ref: Sharif et al. (2022)

Open Challenges & Ongoing Research

- NLP for visualization accessibility

Ref: Alam, Islam, and Hoque (2023)

Project Proposal Feedback

- For the remainder of the lab, please look over the feedback on your project proposals with your group members

- Any questions? Concerns? Any progress you want feedback on? Feel free to ask now

References

Alam, Md Zubair Ibne, Shehnaz Islam, and Enamul Hoque. 2023. “SeeChart: Enabling Accessible Visualizations Through Interactive Natural Language Interface for People with Visual Impairments.” IUI ’23. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3581641.3584099.

Kantharaj, Shankar, Xuan Long Do, Rixie Tiffany Leong, Jia Qing Tan, Enamul Hoque, and Shafiq Joty. 2022. “OpenCQA: Open-Ended Question Answering with Charts.” In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, edited by Yoav Goldberg, Zornitsa Kozareva, and Yue Zhang, 11817–37. Abu Dhabi, United Arab Emirates: Association for Computational Linguistics. https://doi.org/10.18653/v1/2022.emnlp-main.811.

Kantharaj, Shankar, Rixie Tiffany Leong, Xiang Lin, Ahmed Masry, Megh Thakkar, Enamul Hoque, and Shafiq Joty. 2022. “Chart-to-Text: A Large-Scale Benchmark for Chart Summarization.” In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), edited by Smaranda Muresan, Preslav Nakov, and Aline Villavicencio, 4005–23. Dublin, Ireland: Association for Computational Linguistics. https://doi.org/10.18653/v1/2022.acl-long.277.

Masry, Ahmed, Xuan Long Do, Jia Qing Tan, Shafiq Joty, and Enamul Hoque. 2022. “ChartQA: A Benchmark for Question Answering about Charts with Visual and Logical Reasoning.” In Findings of the Association for Computational Linguistics: ACL 2022, edited by Smaranda Muresan, Preslav Nakov, and Aline Villavicencio, 2263–79. Dublin, Ireland: Association for Computational Linguistics. https://doi.org/10.18653/v1/2022.findings-acl.177.

Setlur, Vidya, Enamul Hoque, Dae Hyun Kim, and Angel X. Chang. 2020. “Sneak Pique: Exploring Autocompletion as a Data Discovery Scaffold for Supporting Visual Analysis.” In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, 966–78. UIST ’20. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3379337.3415813.

Sharif, Ather, Olivia H. Wang, Alida T. Muongchan, Katharina Reinecke, and Jacob O. Wobbrock. 2022. “VoxLens: Making Online Data Visualizations Accessible with an Interactive JavaScript Plug-in.” In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. CHI ’22. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3491102.3517431.

Shi, Danqing, Xinyue Xu, Fuling Sun, Yang Shi, and Nan Cao. 2020. “Calliope: Automatic Visual Data Story Generation from a Spreadsheet.” CoRR abs/2010.09975. https://arxiv.org/abs/2010.09975.

Tang, Benny, Angie Boggust, and Arvind Satyanarayan. 2023. “VisText: A Benchmark for Semantically Rich Chart Captioning.” In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), edited by Anna Rogers, Jordan Boyd-Graber, and Naoaki Okazaki, 7268–98. Toronto, Canada: Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.acl-long.401.

Wang, Yun, Zhida Sun, Haidong Zhang, Weiwei Cui, Ke Xu, Xiaojuan Ma, and Dongmei Zhang. 2020. “DataShot: Automatic Generation of Fact Sheets from Tabular Data.” IEEE Transactions on Visualization and Computer Graphics 26: 895–905. https://api.semanticscholar.org/CorpusID:201093978.