```python
import pandas as pd, numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA
from matplotlib import pyplot as plt
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
import lime, lime.lime_tabular, shap
```

Welcome back to the Visualization for Machine Learning Lab!

Week 6: Interpreting Black Box Models with LIME and SHAP (and PCA if time)

Miscellaneous

- Homework 2 due TONIGHT at 11:59pm

- Homework 1 grades released - ask Rithwick (rg4361@nyu.edu) any questions about grades

Interpreting Black Box Models

- Black box models are not as intuitive as white box models: it can be hard to describe the model’s decision boundary in a human-understandable way

- However, there are ways to analyze which factors affect model outputs:

- LIME

- SHAP

Local Interpretable Model-Agnostic Explanations (LIME)

- What is LIME?

- LIME is a technique (and a Python library) that explains the prediction of any classifier by learning an interpretable model locally around that prediction

LIME

- Why is LIME a good model explainer?

- Interpretable by non-experts

- Local fidelity (replicates the model’s behavior in the vicinity of the instance being predicted)

- Model agnostic (does not make any assumptions about the model)

- Global perspective (when used on a representative set, LIME can provide a global intuition of the model)

Ref: Sharma (2020)

LIME

- How does LIME work?

- For an in-depth explanation of the math, see Sharma (2020)

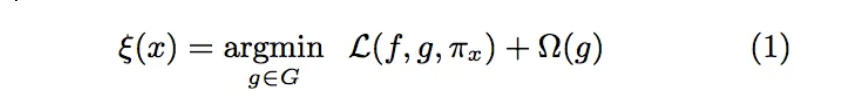

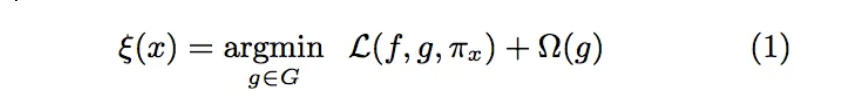

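- Fidelity-Interpretability Trade-off

- We want an explainer that is faithful (replicates our model’s behavior locally) and interpretable. To achieve this, LIME minimizes:

$$\xi(x) = \underset{g \in G}{\arg\min}\ \mathcal{L}(f, g, \pi_x) + \Omega(g)$$

where $G$ is the class of interpretable models being considered.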

Ref: Sharma (2020)

LIME

f: the original predictor (the black box model being explained)

x: the instance being explained (its original features)

g: the explanation model, which could be a linear model, a decision tree, or a falling rule list

$\pi_x$ (Pi): a proximity measure between an instance z and x, used to define the locality around x. It weights the perturbed instances z′ by their distance from x.

First term, $\mathcal{L}(f, g, \pi_x)$: a measure of how unfaithful g is in approximating f within the locality defined by $\pi_x$. The original paper calls this the locality-aware loss.

Last term, $\Omega(g)$: a measure of the complexity of the explanation g (e.g. if your explanation model is a decision tree, it can be the depth of the tree)

Ref: Sharma (2020)
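To make the objective concrete, here is a minimal from-scratch sketch of the idea; it is not the `lime` library's implementation. Assumptions: the perturbed instances z are Gaussian noise around x, $\pi_x$ is the exponential kernel $\exp(-D(x, z)^2 / \sigma^2)$ with D the Euclidean distance, g is a weighted ridge regression, and $\Omega(g)$ is only approximated by the ridge penalty (the LIME paper instead limits the number of features used). The helper name `lime_sketch` and its parameters are hypothetical.

```python
import numpy as np
from sklearn.linear_model import Ridge


def lime_sketch(f, x, num_samples=5000, kernel_width=None, seed=0):
    """Fit a weighted linear surrogate g around instance x (hypothetical helper).

    f: black-box function mapping an (n, d) array to n scores,
       e.g. the predicted probability of one class.
    Returns (intercept, coefficients) of g.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # assumption: width grows with dimension

    # Perturbed instances z, sampled around x
    Z = x + rng.normal(size=(num_samples, d))

    # Proximity pi_x(z) = exp(-D(x, z)^2 / sigma^2): nearby samples count more
    distances = np.linalg.norm(Z - x, axis=1)
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)

    # Minimizing the pi_x-weighted squared error plays the role of L(f, g, pi_x);
    # the ridge penalty (alpha) stands in for the complexity term Omega(g)
    g = Ridge(alpha=1.0)
    g.fit(Z, f(Z), sample_weight=weights)
    return g.intercept_, g.coef_


# A black box that is secretly linear, so g should recover its slopes
black_box = lambda Z: 1 + 2 * Z[:, 0] - 3 * Z[:, 1]
intercept, coef = lime_sketch(black_box, np.array([1.0, 2.0]))
print(intercept, coef)
```

For a genuinely nonlinear f, the coefficients describe only the local slope of f near x, which is exactly the "local fidelity" LIME aims for.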

LIME Example in Python

We first import the relevant libraries:

LIME Example in Python

Recall the Iris dataset, which contains flowers that can be sorted into 3 species (classes) based on 4 features:
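The slide's loading code is not reproduced here. A minimal sketch, assuming the data is kept as a pandas DataFrame `X` and Series `y` (the later code uses `X_train.columns` and `X_test.values`, which suggests a DataFrame):

```python
import pandas as pd
from sklearn import datasets

# Load Iris into a DataFrame so the feature names travel with the data
iris = datasets.load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = pd.Series(iris.target, name="class")

print(X.head())
print(y.value_counts().sort_index())  # 50 flowers in each of classes 0, 1, 2
```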

LIME Example in Python

We ignore Class 0 for now (we will see why shortly), and split the data into a training set (80%) and test set (20%):
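A sketch of this step, assuming the same DataFrame setup as above (repeated here so the block runs on its own) and `random_state=42` for the split, which is an assumption. Dropping class 0 leaves a binary problem (classes 1 and 2), which also lets `precision_score` and `recall_score` run with their default binary averaging.

```python
import pandas as pd
from sklearn import datasets
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = pd.Series(iris.target)

# Keep only classes 1 and 2 so the problem is binary
mask = y != 0
X, y = X[mask], y[mask]

# 80% training / 20% test (random_state is an assumption for reproducibility)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
print(X_train.shape, X_test.shape)
```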

LIME Example in Python

We train a random forest classifier on our training set, and generate predictions for our test set:

```python
# Train a random forest on the training set and predict the test set
classifier = RandomForestClassifier(random_state=42)
classifier.fit(X_train, y_train)
predicted = classifier.predict(X_test)

# Binary metrics: pos_label defaults to 1, so class 1 is the "positive" class
pre = precision_score(y_test, predicted)
rec = recall_score(y_test, predicted)
acc = accuracy_score(y_test, predicted)

print("Precision: ", pre)
print("Recall: ", rec)
print("Accuracy: ", acc)
```

Output:

```
Precision: 1.0
Recall: 0.9166666666666666
Accuracy: 0.95
```

LIME Example in Python

We use lime to create an explainer based on our training set, and generate an explanation for the sample at index 10 (the 11th sample) in our test set:

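```python
# Build a LIME explainer from the training data (two classes: 1 and 2)
explainer1 = lime.lime_tabular.LimeTabularExplainer(X_train.values,
                                                    feature_names=X_train.columns.values.tolist(),
                                                    class_names=['class 1', 'class 2'],
                                                    verbose=True,  # print intercept, local prediction, model prediction
                                                    mode='classification',
                                                    random_state=42)

# Explain the test sample at index 10 using the forest's class probabilities
lime_values = explainer1.explain_instance(X_test.values[10], classifier.predict_proba, num_features=4)

# lime_values also exposes properties such as intercept, local_pred, and score
lime_values.show_in_notebook()  # renders the three-part explanation
```

Output (printed because `verbose=True`):

```
Intercept 0.6704191905472919
Prediction_local [0.233346]
Right: 0.22
```

LIME Example in Python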

- We see that this explanation has three parts:

- On the left, we see that the classifier estimated a 78% chance that the sample is from Class 1 and a 22% chance that it is from Class 2

- In the center, we see that petal length and width increased the probability that the sample is from Class 1, while sepal length and width increased the probability that it is from Class 2. Petal length and width also had a larger influence than sepal length and width

- On the right, we see the sample's actual feature values. The color of each row corresponds to the class that feature is "voting for"

Shapley Values (SHAP)

- Shapley values are a method from coalitional game theory that tells us how to fairly distribute the “payout” among the features

- The “game” is the prediction task for a single instance of the dataset

- The “gain” is the actual prediction for this instance minus the average prediction for all instances

- The “players” are the feature values of the instance that collaborate to receive the gain (= predict a certain value)

- Useful for debugging models, explaining individual predictions, and understanding the data

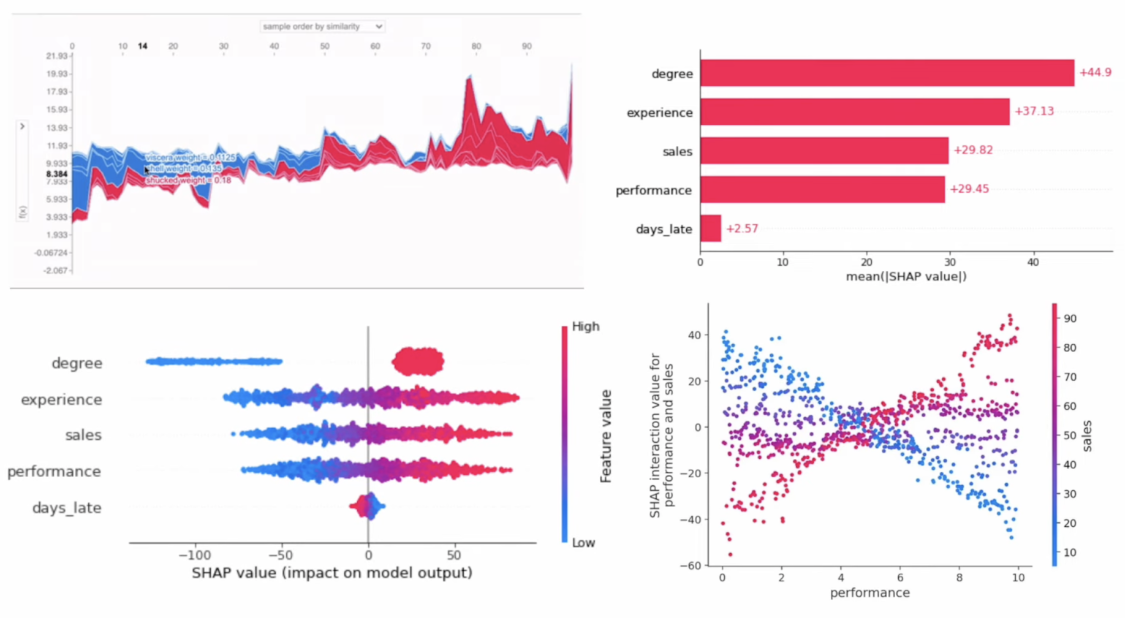
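To make the “gain” and “players” concrete, here is a brute-force sketch that computes exact Shapley values by enumerating every coalition of features. It is not how the `shap` library computes them: the number of coalitions grows as 2^n, so `shap` relies on faster algorithms (such as Tree SHAP for tree models). Assumptions: a feature outside a coalition is “removed” by averaging over a small background dataset, and `exact_shapley_values`, `model`, `background`, and `x` are hypothetical names.

```python
from itertools import combinations
from math import factorial

import numpy as np


def exact_shapley_values(f, x, background):
    """Exact Shapley values for one instance x (hypothetical helper).

    The value of a coalition S is the average prediction when the features
    in S are set to x's values and the remaining features keep the values
    of each background row.
    """
    n = len(x)

    def value(coalition):
        Z = background.copy()
        cols = list(coalition)
        Z[:, cols] = x[cols]
        return f(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for S in combinations(others, size):
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi


# Toy model and data (hypothetical)
model = lambda Z: 2 * Z[:, 0] + 3 * Z[:, 1] - Z[:, 2]
background = np.array([[0.0, 0.0, 0.0], [2.0, 2.0, 2.0]])
x = np.array([3.0, 1.0, 0.0])

phi = exact_shapley_values(model, x, background)
gain = model(x[None, :])[0] - model(background).mean()
print(phi, phi.sum(), gain)
```

Because the toy model is linear, each value reduces to weight × (x_i minus the background mean of feature i), giving 4, 0, and 1; together they share out the gain of 9 − 4 = 5 exactly.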

SHAP Example

- Suppose the HR department of your company has asked you to build a model that predicts each employee’s annual bonus

- We are given a dataset with 1000 employees and the following 5 features:

- Experience (continuous)

- Degree (binary)

- Sales (continuous)

- Performance (continuous)

- Days Late (continuous)

Ref: ADataOdyssey (2023)

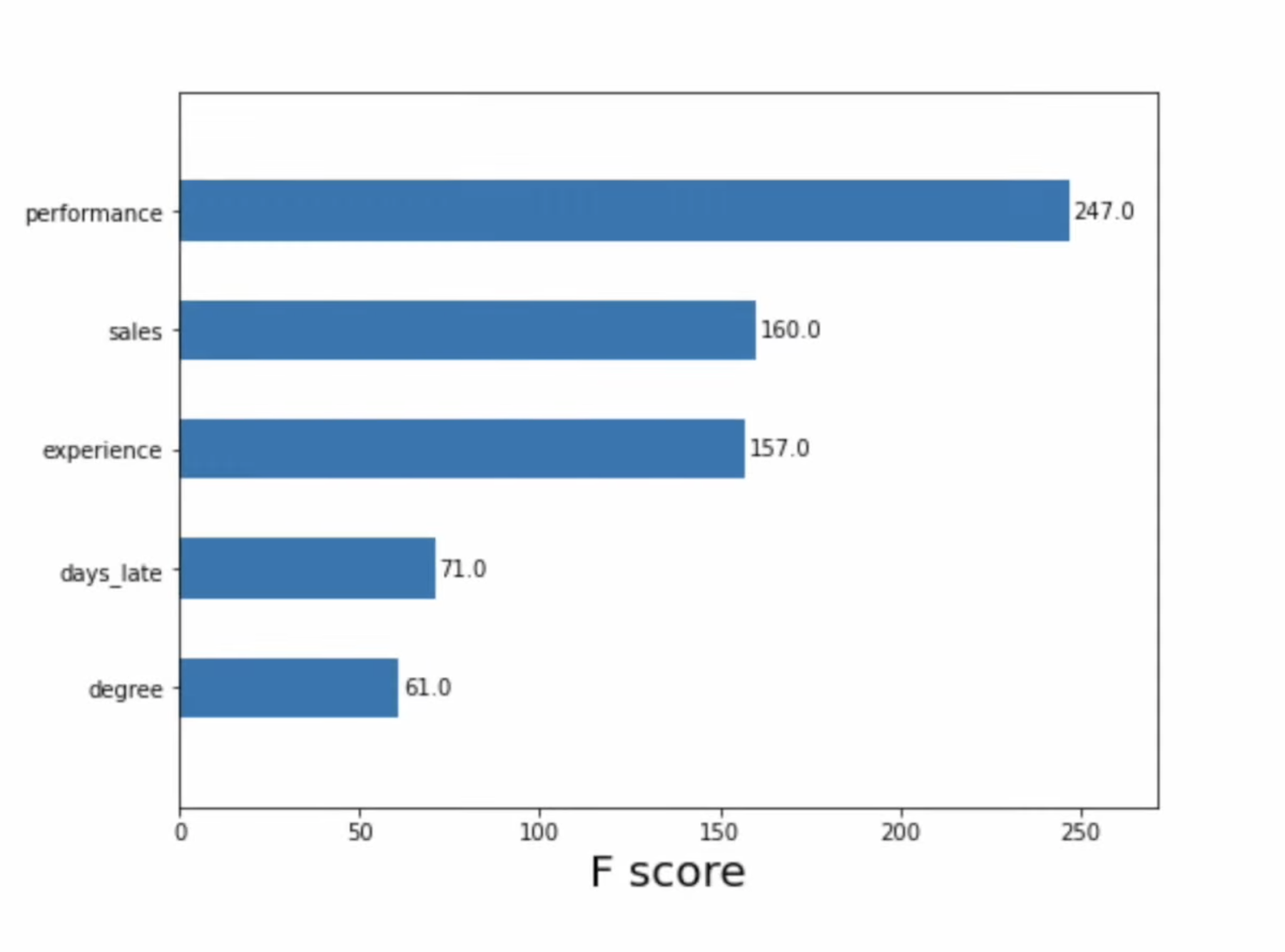

SHAP Example

We can use feature importance to understand how important each feature is to model predictions in general, but it cannot tell us:

1. How a feature affects individual predictions

2. Whether a feature tends to increase or decrease the prediction

3. (For classification) whether a feature increases or decreases the probability of a positive prediction

Ref: ADataOdyssey (2023)

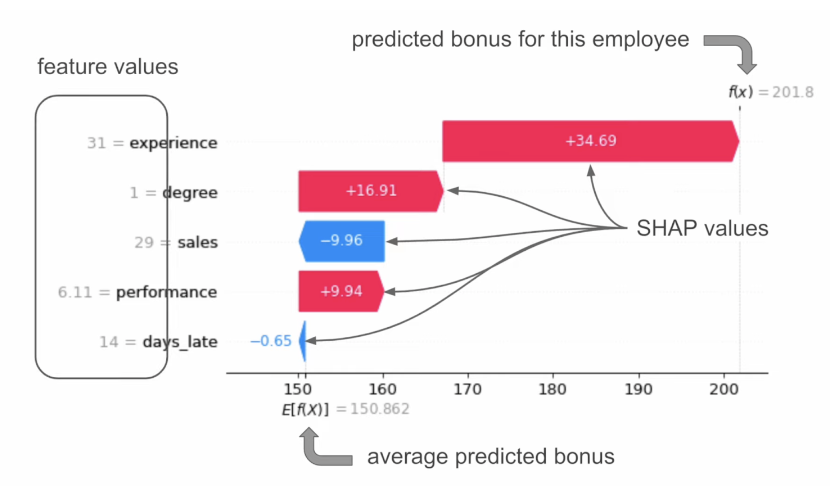

SHAP Example (Waterfall Plot)

- A positive SHAP value means the feature pushed this prediction above the average prediction: for this employee, having a degree raised the predicted bonus $16.91 above the average, i.e. “Degree has increased the prediction”

Ref: ADataOdyssey (2023)

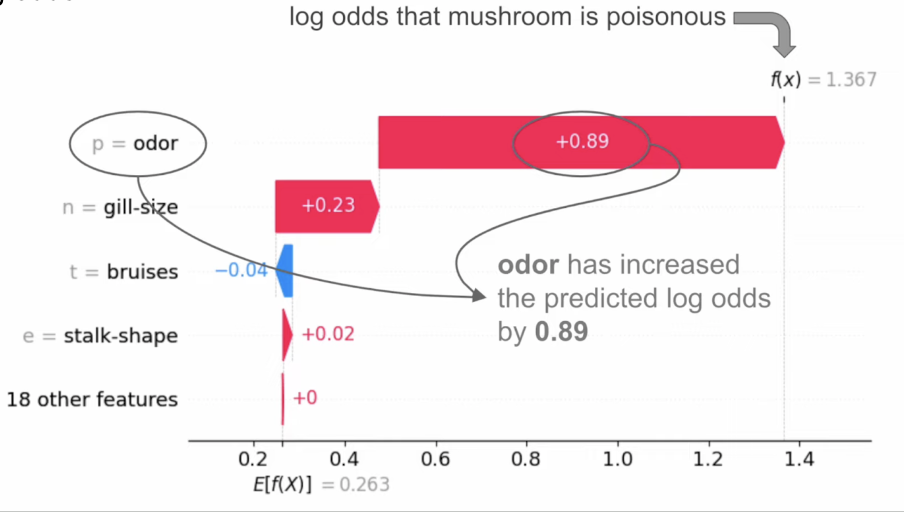

SHAP Example (Classification)

- Suppose we have a classification model that predicts whether mushrooms are poisonous (class 1) or edible (class 0)

- We work in log odds: the logarithm of the odds p / (1 - p), where p is the predicted probability that the mushroom is poisonous. Odds tell us how likely it is that an event will happen relative to it not happening

Ref: ADataOdyssey (2023)
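In log-odds space the SHAP values add up to the model output; converting that total back to a probability takes the logistic function. A minimal sketch with made-up numbers (the base value and contributions below are hypothetical, not taken from the mushroom model):

```python
import math


def log_odds(p):
    """Log odds of probability p: log(p / (1 - p))."""
    return math.log(p / (1 - p))


def probability(z):
    """Inverse of log_odds (the logistic, or sigmoid, function)."""
    return 1 / (1 + math.exp(-z))


# Hypothetical values read off a SHAP plot for one mushroom
base_value = -0.2                     # average model output, in log odds
shap_contributions = [1.5, -0.4, 0.3]

# SHAP values add up in log-odds space, not in probability space
output_log_odds = base_value + sum(shap_contributions)
print(output_log_odds, probability(output_log_odds))
print(probability(base_value))        # the average prediction as a probability
```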

Other SHAP Plots (for model as a whole)

Ref: ADataOdyssey (2023)

SHAP Examples in Python

We create a SHAP explainer and generate SHAP values for our test set:

SHAP Examples in Python

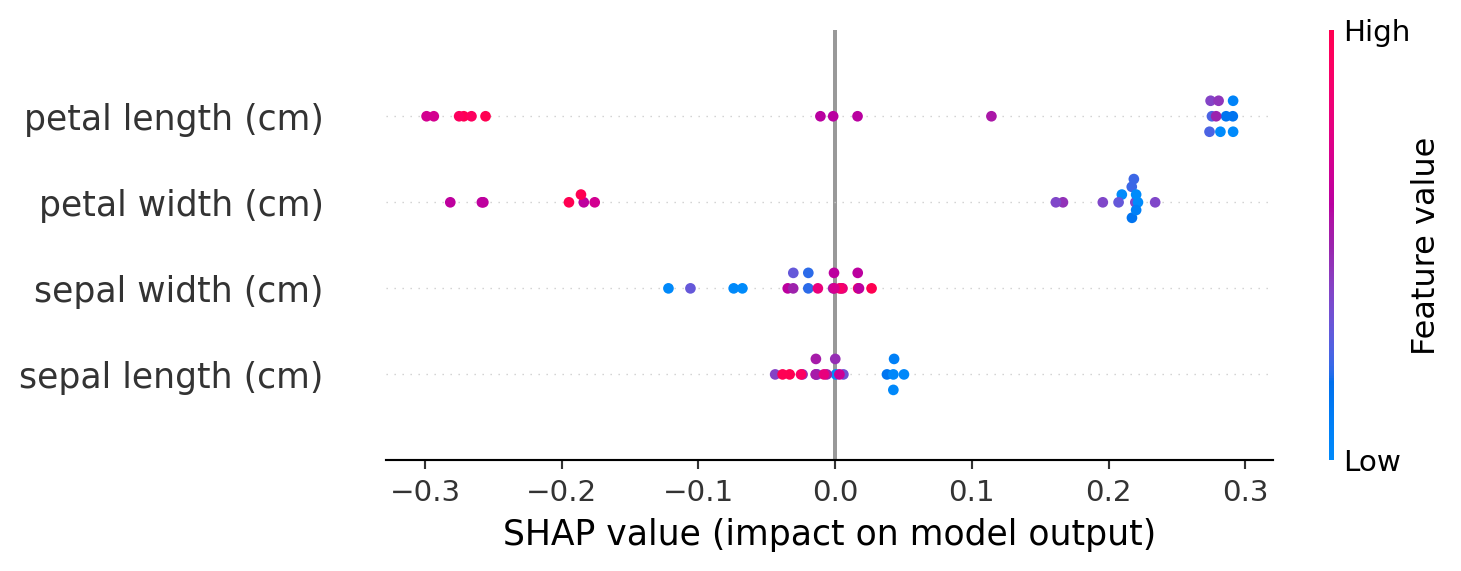

- We can visualize these SHAP values as a beeswarm plot:

- Each dot is one row (sample) of the dataset; every sample appears once in each feature’s row

- Features (y-axis) are ranked from top to bottom by their mean absolute SHAP values for the entire dataset

- X-axis position corresponds to each dot's SHAP value

- Color corresponds to the raw feature value
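The y-axis ordering can be reproduced from a matrix of SHAP values. A small sketch, assuming `shap_matrix` is an (n_samples, n_features) NumPy array for one class (with older `shap` versions, one element of the per-class list); the helper name and the numbers are hypothetical:

```python
import numpy as np


def rank_features_by_mean_abs_shap(shap_matrix, feature_names):
    """Order features as a beeswarm plot does: by mean |SHAP value|."""
    importance = np.abs(shap_matrix).mean(axis=0)
    order = np.argsort(importance)[::-1]  # largest first, i.e. top of the plot
    return [(feature_names[i], float(importance[i])) for i in order]


# Hypothetical SHAP values for 3 samples and 2 features
example = np.array([[0.5, -0.1], [-0.4, 0.2], [0.3, 0.0]])
ranking = rank_features_by_mean_abs_shap(example, ["sepal width", "petal length"][::-1])
print(ranking)
```

Taking the absolute value matters: a feature that pushes some predictions strongly up and others strongly down is still important, even though its signed SHAP values average out.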

SHAP Examples in Python

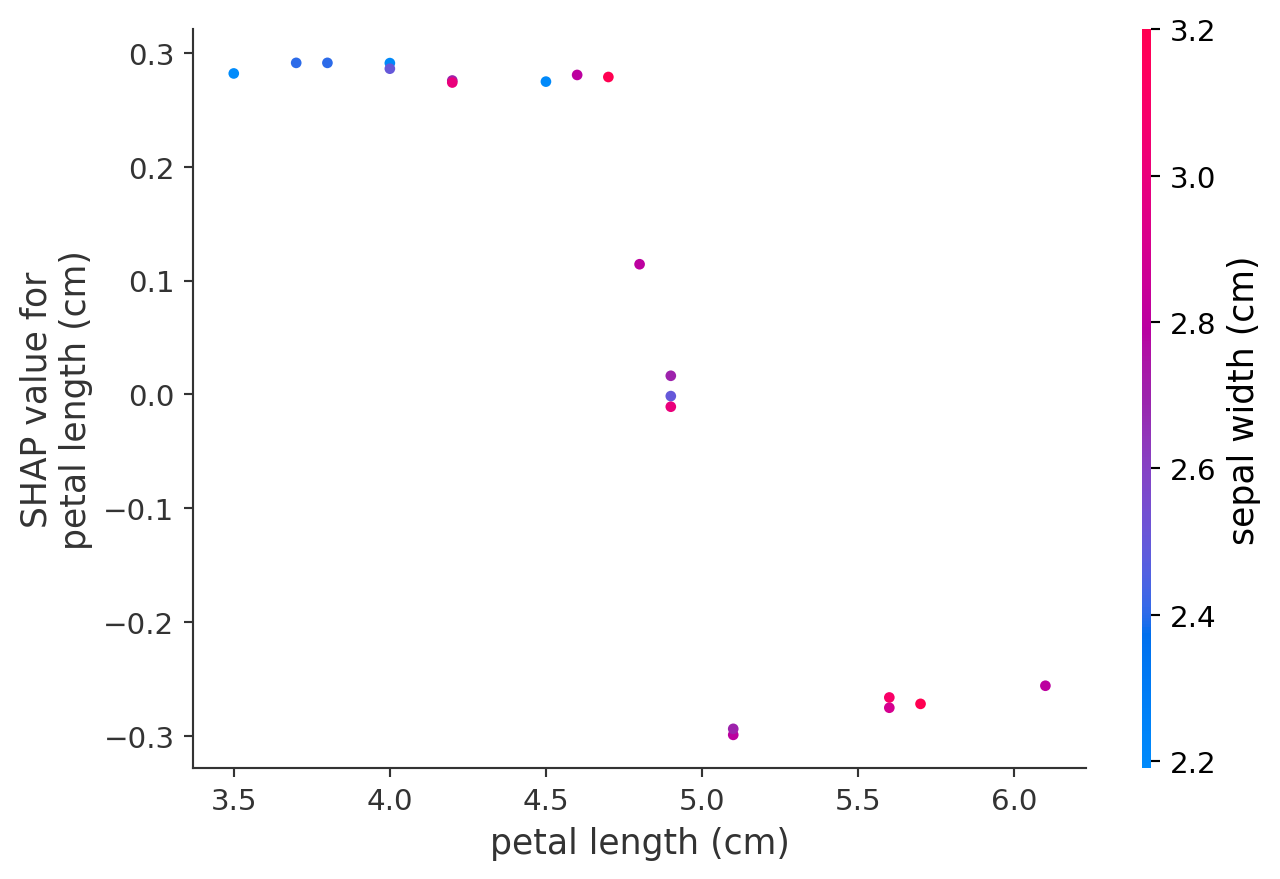

- Dependence plots show the relationship between SHAP and raw values for a specific feature

- The vertical spread of SHAP values at a fixed feature value is due to interaction effects with other features

- Dependence plots are often coloured by the values of a strongly interacting feature (in this case, sepal width)

SHAP Examples in Python

Finally, force plots can be thought of as a condensed waterfall plot:

```python
shap.initjs()  # load the JavaScript that renders force plots in the notebook

sample_idx = 10
class_idx = 0  # first class of the model (class 1 in this binary problem)

# Indexing shap_values[class_idx] assumes an older shap version that returns one array per class
shap.force_plot(explainer2.expected_value[class_idx], shap_values[class_idx][sample_idx], X_test.values[sample_idx])
```

SHAP Examples in Python

```python
shap.initjs()

class_idx = 1  # explain the same sample (sample_idx = 10) for the second class, class 2
shap.force_plot(explainer2.expected_value[class_idx], shap_values[class_idx][sample_idx], X_test.values[sample_idx])
```

Further SHAP Reading

See this article for a more in-depth breakdown of how to read each type of SHAP plot.

Ref: Cooper (2023)

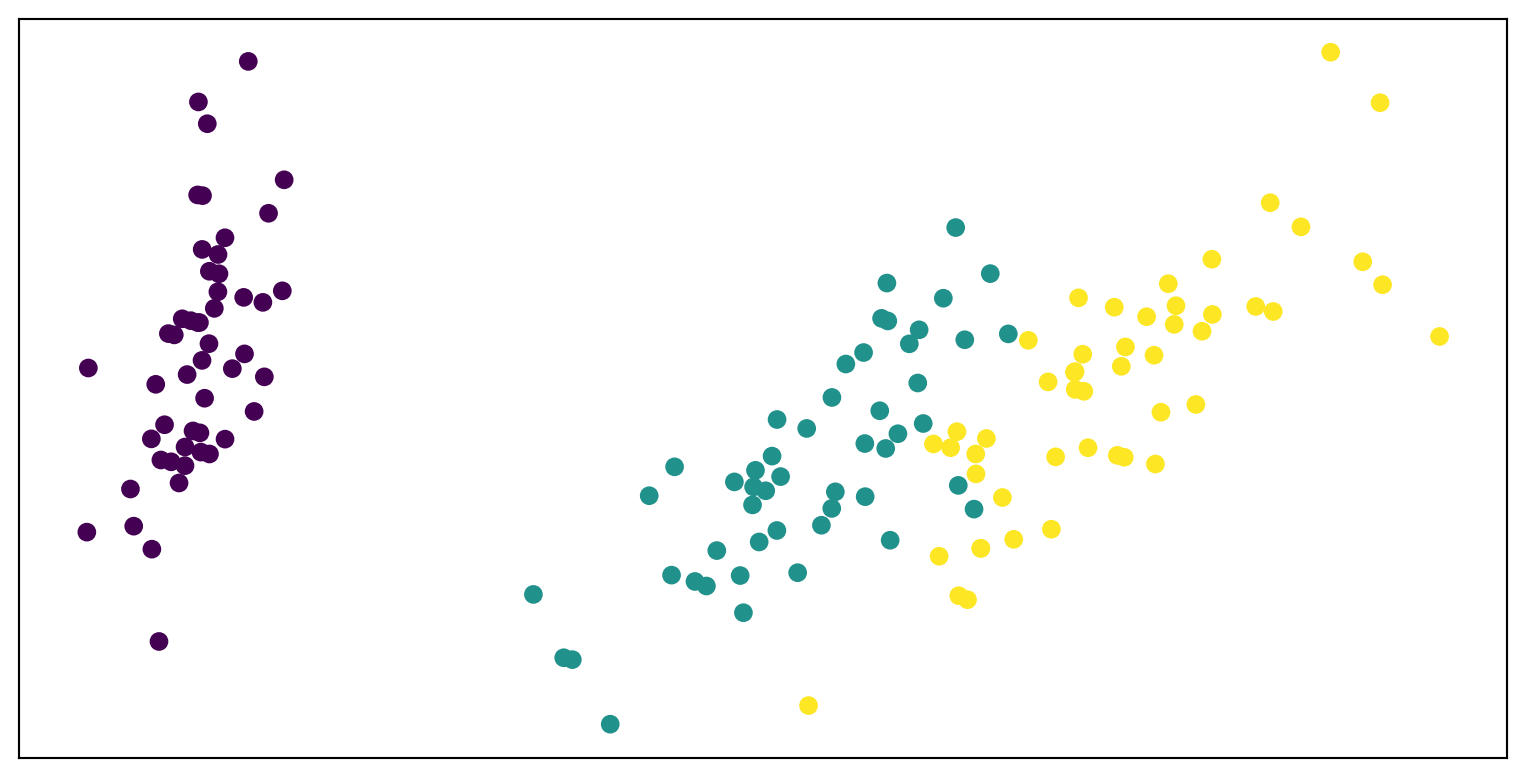

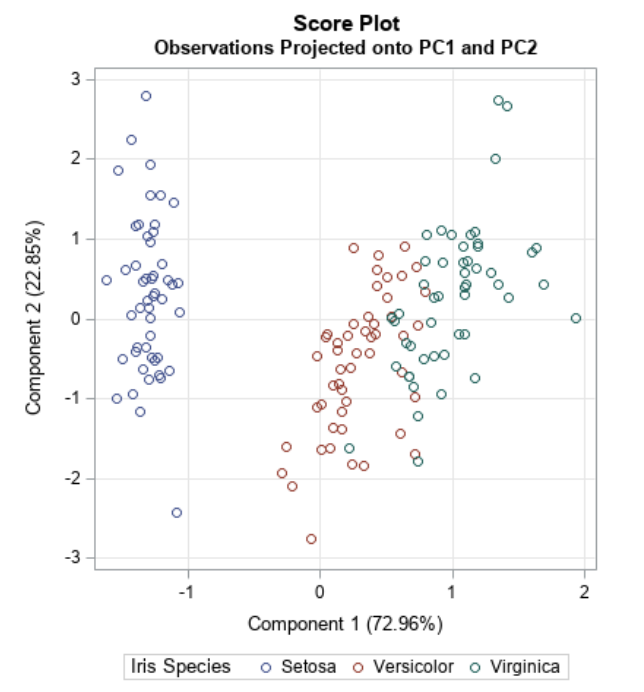

Principal Component Analysis (PCA)

- Dimensionality reduction technique that lets us find clusters of similar data points described by many features, by boiling those features down to a few “principal components” (often just two, PC1 and PC2)

- PC1 and PC2 represent the directions in the data space with the highest and second-highest variance, respectively

- A way to bring out strong patterns in large and complex datasets

Ref: Team (2018)

Video Intro

Ref: Starmer (2017)

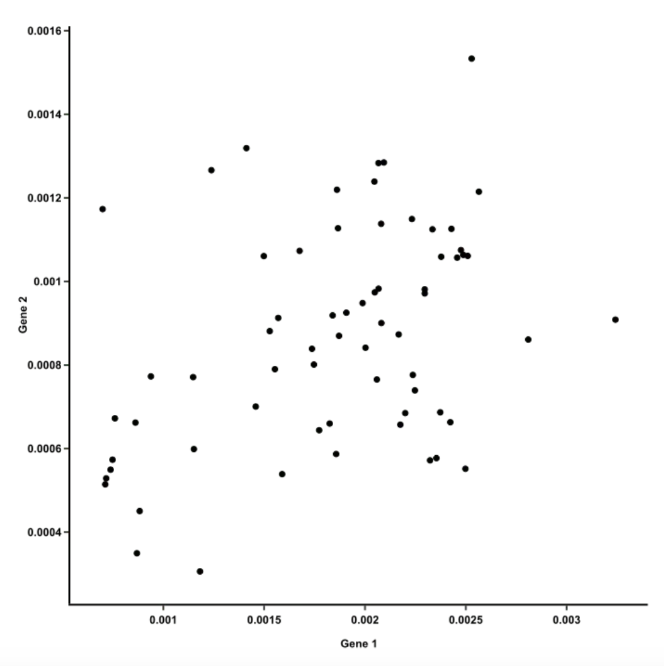

PCA Example

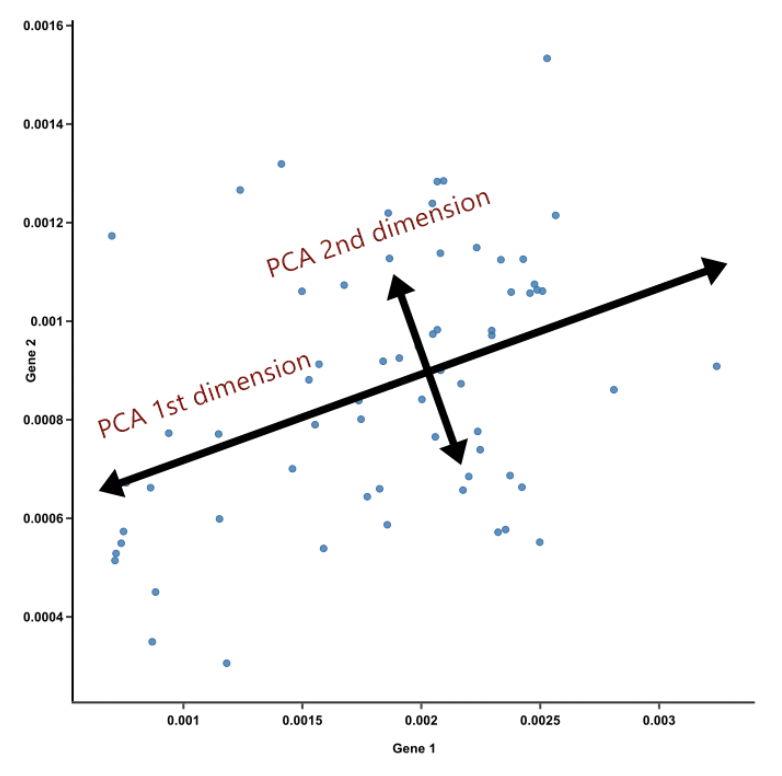

- Suppose we have a dataset that measures the expression of 15 genes from 60 mice

- How do you know which mice are similar to one another, and which ones are different?

- How do you know which genes are responsible for such similarities or differences?

- Let’s start by plotting the data for two genes against each other:

- Each dot = 1 mouse

Ref: Team (2018)

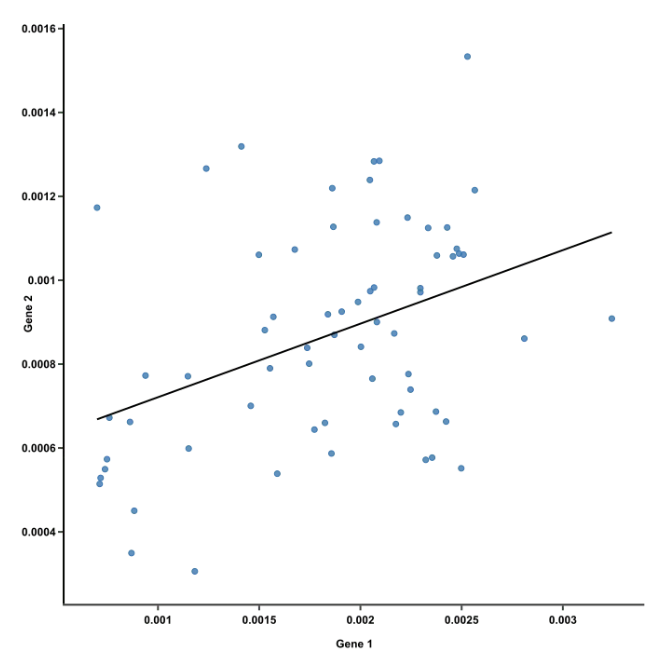

PCA Example

- The first principal component is the line of best fit for this data, measured by perpendicular distance. When the original dots are projected onto it, two things happen:

- The total spread (variance) of the projected points is maximized. This means they can be distinguished from one another as clearly as possible.

- The total (squared) distance from the original points to their projections is minimized. This means the projection represents the original data as closely as possible.

- In other words, PC1 conveys the maximum variation among data points AND has the minimum projection error (both are achieved by the same line)

Ref: Team (2018)
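Why are the two goals achieved at the same time? For any line through the center, each dot's squared distance from the center splits (by Pythagoras) into its squared position along the line plus its squared distance to the line. So spread + error equals the total variance, a constant fixed by the data, and maximizing one minimizes the other. A small NumPy check on hypothetical two-gene data:

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical expression levels of 2 correlated genes in 60 mice
X = rng.multivariate_normal([5.0, 3.0], [[3.0, 2.0], [2.0, 2.0]], size=60)
Xc = X - X.mean(axis=0)  # anchor the line at the center of the cloud


def spread_and_error(direction):
    """Spread of the projected dots and mean squared distance to the line."""
    u = direction / np.linalg.norm(direction)
    proj = Xc @ u                       # position of each projected dot on the line
    spread = np.mean(proj ** 2)         # variance of the projected dots
    residuals = Xc - np.outer(proj, u)  # original dot minus its projection
    error = np.mean(np.sum(residuals ** 2, axis=1))
    return spread, error


total = np.mean(np.sum(Xc ** 2, axis=1))  # total variance, fixed by the data

# PC1: eigenvector of the covariance matrix with the largest eigenvalue
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Xc, rowvar=False))
pc1 = eigenvectors[:, -1]  # eigh returns eigenvalues in ascending order

for angle in (0.0, 0.5, 1.0, 1.5):
    d = np.array([np.cos(angle), np.sin(angle)])
    print(angle, spread_and_error(d))
print("PC1", spread_and_error(pc1), "total", total)
```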

PCA Example

- But we have more than 2 genes! And we can’t reasonably make a plot with 15 axes

- To create PC1 for all 15 genes,

- A line is anchored at the center of the 15-D cloud of dots and rotated in every direction of the 15-D space, all the while acting as a “mirror” onto which the original 60 dots are projected

- This rotation continues until the spread of the projected points is maximized

- The rotating line now describes the most variation among the 60 mice, and becomes PC1

Ref: Team (2018)

PCA Example

- PC2 is the line that meets PC1 perpendicularly at the center of the cloud and describes the second-most variation in the data

Ref: Team (2018)

PCA Example

- If PCA is suitable for your data, just the first 2 or 3 principal components should convey most of the information of the data already. This is nice because:

- Principal components help reduce the number of dimensions down to 2 or 3, making it possible to see strong patterns.

- Yet we didn’t have to throw away any genes in doing so. Principal components take all dimensions and data points into account.

- Since PC1 and PC2 are perpendicular to each other, we can rotate them so that one is horizontal and the other vertical. These become the axes of our PCA plot.

Ref: Team (2018)
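The steps above (center the cloud, find perpendicular directions of maximal variance, then use them as plot axes) can be sketched with a singular value decomposition, which yields all principal directions at once instead of literally rotating a line. The random data below only stands in for the 60 × 15 mouse dataset, and `pca_2d` is a hypothetical helper:

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical data: 60 mice (rows) x 15 genes (columns) with correlated genes
X = rng.normal(size=(60, 15)) @ rng.normal(size=(15, 15))


def pca_2d(X):
    """Project X onto its first two principal components via SVD."""
    Xc = X - X.mean(axis=0)                 # center the 15-D cloud
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:2]                     # rows: unit-length PC1 and PC2 directions
    scores = Xc @ components.T              # each mouse's (PC1, PC2) coordinates
    explained = S ** 2 / np.sum(S ** 2)     # share of total variance per component
    return components, scores, explained[:2]


components, scores, explained = pca_2d(X)
print(scores.shape)                         # (60, 2)
print(components @ components.T)            # ~identity: PC1 is perpendicular to PC2
print(explained)                            # PC1 explains at least as much as PC2
```

No gene is thrown away here: every column of `X` contributes to both `scores` columns through the component weights.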

PCA Example in Python
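The lab notebook's PCA code is not reproduced here. As a sketch under that caveat, scikit-learn's `PCA` (imported at the top of the lab) can project the four Iris features onto PC1 and PC2 and plot them, coloring each flower by its class:

```python
from matplotlib import pyplot as plt
from sklearn import datasets
from sklearn.decomposition import PCA

iris = datasets.load_iris()

# Reduce the 4 Iris features to 2 principal components
pca = PCA(n_components=2)
projected = pca.fit_transform(iris.data)  # one (PC1, PC2) point per flower
print(pca.explained_variance_ratio_)      # share of variance captured by PC1 and PC2

plt.scatter(projected[:, 0], projected[:, 1], c=iris.target, cmap="viridis")
plt.xlabel("PC1")
plt.ylabel("PC2")
plt.title("Iris projected onto the first two principal components")
plt.show()
```

On the unscaled Iris features, PC1 alone captures about 92% of the variance.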

Code from Today

This Jupyter notebook collates the code from today’s lab.