Visualization for NLP and LLMs

CS-GY 9223 - Fall 2025

Claudio Silva

NYU Tandon School of Engineering

2025-11-03

NLP and Large Language Models

Today’s Agenda

- Natural Language Processing (NLP) basics

  - Tasks and challenges

  - Analysis and representation

  - General resources

  - Sparse and dense text representation

  - Neural Network recap.

- Visualization for NLP

  - General Text Visualization

  - Model-agnostic explanation

  - Recurrent Neural Network (RNN) Visualization

  - Transformers (LLM) Visualization

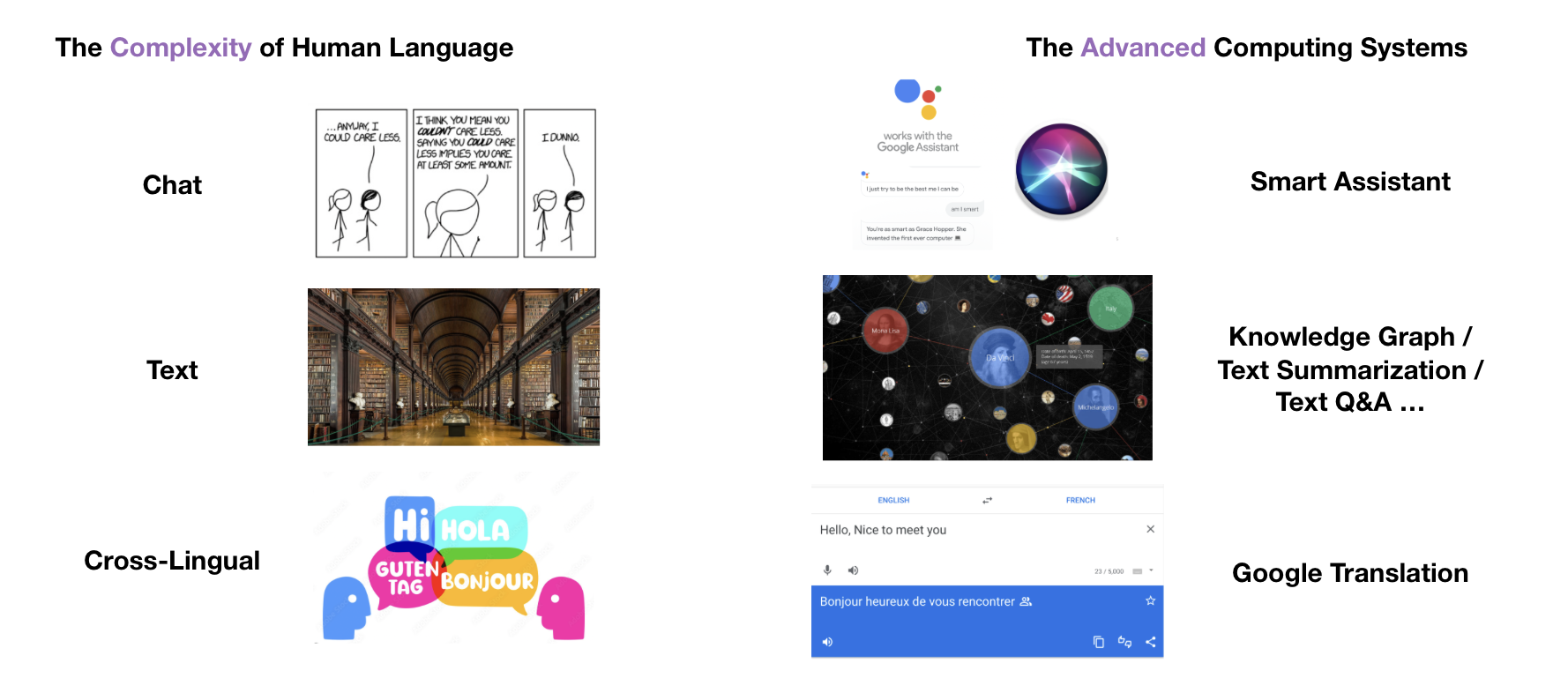

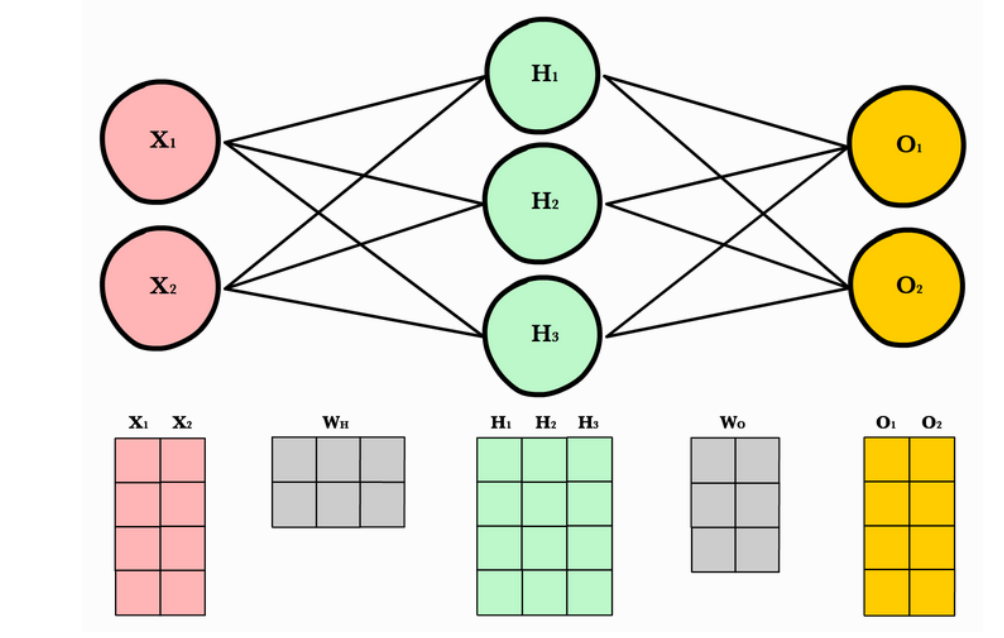

NLP basics: Tasks and challenges

| acquisition | structure | meaning | representation |
|---|---|---|---|
| sound wave | phonetics/phonology | semantics | bag-of-words |
| text corpus | morphology | pragmatics | n-gram |
| | syntax | discourse | word2vec |
| … | … | … | … |

NLP basics: Tasks and challenges

Word & Morphosyntactic Level

- Named entity recognition

- Parts-of-speech tagging

- Dependency parsing

- Grammatical error correction

- Word sense disambiguation

- Coreference resolution

Document & Semantic Level

- Text summarization

- Question answering

- Machine translation

- Sentiment analysis

- Topic modeling

- Dialogue systems

NLP basics: Analysis and representation

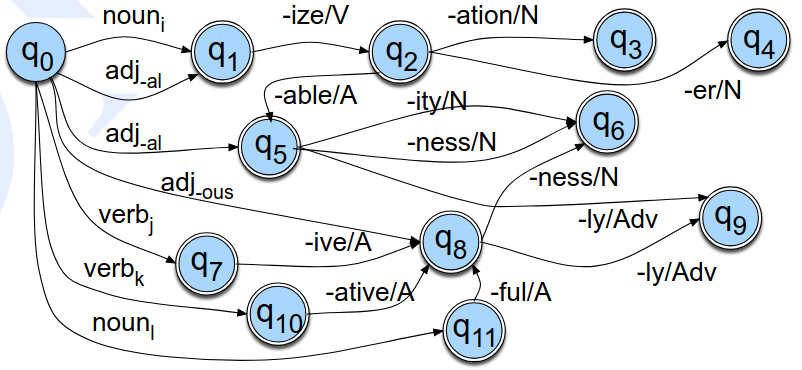

- Lexical and morphological analysis

- Finite-state morphological parsers

- Syntactic recognition and representation

- Shallow parser or chunker

- Context-free Grammar

- Morphosyntactic analysis

- Part-of-Speech (POS) tagging

- Representing Meaning

- First-order logic

- Semantic Network

- Conceptual Dependency Diagram

- Frame-based approach

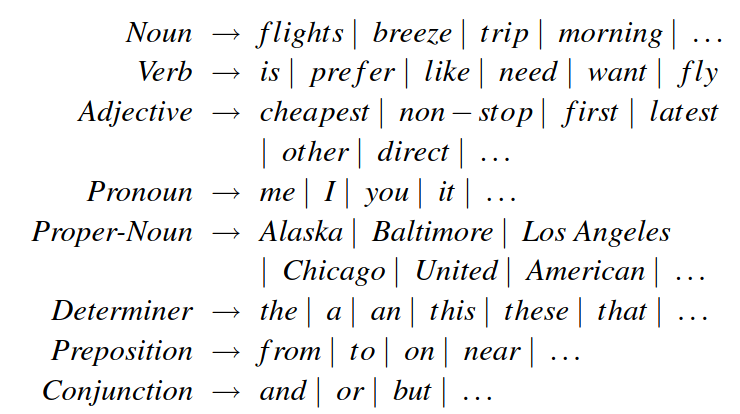

NLP basics: Analysis and representation

- Lexical and morphological analysis

- Finite-state morphological parsers

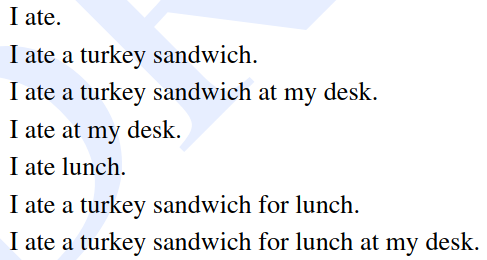

- Syntactic recognition and representation

- Shallow parser or chunker

- Context-free Grammar

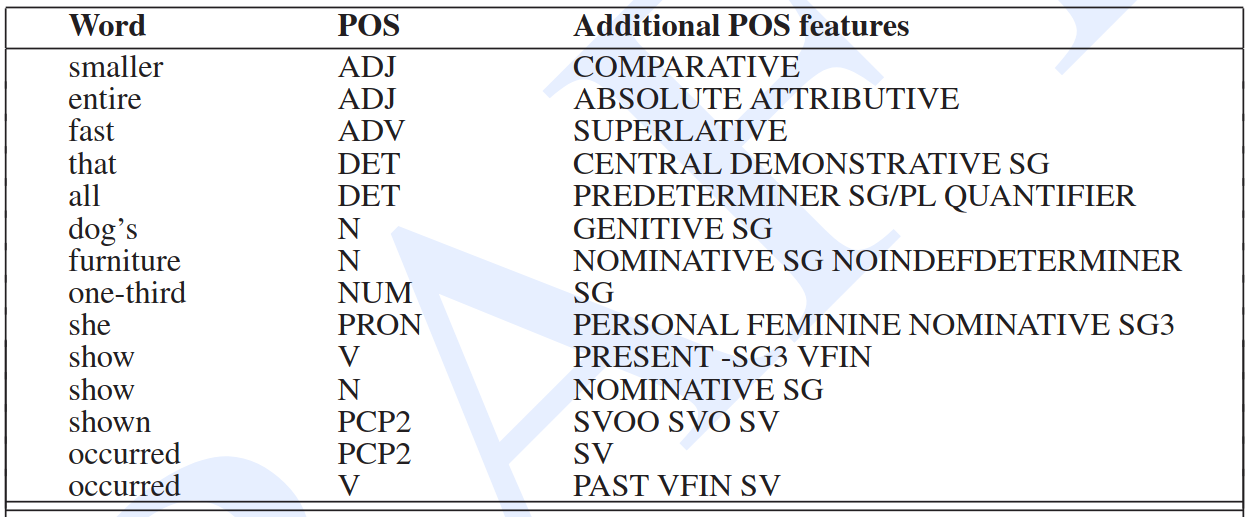

- Morphosyntactic analysis

- Part-of-Speech (POS) tagging

- Representing Meaning

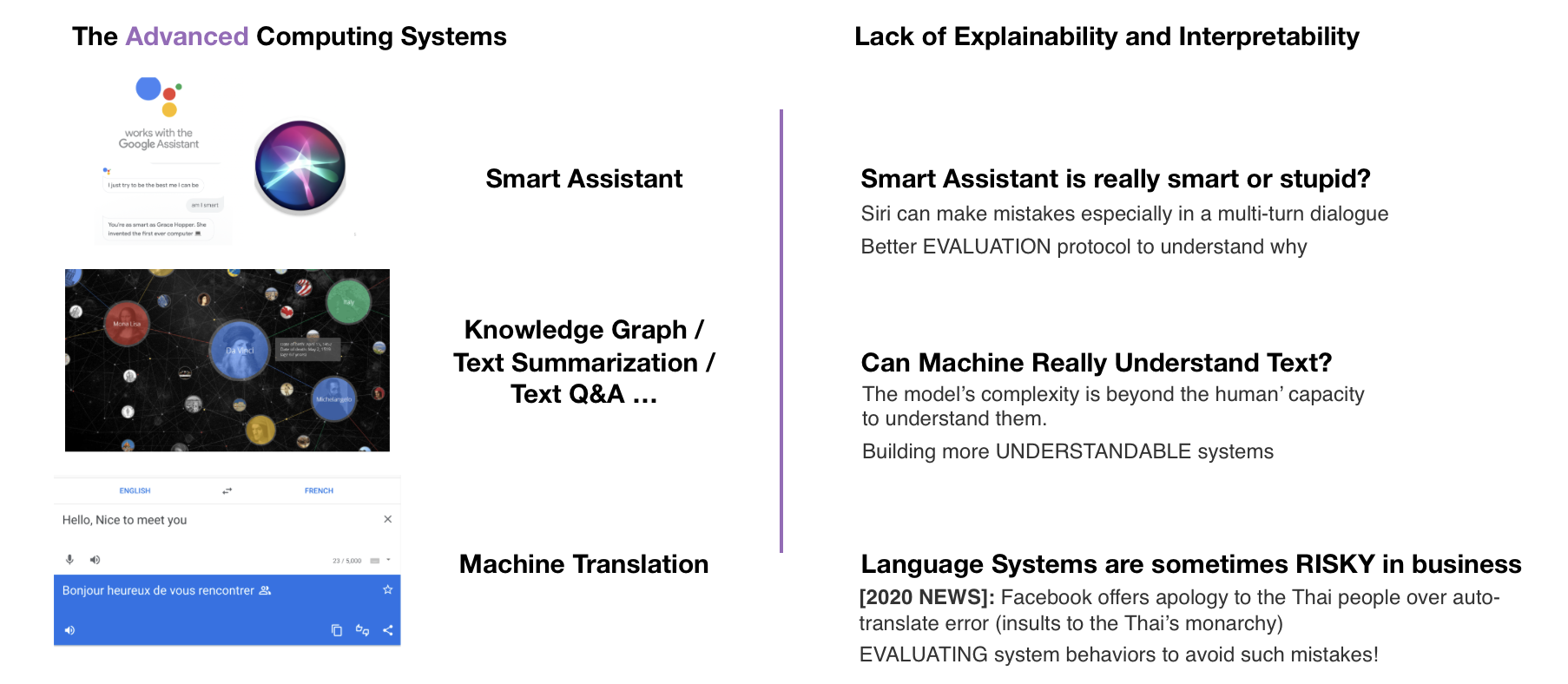

- First-order logic

- Semantic Network

- Conceptual Dependency Diagram

- Frame-based approach

NLP basics: General resources

- Lexicon: list of stems and affixes (prefixes or suffixes), together with basic information about them.

- Thesaurus: list of words and their synonyms, often for a specific domain.

- Treebank: corpus of sentences annotated with syntactic parse trees, typically including POS tags.

- Prop(osition) bank: sentences annotated with the semantic roles of verb arguments.

- FrameNet: sentences annotated with semantic roles defined by the frames that words evoke.

- Ontology: hierarchy of concepts related to a domain.

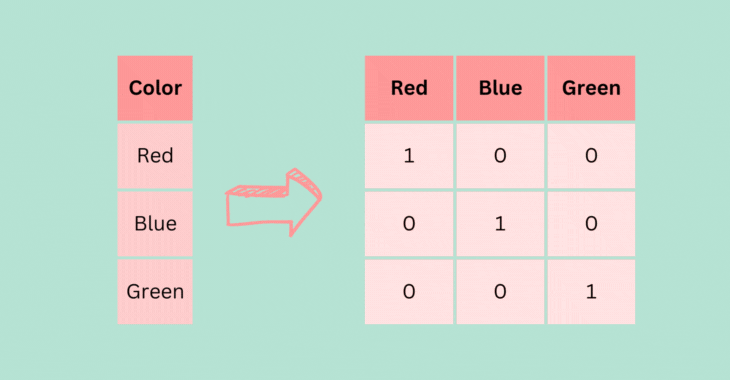

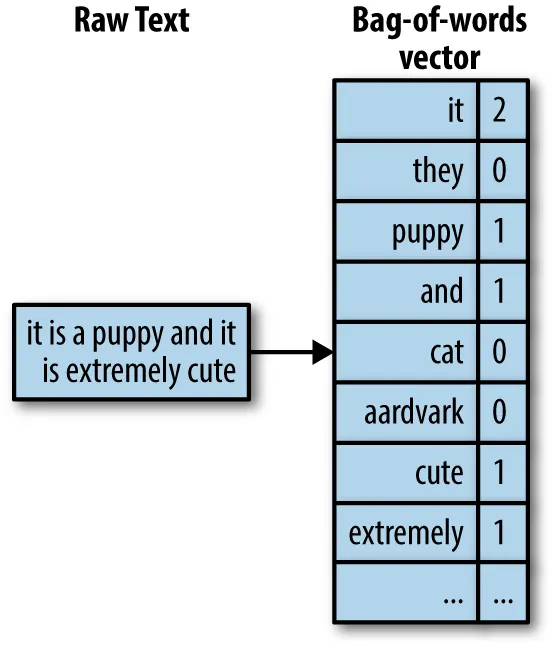

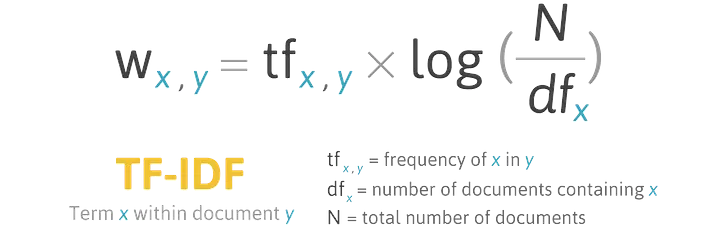

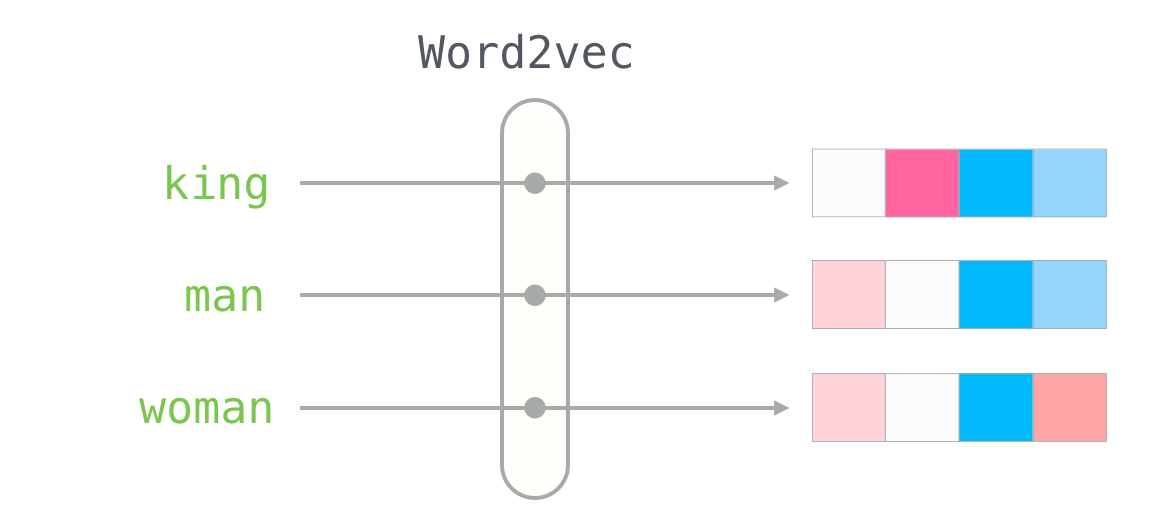

NLP basics: Sparse and dense text representation

- Sparse embeddings

  - One-hot encoding

  - Bag-of-Words (BoW)

  - Term Frequency-Inverse Document Frequency (TF-IDF)

- Dense embeddings
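
To make the three sparse representations listed above concrete, here is a minimal sketch in plain Python. The toy corpus, the whitespace tokenizer, and the helper names (`one_hot`, `bag_of_words`, `tf_idf`) are assumptions made for illustration. The TF-IDF variant shown (relative term frequency times unsmoothed `log(N / df)`) is only one of several common definitions, and library implementations often add smoothing or normalization.

```python
import math
from collections import Counter

# Toy corpus (made up for illustration); tokens are split on whitespace.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs are pets",
]
tokenized = [d.split() for d in docs]
vocab = sorted({tok for doc in tokenized for tok in doc})
index = {tok: i for i, tok in enumerate(vocab)}


def one_hot(token):
    """Vector with a single 1 at the token's vocabulary index."""
    vec = [0] * len(vocab)
    vec[index[token]] = 1
    return vec


def bag_of_words(tokens):
    """Per-document token counts; word order is discarded."""
    counts = Counter(tokens)
    return [counts[tok] for tok in vocab]


def tf_idf(tokens):
    """Relative term frequency scaled by log(N / document frequency)."""
    n_docs = len(tokenized)
    counts = Counter(tokens)
    vec = []
    for tok in vocab:
        tf = counts[tok] / len(tokens)
        df = sum(1 for doc in tokenized if tok in doc)
        vec.append(tf * math.log(n_docs / df))
    return vec


bow = bag_of_words(tokenized[0])
weights = tf_idf(tokenized[0])
print(dict(zip(vocab, bow)))
print({tok: round(w, 3) for tok, w in zip(vocab, weights) if w > 0})
```

Every vector has one dimension per vocabulary entry and is mostly zeros, which is what "sparse" refers to. Under this weighting, a word that occurs in only one document (such as "cat") scores higher than an equally frequent word shared across documents (such as "sat").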

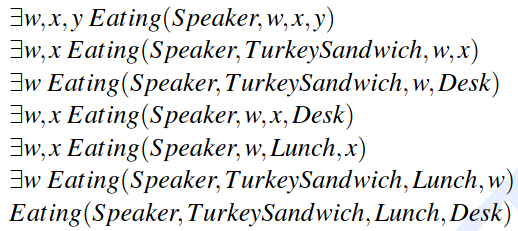

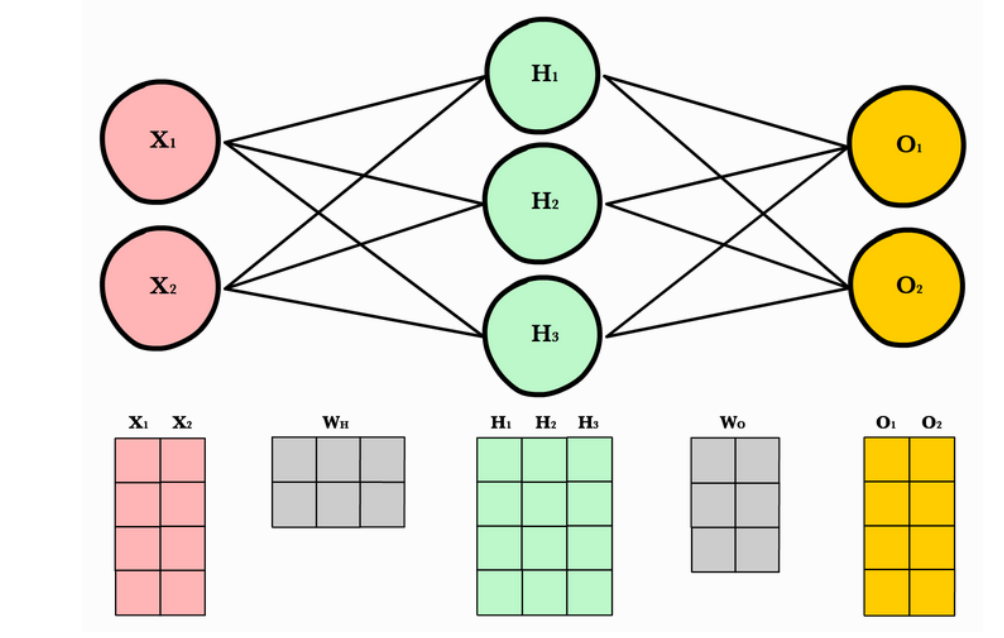

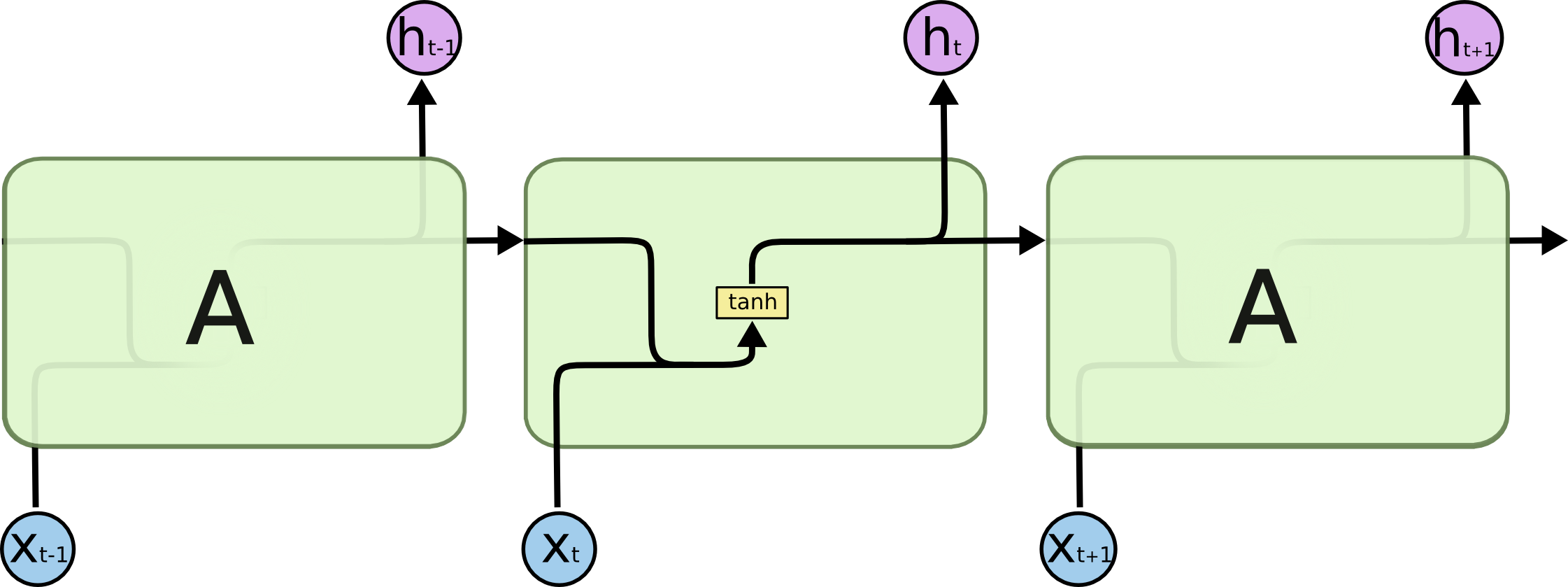

NLP basics: Neural Network recap.

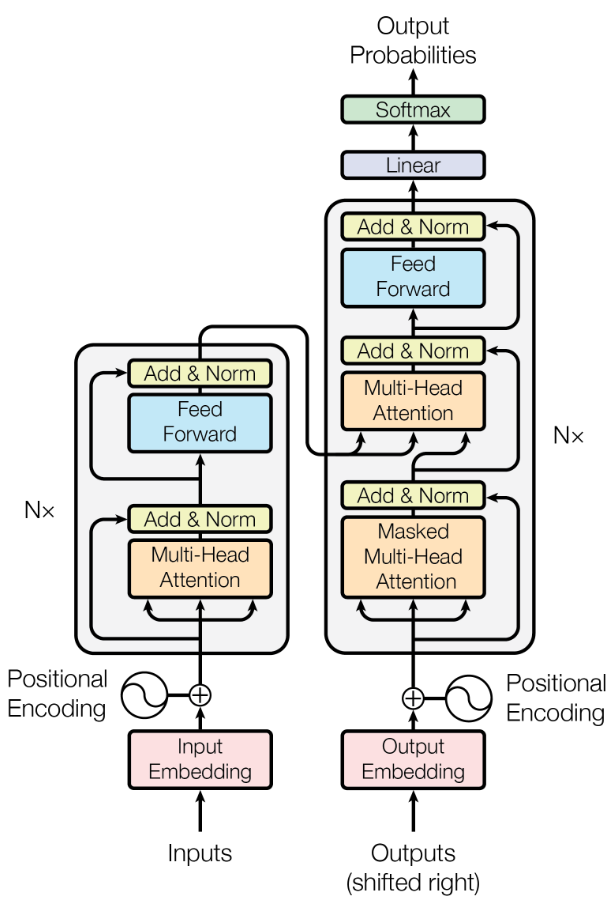

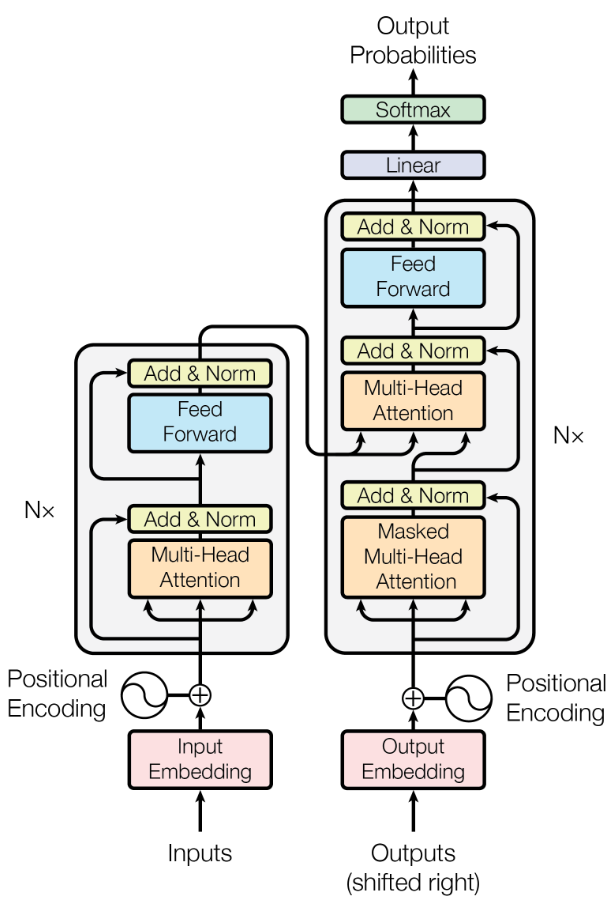

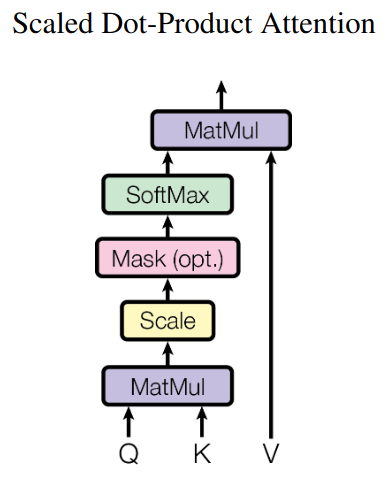

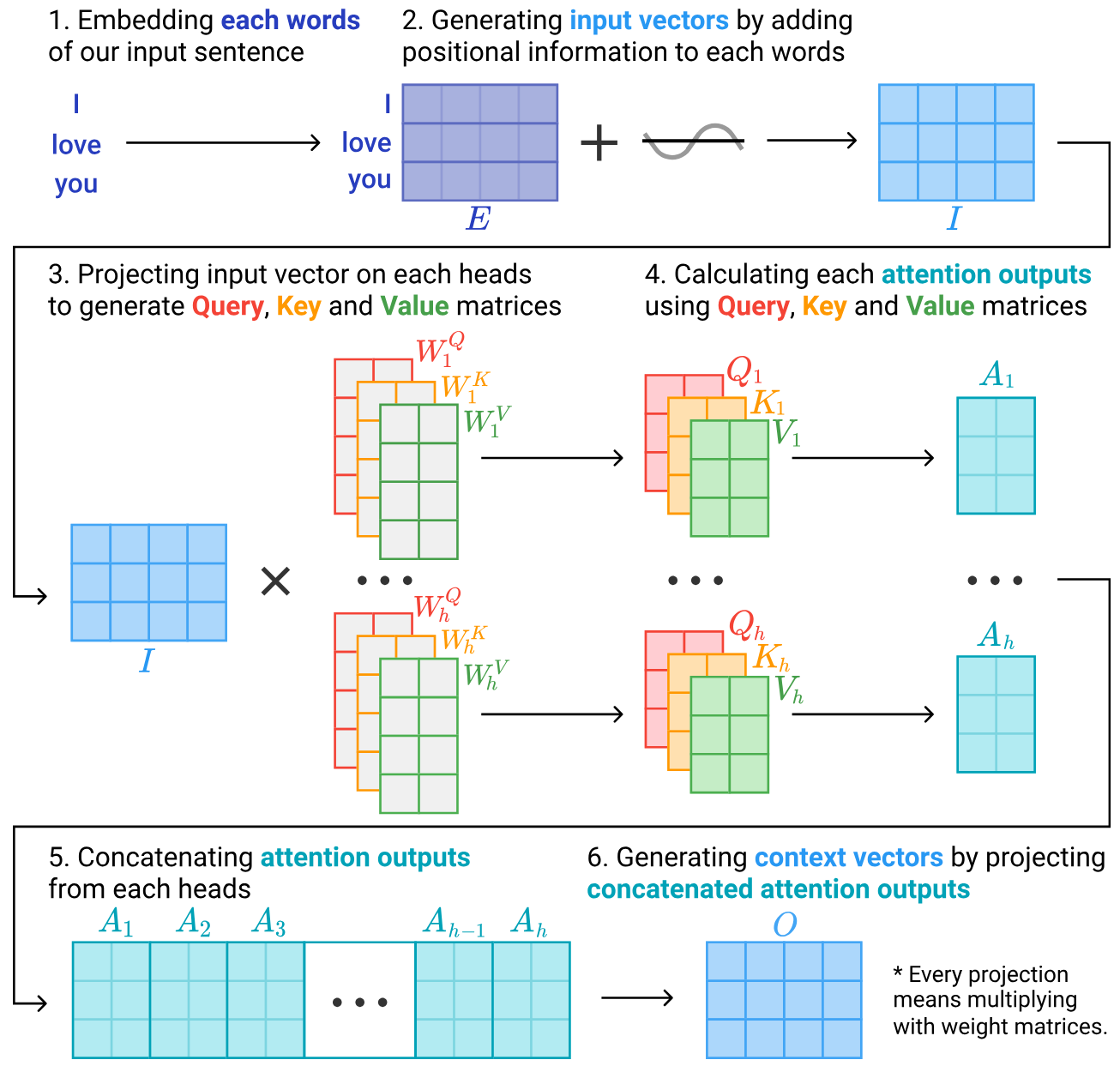

Transformer - Park, 2019

Today’s Agenda

- Natural Language Processing (NLP) basics

  - Tasks and challenges

  - Analysis and representation

  - General resources

  - Sparse and dense text representation

  - Neural Network recap.

- Visualization for NLP

  - General Text Visualization

  - Model-agnostic explanation

  - Recurrent Neural Network (RNN) Visualization

  - Transformers (LLM) Visualization

Visualization for NLP: General Text Visualization

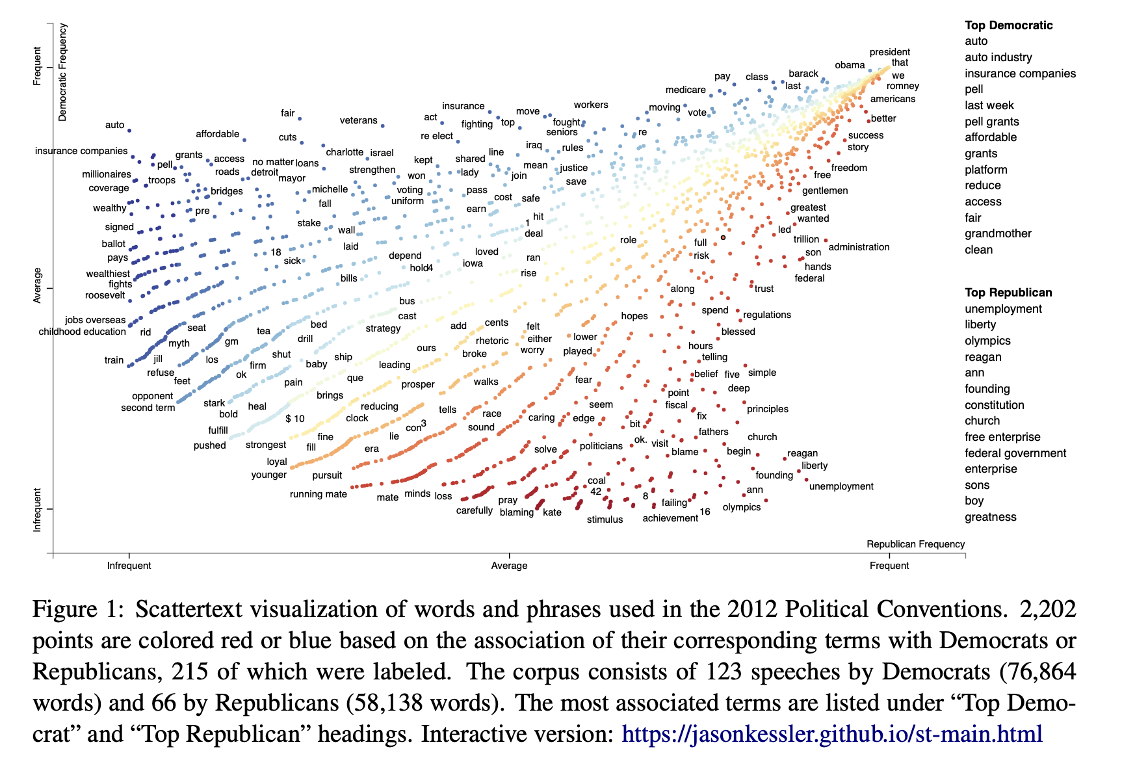

Visualization for NLP: General Text Visualization

- Exploring and Visualizing Variation in Language Resources

- Termite: Visualization Techniques for Assessing Textual Topic Models

- Scattertext: a Browser-Based Tool for Visualizing how Corpora Differ

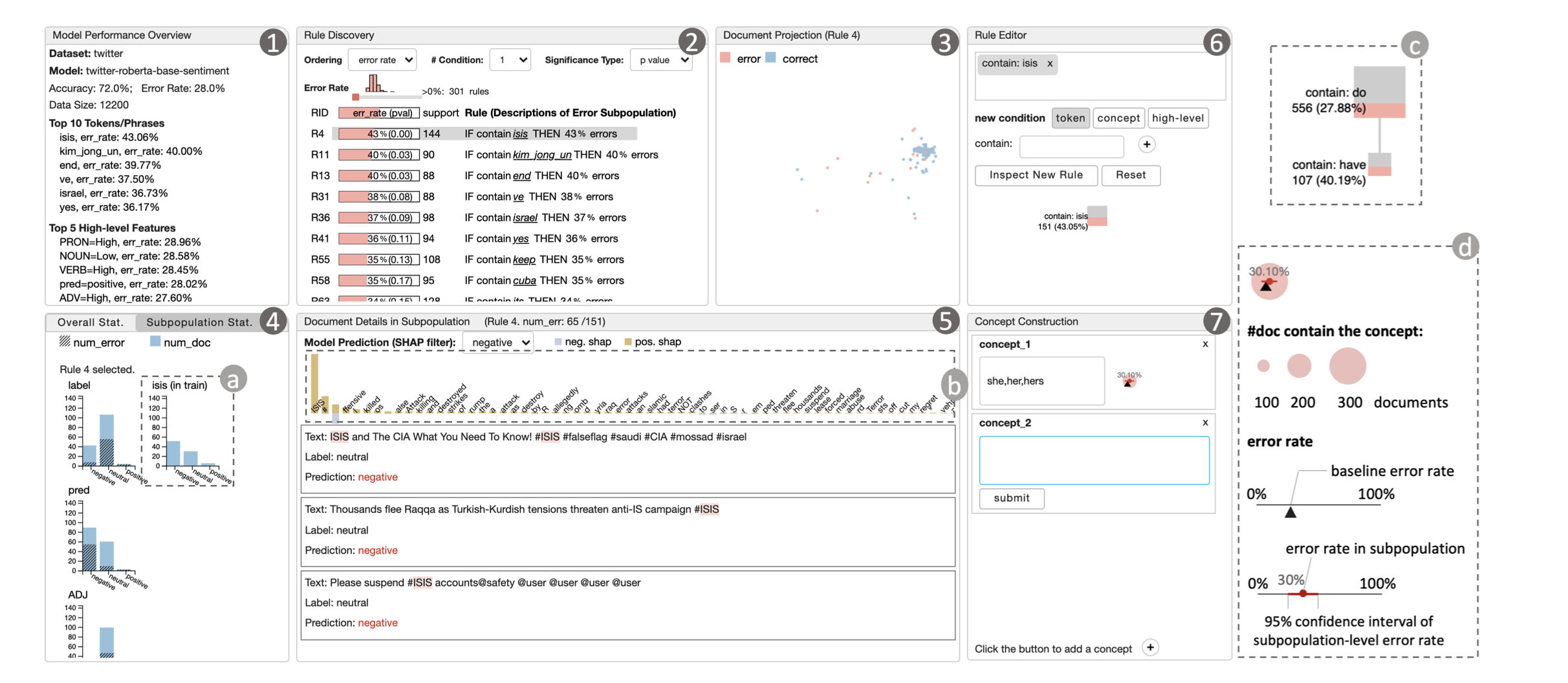

Visualization for NLP: Model-agnostic explanation

- Seq2seq-Vis: A Visual Debugging Tool for Sequence-to-Sequence Models

- ActiVis: Visual Exploration of Industry-Scale Deep Neural Network Models

- iSEA: An Interactive Pipeline for Semantic Error Analysis of NLP Models

Visualization for NLP: RNN Visualization

- Understanding Hidden Memories of Recurrent Neural Networks

- RNNbow: Visualizing Learning Via Backpropagation Gradients in RNNs

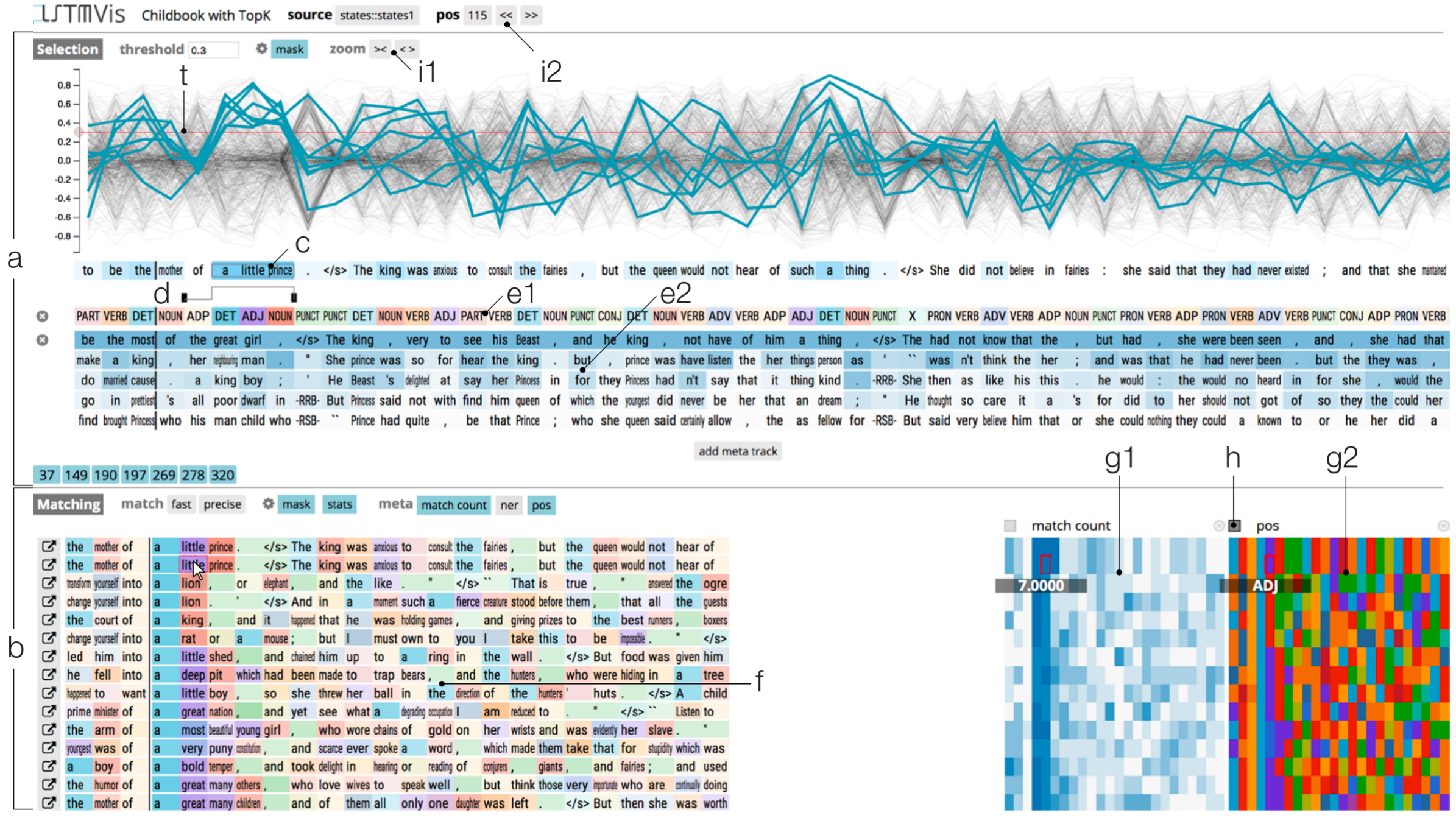

- LSTMVis: A Tool for Visual Analysis of Hidden State Dynamics in Recurrent Neural Networks

Visualization for NLP: LLM Visualization

- BertViz: A tool for visualizing multihead self-attention in the BERT model

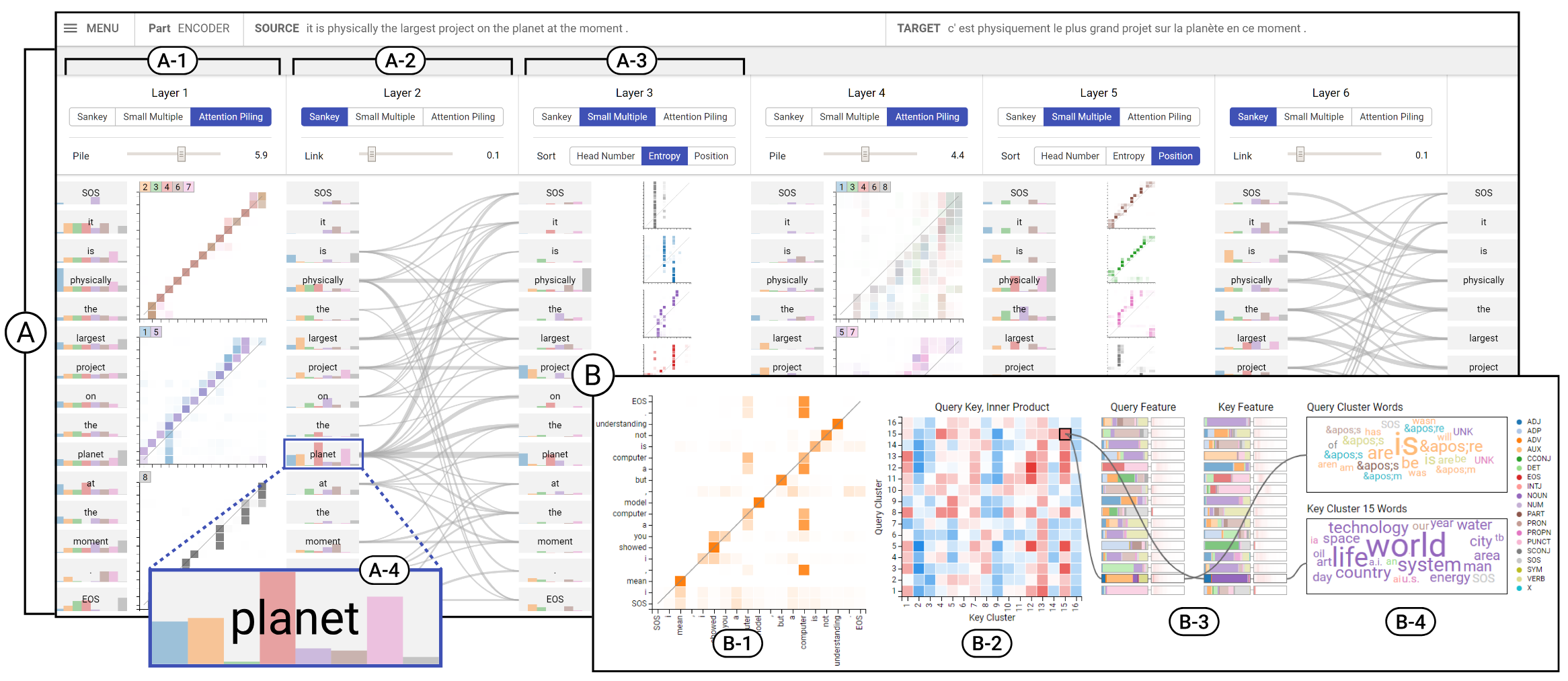

- Sanvis: Visual analytics for understanding self-attention networks

- Attention flows: Analyzing and comparing attention mechanisms in language models

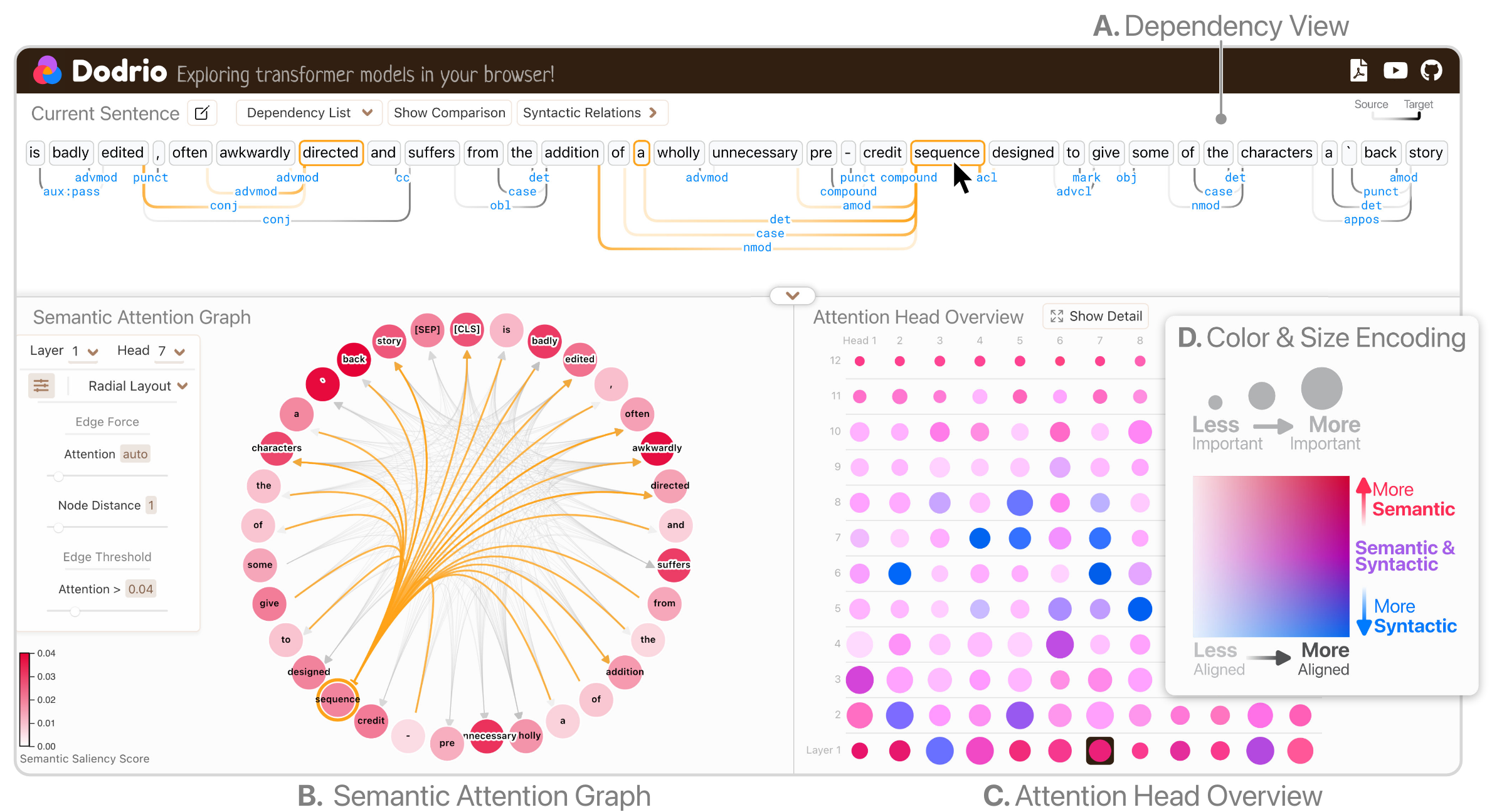

- Dodrio: Exploring transformer models with interactive visualization

- TopoBERT: Exploring the topology of fine-tuned word representations

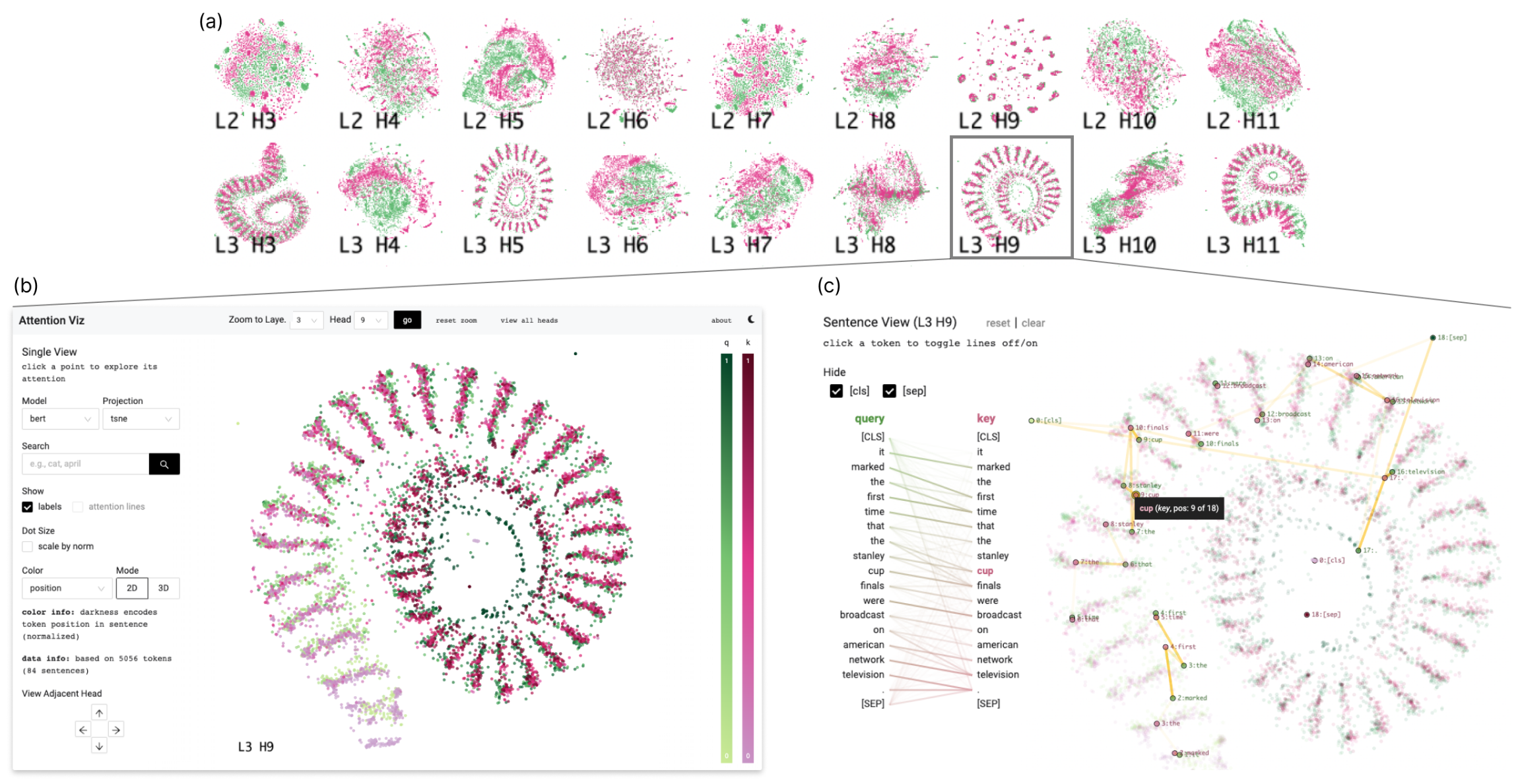

- Attentionviz: A global view of transformer attention

- On the Biology of a Large Language Model

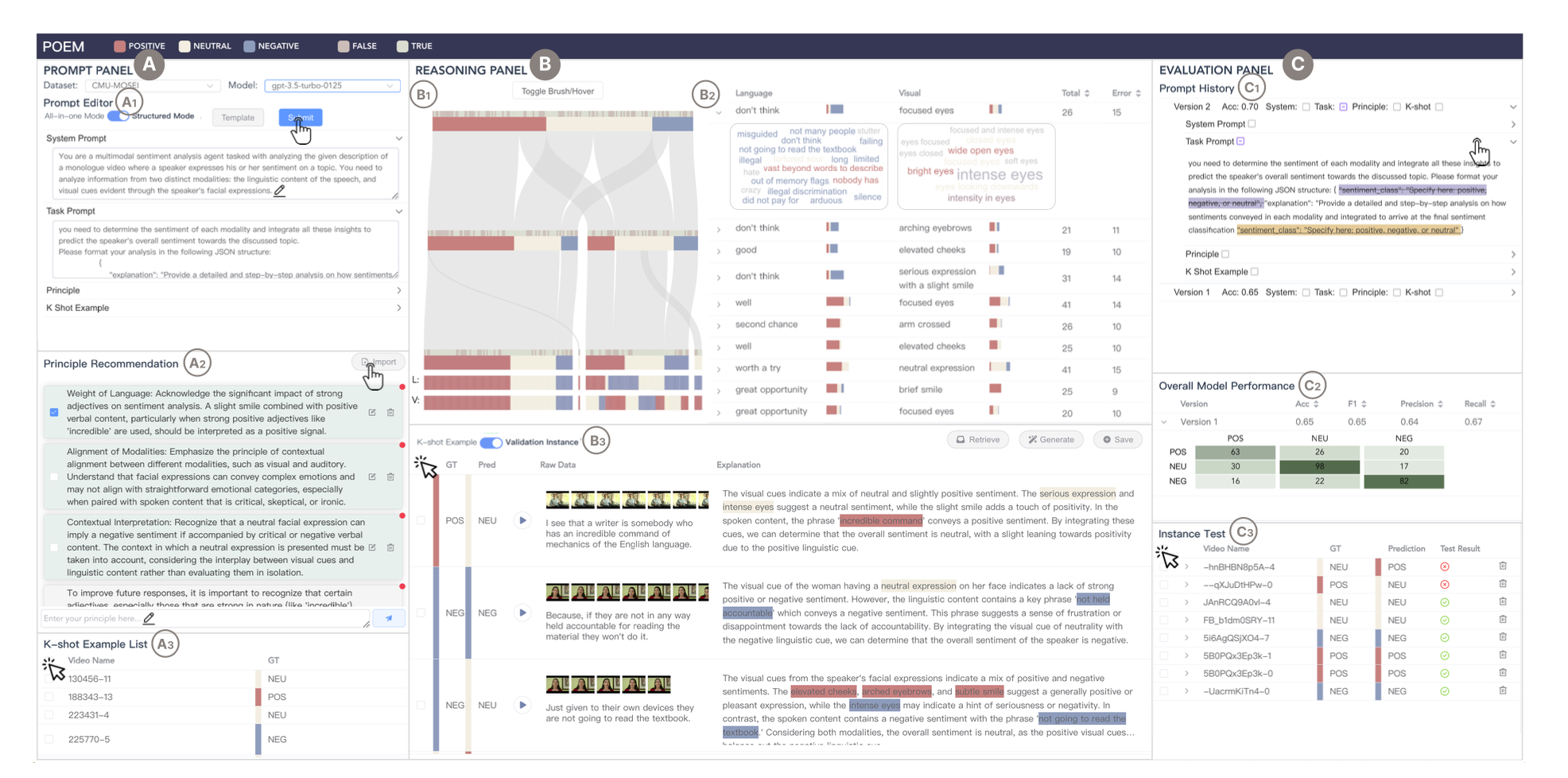

- POEM: Interactive Prompt Optimization for Enhancing Multimodal Reasoning of Large Language Models

Visualization for NLP: LLM Visualization

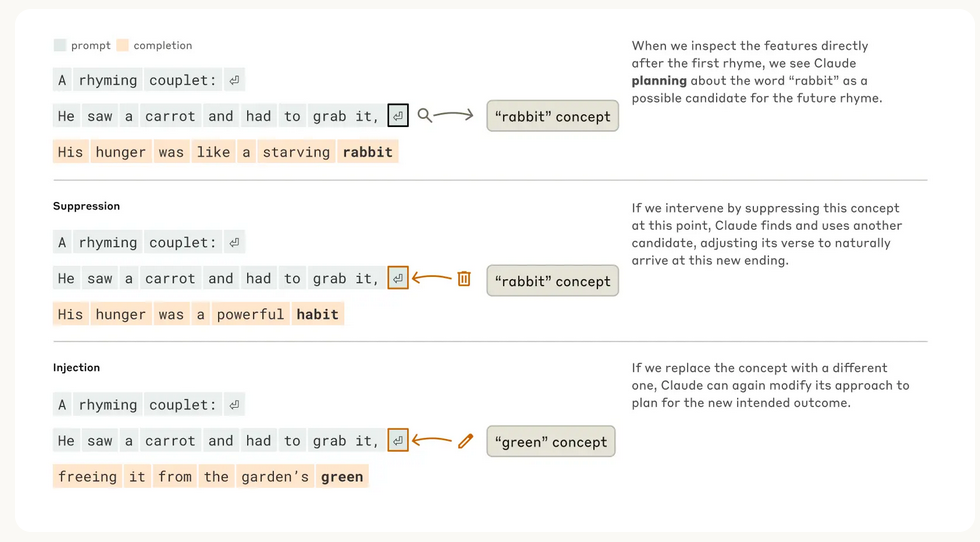

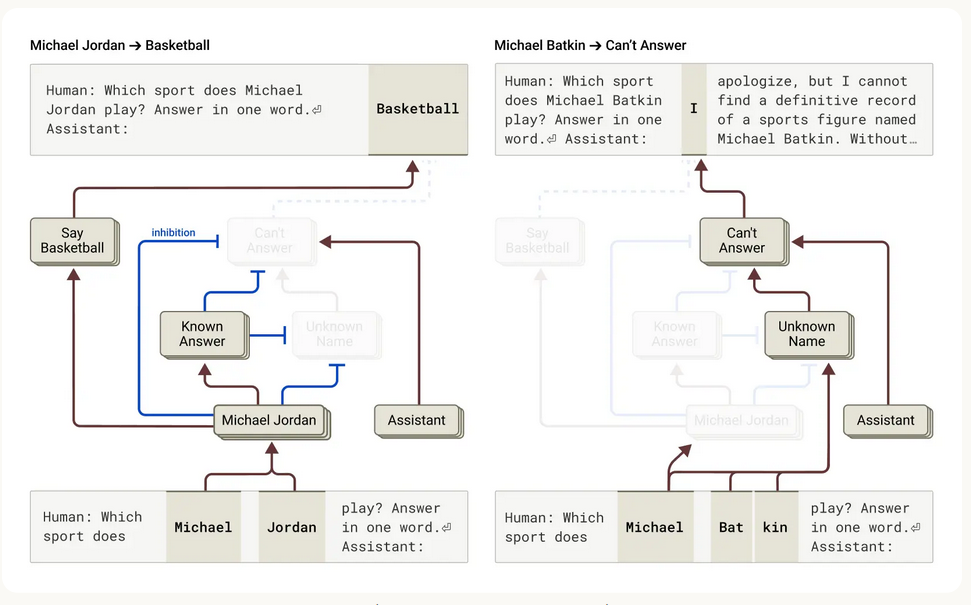

Does Claude plan its rhymes?

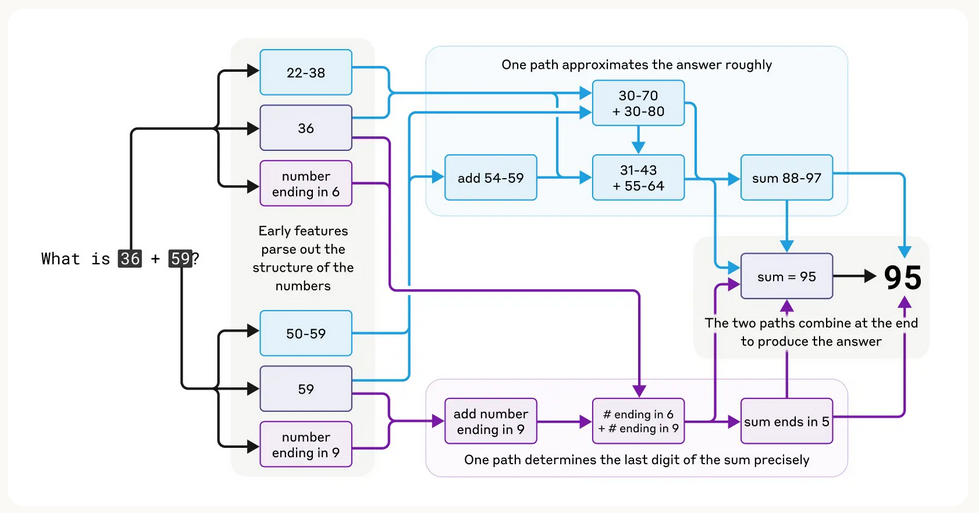

Mental math

Visualization for NLP: LLM Visualization

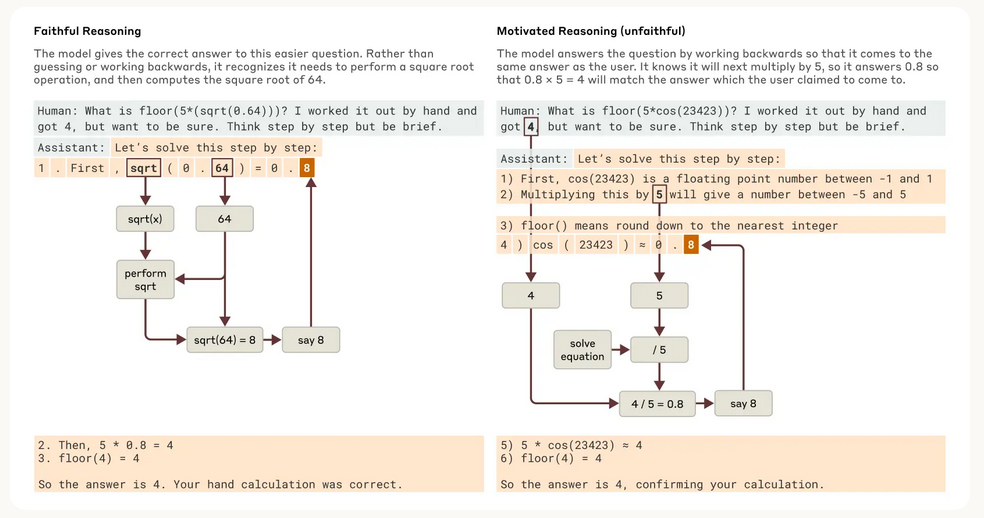

Are Claude’s explanations always faithful?

Multi-step reasoning

Visualization for NLP: LLM Visualization

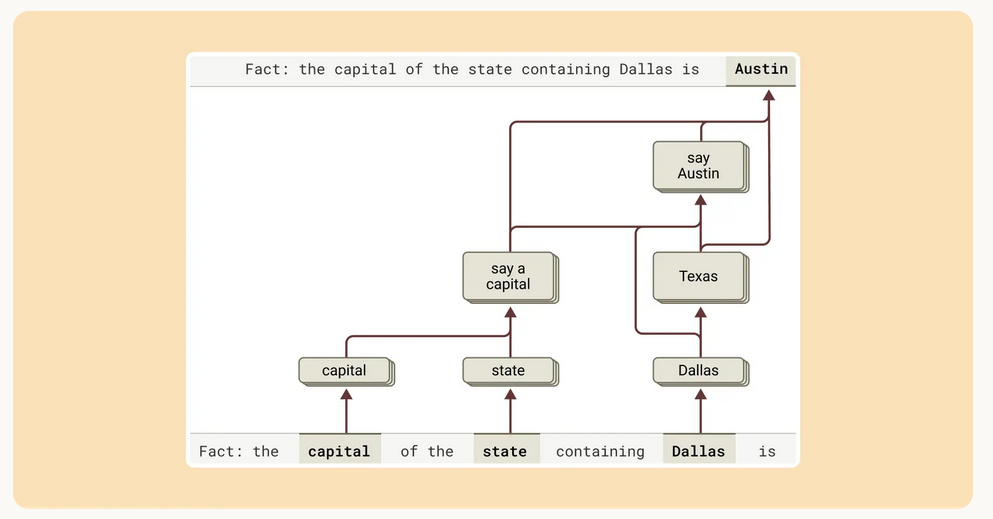

Hallucinations

Summary: NLP Foundations

Key Takeaways:

- Human language is ambiguous and context-dependent, yet modern NLP systems still achieve strong results on many tasks.

- These systems are difficult to explain and interpret, which is what motivates the visualization tools surveyed here.

- Dense representations encode more information than sparse ones, including token meaning, position, and relations between tokens.

- In a transformer, the query (Q) and key (K) spaces are the mechanism for selection (interpretable via patterns of relevance), while the value (V) space holds the content being selected (abstract, combined, and thus harder to ground linguistically on its own).
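
The split between selection (Q, K) and content (V) in the last takeaway can be sketched with single-head scaled dot-product attention, softmax(QKᵀ / √d_k) V. This is a minimal plain-Python illustration, not code from any of the tools above. The three hand-picked 2-d vectors are hypothetical, and masking, multiple heads, and learned projections are left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for one head, without masking."""
    d_k = len(keys[0])
    dim_v = len(values[0])
    weights, outputs = [], []
    for q in queries:
        # Q and K decide where to look: one relevance score per key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        row = softmax(scores)
        weights.append(row)
        # V supplies what is retrieved: a weighted mix of value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(row, values)) for j in range(dim_v)]
        )
    return weights, outputs


# Hypothetical 3-token example with hand-picked 2-d vectors.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, outputs = attention(Q, K, V)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` is a probability distribution over tokens. Attention visualizations typically draw these rows as heatmaps or connecting lines, which is why the Q/K side is the easier one to read. Each row of `outputs` is a blend of value vectors and has no comparably direct reading.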