```python
from sklearn.datasets import fetch_california_housing
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.inspection import PartialDependenceDisplay
import matplotlib.pyplot as plt

# Load California housing dataset
# Features: median income, house age, average rooms, etc.
housing = fetch_california_housing()
X, y = housing.data, housing.target

# Train a gradient boosting regressor
model = GradientBoostingRegressor(
    n_estimators=100,
    max_depth=4,
    learning_rate=0.1,
    random_state=0,
).fit(X, y)

# Create PDPs for: MedInc (0), HouseAge (1), and their interaction
# MedInc = median income, HouseAge = median house age
features = [0, 1, (0, 1)]
display = PartialDependenceDisplay.from_estimator(
    model, X, features, feature_names=housing.feature_names
)
plt.show()
```

Black-box Model Interpretation

CS-GY 9223 - Fall 2025

NYU Tandon School of Engineering

2025-10-06

Black Box Model Assessment

Agenda

Goal: Study model-agnostic interpretability methods, which can be used to explain any type of ML model.

Partial Dependence Plot (PDP)

Local Interpretable Model-agnostic Explanations (LIME)

SHAP (SHapley Additive exPlanations)

Comparative Analysis and Trade-offs

Acknowledgments:

Materials adapted from:

- Molnar, C. (2024). Interpretable Machine Learning

- Molnar, C. (2024). Interpreting Machine Learning Models With SHAP

Bike Rentals (Regression)

This dataset contains daily counts of rented bicycles from the bicycle rental company Capital-Bikeshare in Washington D.C., along with weather and seasonal information. The goal is to predict how many bikes will be rented depending on the weather and the day. The data can be downloaded from the UCI Machine Learning Repository.

Here is the list of features used in Molnar’s book:

- Count of bicycles including both casual and registered users. The count is used as the target in the regression task.

- The season, either spring, summer, fall or winter.

- Indicator of whether the day was a holiday.

- The year, either 2011 or 2012.

- Number of days since 01.01.2011 (the first day in the dataset). This feature was introduced to account for the trend over time.

- Indicator of whether the day was a working day or a weekend.

- The weather situation on that day, in one of four categories: (1) clear, few clouds, partly cloudy, cloudy; (2) mist + clouds, mist + broken clouds, mist + few clouds, mist; (3) light snow, light rain + thunderstorm + scattered clouds, light rain + scattered clouds; (4) heavy rain + ice pellets + thunderstorm + mist, snow + mist.

- Temperature in degrees Celsius.

- Relative humidity in percent (0 to 100).

- Wind speed in km per hour.

Molnar, C. (2022). Interpretable Machine Learning. 2nd Edition.

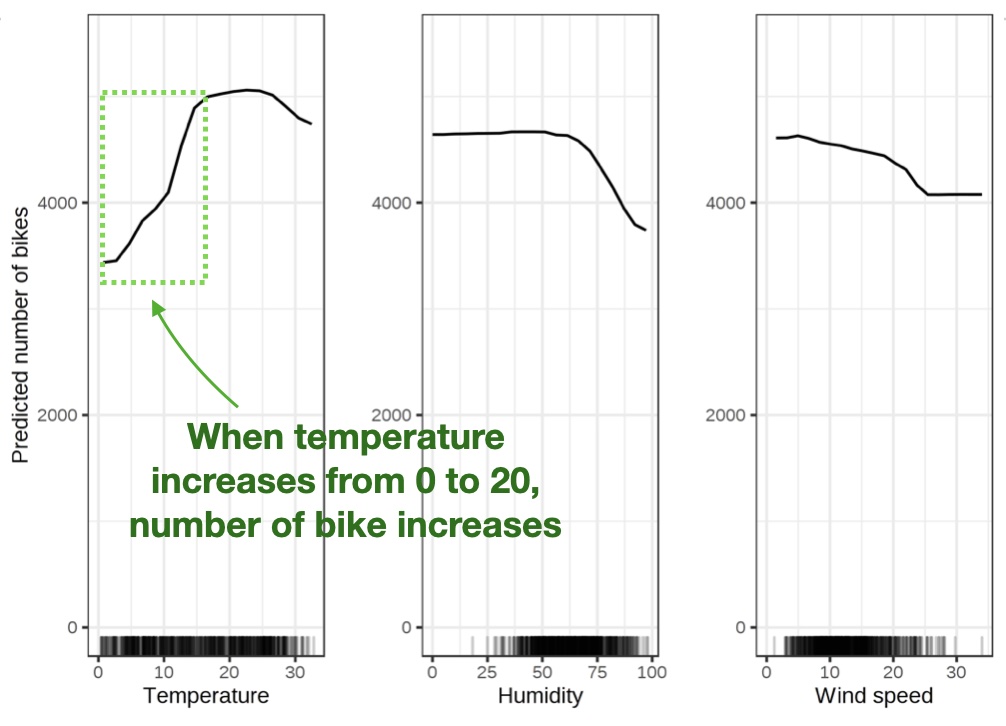

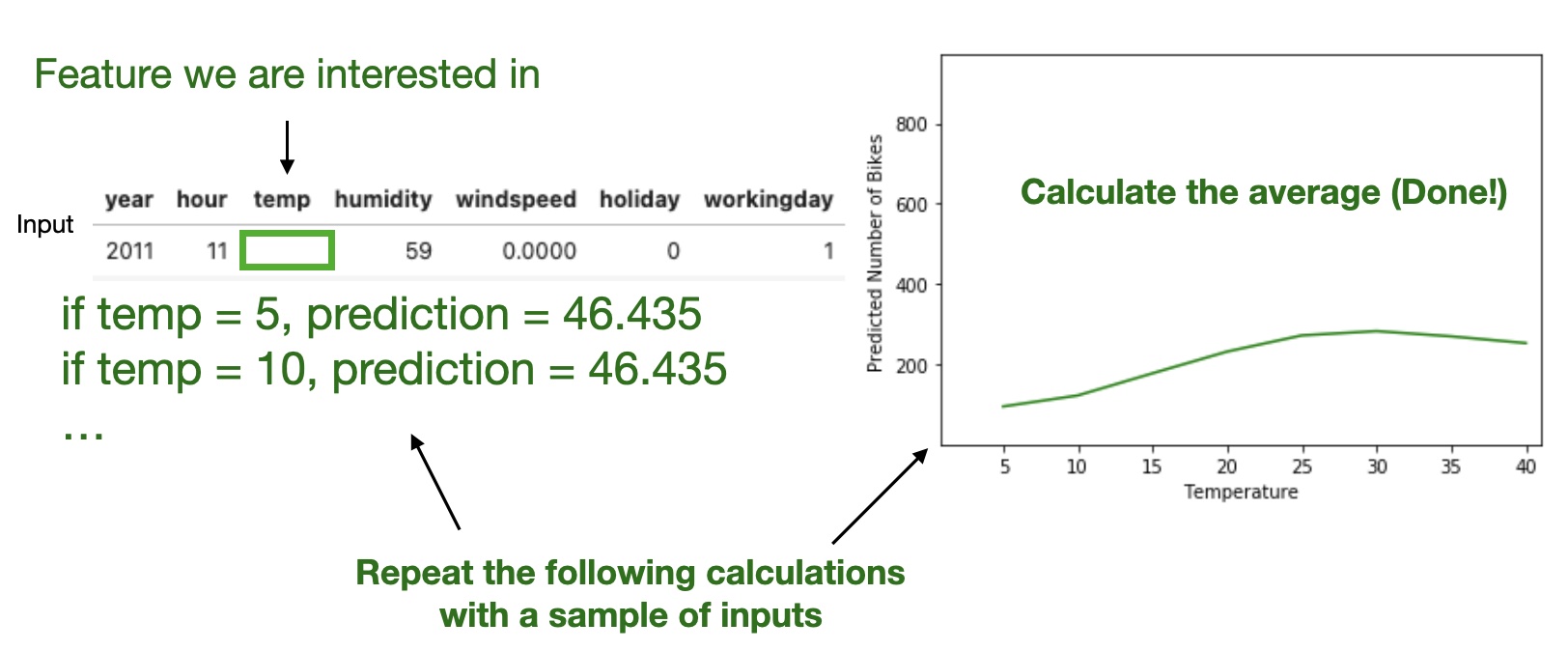

Partial Dependence Plot (PDP)

Shows the marginal effect one or two features have on the predicted outcome of a machine learning model (J. H. Friedman 2001).

1D PDP: Line plot showing effect of single feature

2D PDP (Interaction Plots):

For two features, PDP generates a heatmap showing interactions.

🟦🟦🟨🟧🟧

🟦🟦🟨🟧🟧

🟩🟩🟨🟨🟨

🟩🟩🟨🟨🟨

Complex contours = strong feature interaction

Friedman, J. H. (2001). Greedy function approximation: A gradient boosting machine. Annals of Statistics.

PDP Visualization: Interpreting Feature Effects

1. Monotonic/Linear

↗

↗

↗

Straight diagonal line

↑ Feature → ↑ Prediction

Feature has consistent positive (or negative) effect

2. Non-linear/Sweet Spot

╱‾╲

╱ ╲

╱ ╲

Curve peaks and drops

Optimal range exists

(like the temperature example)

3. Flat Line

━━━━━━━

Horizontal line

No marginal effect on average

Feature appears globally unimportant (caution: opposing effects in different subgroups can cancel out in the average)

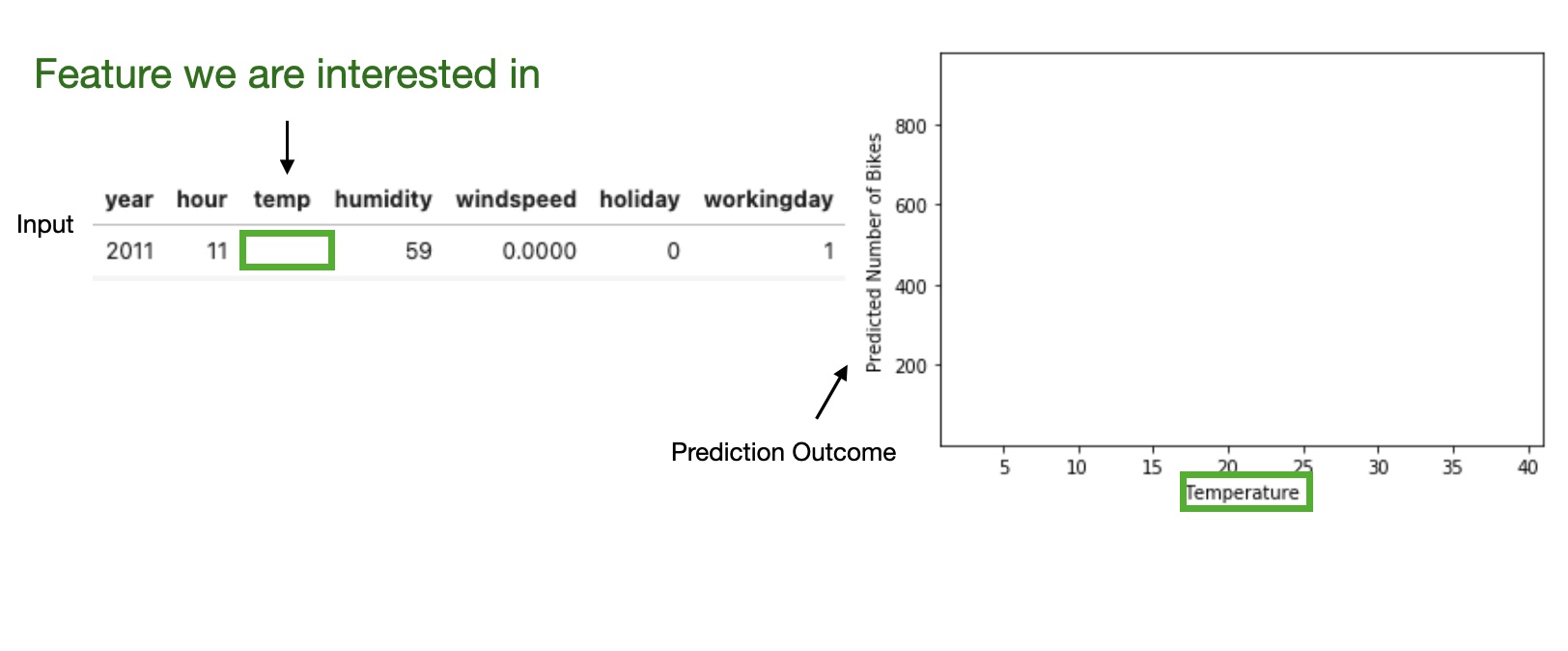

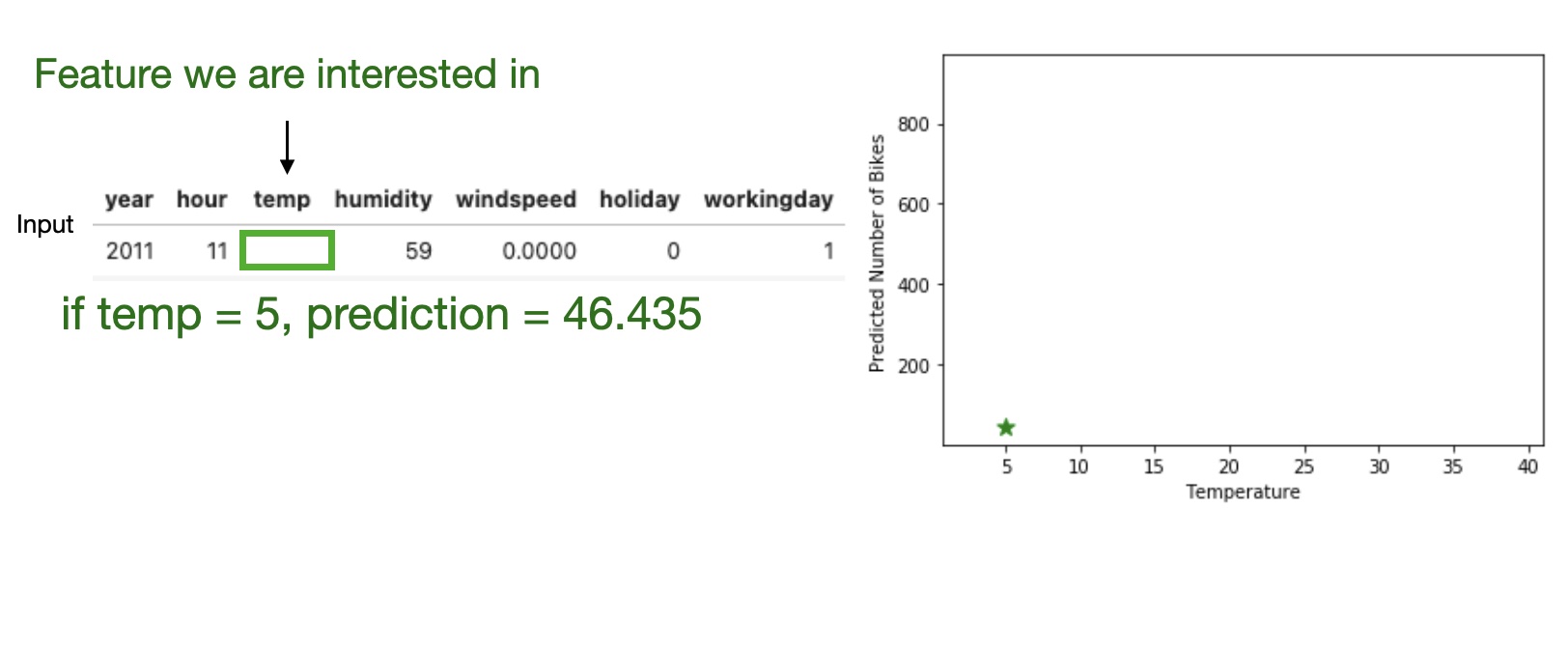

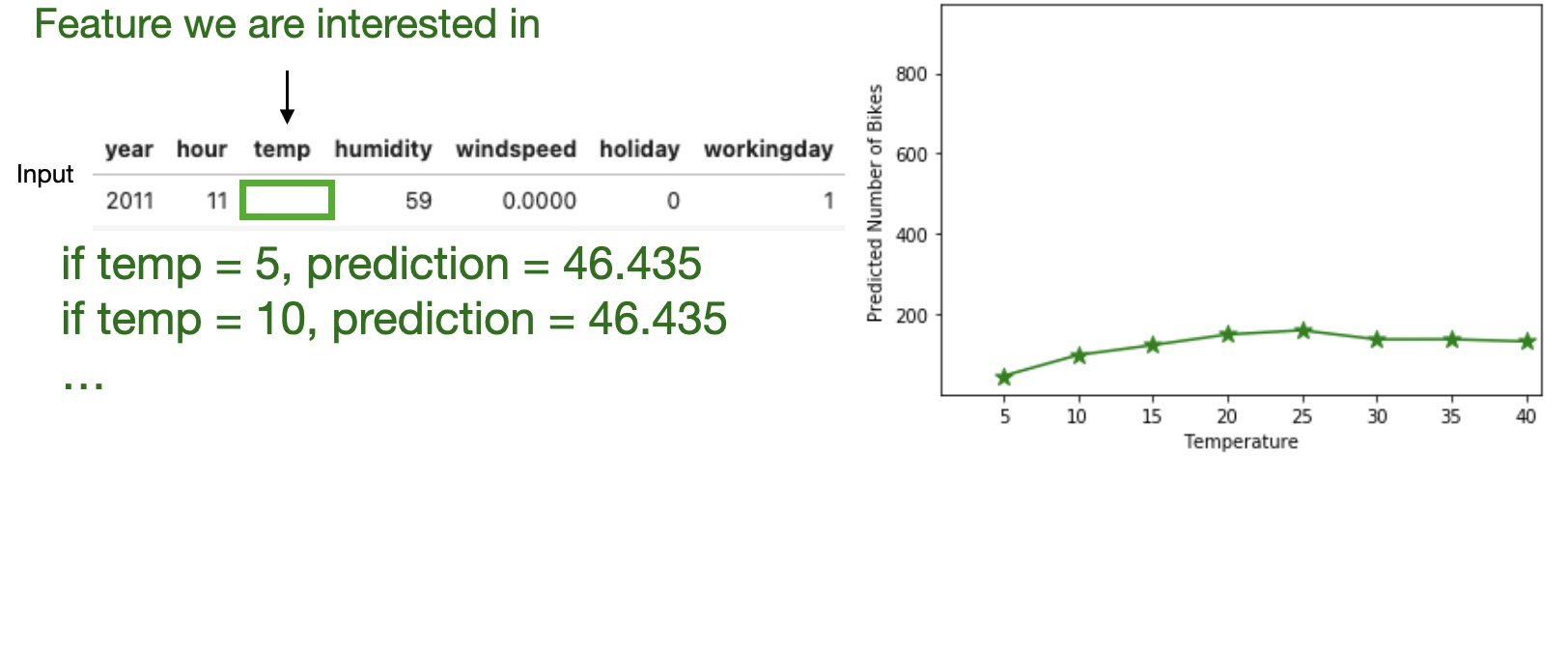

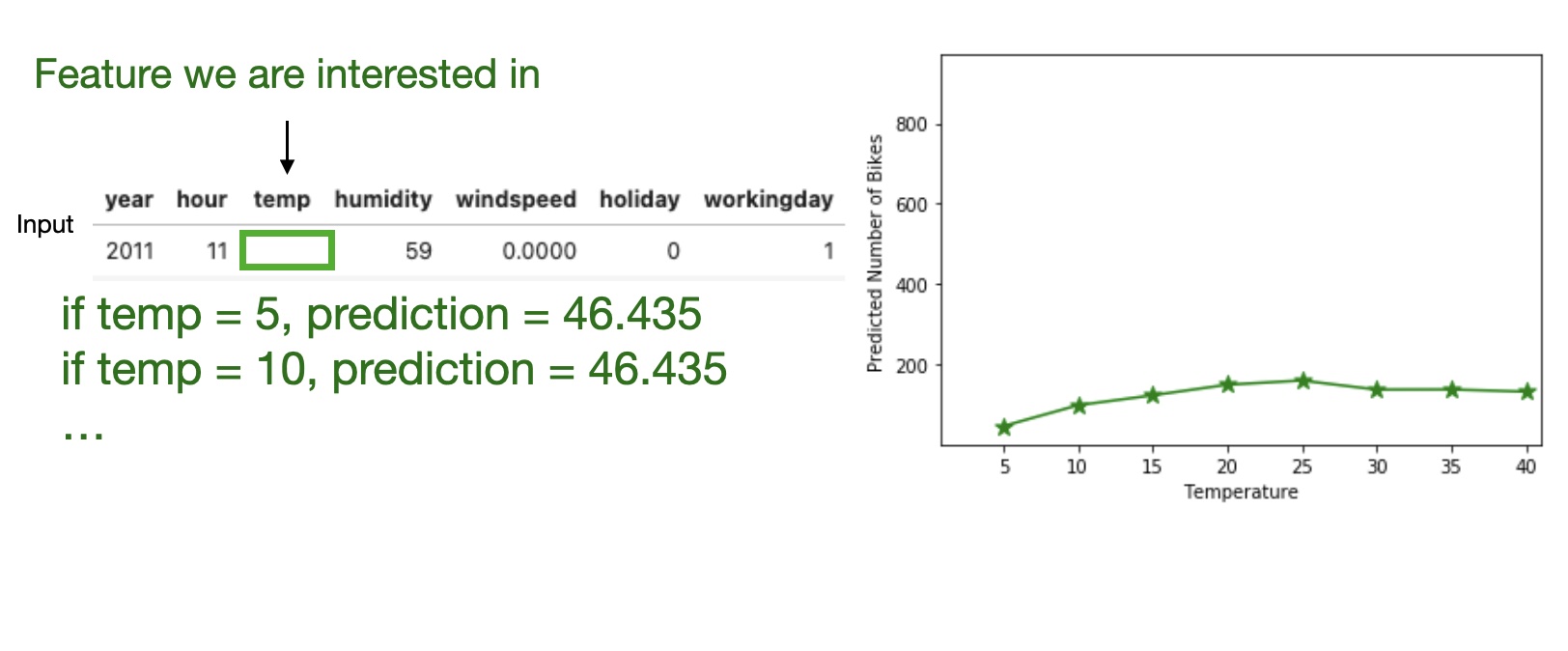

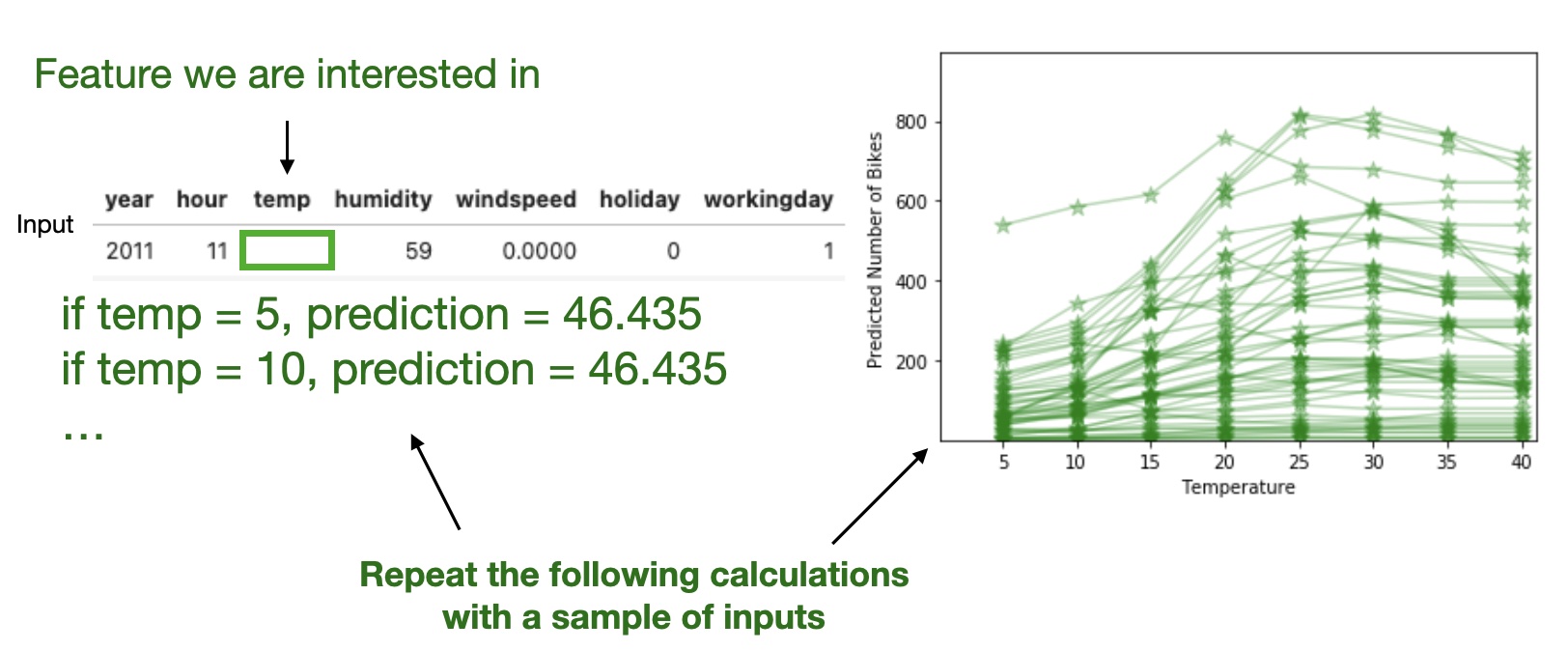

Partial Dependence Plot (PDP)

High-level idea: marginalize the model output over the distribution of all other features, which isolates the relationship between the feature of interest and the predicted outcome.

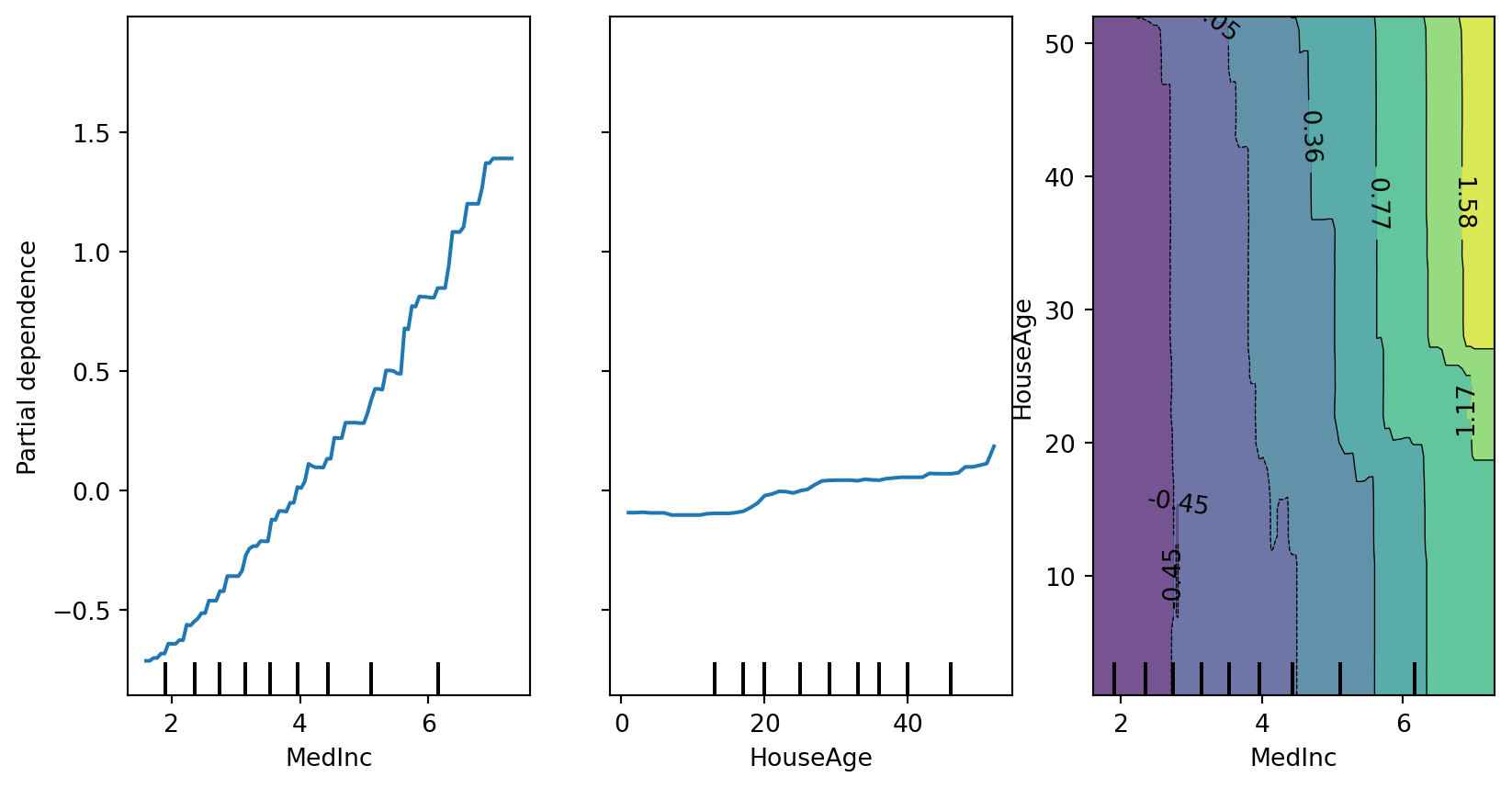
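The averaging step can be made concrete with a brute-force estimate. For each grid value, set the feature of interest to that value in every row, query the model, and average the predictions. Below is a minimal NumPy sketch. It assumes a toy function `black_box` (hypothetical) in place of a trained model, and `partial_dependence_1d` is an illustrative name, not a library function:

```python
import numpy as np

def partial_dependence_1d(predict, X, feature, grid):
    """Brute-force 1D partial dependence: for each grid value, overwrite the
    feature column in every row and average the model's predictions."""
    pd_values = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value                 # fix x_S, keep observed x_C
        pd_values.append(predict(X_mod).mean())   # average over the data rows
    return np.array(pd_values)

def black_box(X):
    # Hypothetical model for illustration: f(x) = 2*x0 + x1**2
    return 2 * X[:, 0] + X[:, 1] ** 2

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
grid = np.linspace(-2, 2, 5)
pdp = partial_dependence_1d(black_box, X, feature=0, grid=grid)
print(pdp)
```

Because this toy model is additive, the PDP for `x0` is the line `2*x0` shifted by the average of `x1**2`. When features interact, that same average is where heterogeneous effects get hidden.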

PDP: Code Example with scikit-learn

Dataset: California Housing - median house value prediction (8 features)

PDP: Output Visualization

Interpretation:

- Left (MedInc): Strong positive monotonic relationship - higher median income → higher house prices (as expected)

- Middle (HouseAge): Non-monotonic effect - newer and very old houses have lower values, middle-aged houses peak

- Right (Interaction): Shows how income and age combine - high income dominates regardless of age (vertical gradient)

Partial Dependence Plot (PDP)

Pros

- Intuitive

- Interpretation is clear

- Easy to implement

Cons

- Assumes independence between the feature(s) of interest and the other features

- Can show at most two features at a time

- Averaging can hide heterogeneous effects

From Global to Local: Bridging PDP to LIME

PDP’s Limitation: Averaging Hides Individual Differences

The Problem:

PDP shows the average effect across all instances.

But what if the effect differs between:

- Cold days vs. warm days?

- New customers vs. loyal customers?

- Low-income vs. high-income applicants?

PDP cannot tell you!

The Solution:

Move from global to local explanations.

Instead of asking:

> “How does temperature affect rentals on average?”

Ask:

> “Why did the model predict this specific value for this particular day?”

→ Enter LIME

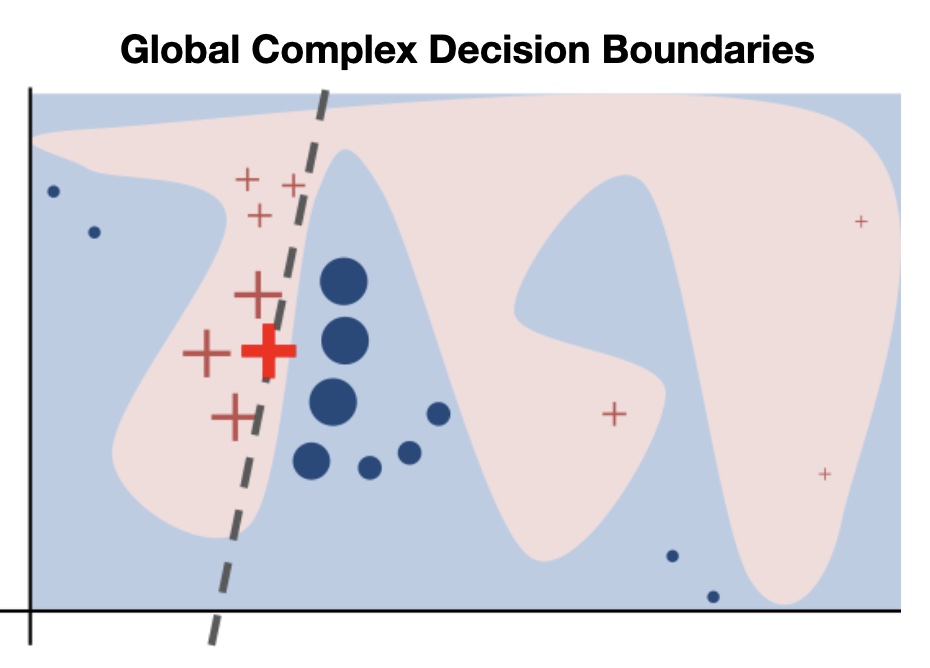

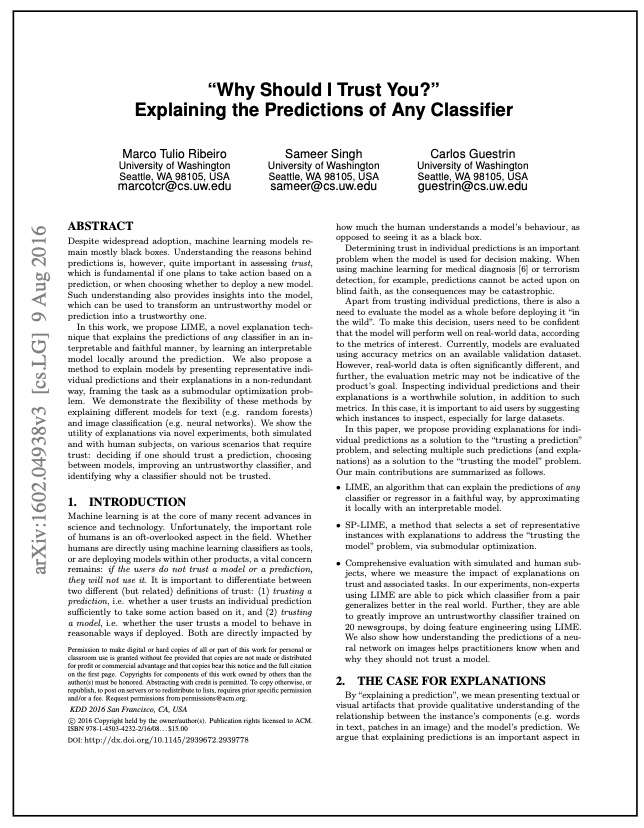

Local Interpretable Model-agnostic Explanations (LIME)

Training local surrogate models to explain individual predictions

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why Should I Trust You?” Explaining the Predictions of Any Classifier. KDD.


The idea is quite intuitive. First, forget about the training data and imagine you only have the black-box model, where you can input data points and get the model's predictions. You can probe the box as often as you want. Your goal is to understand why the machine learning model made a certain prediction. LIME tests what happens to the predictions when you feed variations of your data into the machine learning model.

LIME generates a new dataset consisting of perturbed samples and the corresponding predictions of the black box model.

LIME then trains an interpretable model on this new dataset, weighting each sampled instance by its proximity to the instance of interest. The interpretable model can be any interpretable model, for example Lasso or a decision tree. The learned model should approximate the black-box model's predictions well locally, but it does not have to be a good global approximation. This kind of accuracy is called local fidelity.

Mathematically, local surrogate models with interpretability constraint can be expressed as follows:

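\[\text{explanation}(x) = \arg\min_{g \in G} L(f, g, \pi_x) + \Omega(g)\]

Here \(G\) is the family of interpretable models (e.g., linear models). \(L\) measures how closely \(g\) matches the black-box model \(f\) in the neighborhood defined by the proximity measure \(\pi_x\). \(\Omega(g)\) penalizes the complexity of \(g\) (e.g., the number of features it uses).

Section 9.2 in Molnar’s book.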

Local Interpretable Model-agnostic Explanations (LIME)

Algorithm

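- Pick an input that you want an explanation for.

- Sample neighbors of the selected input (i.e., perturb it) and get the black-box predictions for them.

- Weight each neighbor by its proximity to the selected input.

- Train a weighted linear (interpretable) model on the neighbors and their predictions.

- The weights of the linear model are the explanation.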
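These steps can be sketched from scratch. This is a minimal NumPy illustration, not the `lime` package. It assumes:

- Gaussian perturbations around the instance.
- An exponential proximity kernel, in the square-root form the reference implementation uses.
- A closed-form weighted ridge regression as the interpretable model.

`lime_explain` and `black_box` are hypothetical names:

```python
import numpy as np

def lime_explain(predict, x, n_samples=5000, kernel_width=1.0, alpha=1.0, seed=0):
    """Minimal LIME-style local surrogate for tabular data (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    # 1. Perturb: sample neighbors of x from a Gaussian centred on x
    Z = x + rng.normal(size=(n_samples, x.shape[0]))
    # 2. Query the black box on every perturbed sample
    y = predict(Z)
    # 3. Proximity weights: closer samples count more (exponential kernel)
    d = np.linalg.norm(Z - x, axis=1)
    s = np.sqrt(np.exp(-d**2 / kernel_width**2))
    # 4. Weighted ridge regression in closed form; column 0 is the intercept
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    penalty = alpha * np.eye(A.shape[1])
    penalty[0, 0] = 0.0  # do not shrink the intercept
    coef = np.linalg.solve(A.T @ (s[:, None] * A) + penalty, A.T @ (s * y))
    # 5. The slope coefficients are the local explanation
    return coef[0], coef[1:]

def black_box(Z):
    # Hypothetical nonlinear model: f(z) = sin(z0) + z1**2
    return np.sin(Z[:, 0]) + Z[:, 1] ** 2

x = np.array([0.0, 1.0])
intercept, weights = lime_explain(black_box, x)
print(intercept, weights)
```

Near `x = (0, 1)` the model rises faster in `z1` than in `z0`, and the surrogate weights reflect that ordering. The exact values depend on the kernel width and the perturbation scale, which is one reason LIME explanations need care when tuning.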

Local Interpretable Model-agnostic Explanations (LIME)

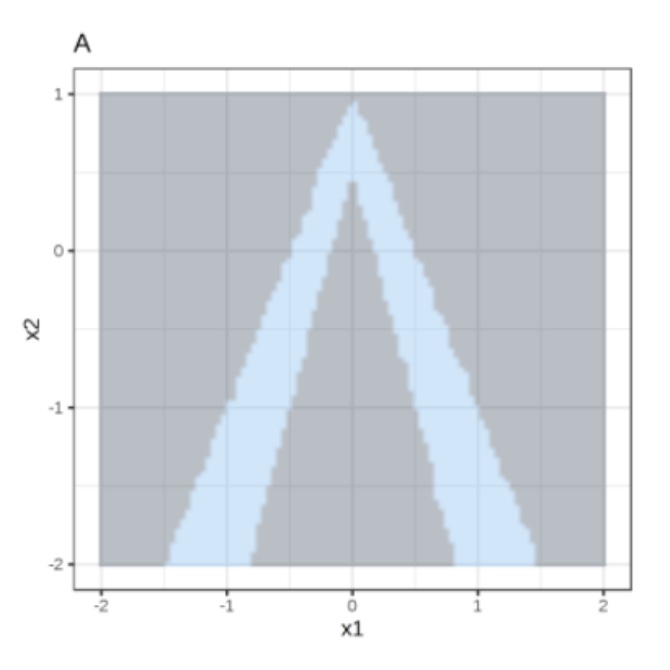

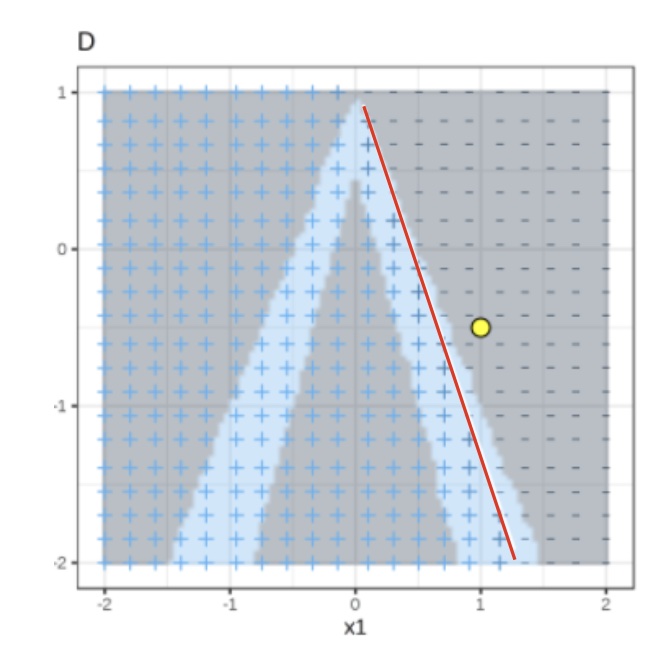

Random forest predictions given features x1 and x2.

Predicted classes: 1 (dark) or 0 (light).

Local Interpretable Model-agnostic Explanations (LIME)

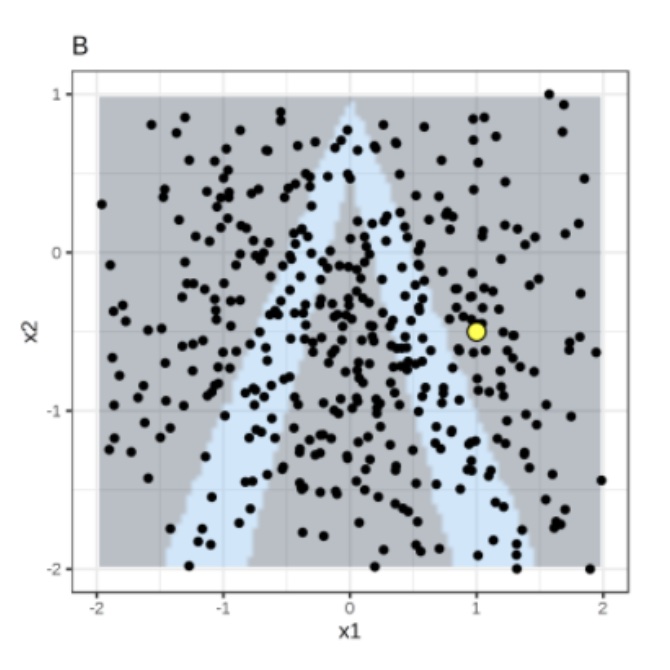

Instance of interest (big yellow dot) and data sampled from a normal distribution (small dots).

Local Interpretable Model-agnostic Explanations (LIME)

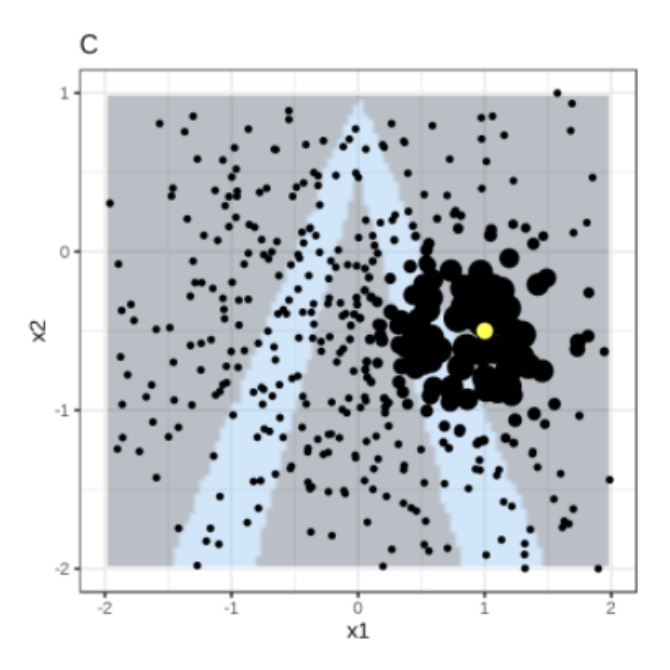

Assign higher weight to points near the instance of interest, e.g., \(\text{weight}(p) = \sqrt{\exp\left(-d^2 / w^2\right)}\), where \(d\) is the distance between \(p\) and the instance of interest and \(w\) is the (user-defined) kernel width.

Local Interpretable Model-agnostic Explanations (LIME)

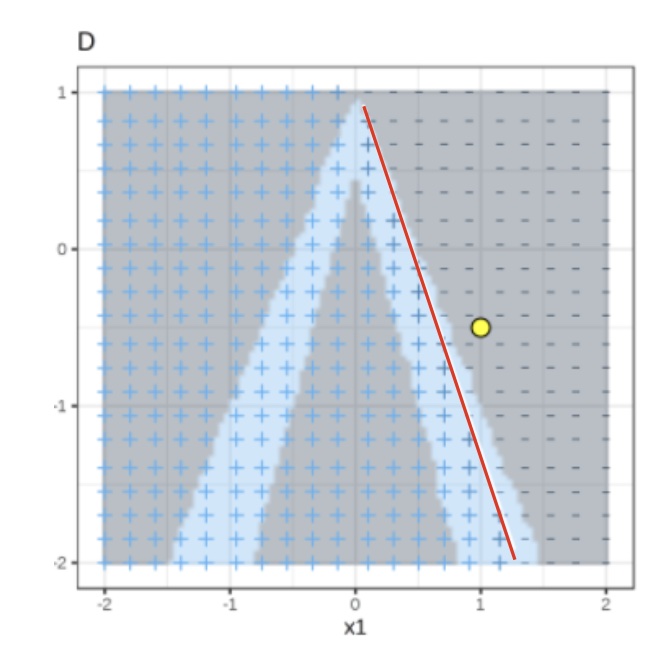

Use both the samples and sample weights to train a linear classifier.

Signs of the grid show the classifications of the locally learned model from the weighted samples. The red line marks the decision boundary (P(class=1) = 0.5).

The official implementation fits a ridge regression (scikit-learn’s `Ridge`) to the black-box outputs (class probabilities for classifiers) as the local linear model.

Training local surrogate models to explain individual predictions

\(s_i\) = sample weight, \(\lambda\) = regularization strength

Ridge Regression

\[\min_{w} \sum_{i=1}^{M} s_i (y_i - \hat{y}_i)^2 + \lambda \sum_{j=0}^{p} w_j^2, \quad \text{where } \hat{y}_i = \sum_{j=0}^{p} w_j x_{ij}\]

\(w_j\) = trained weight that explains the importance of feature \(j\)

The higher \(\lambda\), the more the weights \(w\) are shrunk toward zero. The L2 penalty alone does not set weights exactly to zero. Sparse explanations come from selecting a small number of features (or from an L1/Lasso penalty).

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why Should I Trust You?” Explaining the Predictions of Any Classifier. KDD.
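To see what \(\lambda\) does, the weighted ridge objective can be solved in closed form: \(w = (X^\top S X + \lambda I)^{-1} X^\top S y\) with \(S = \operatorname{diag}(s_i)\). The small NumPy sketch below uses synthetic data (hypothetical values). For simplicity every weight is penalized and there is no intercept. It shows the weights shrinking as \(\lambda\) grows, without any of them becoming exactly zero:

```python
import numpy as np

def weighted_ridge(X, y, s, lam):
    """Closed-form minimizer of sum_i s_i (y_i - x_i . w)^2 + lam * ||w||^2."""
    XtS = X.T * s  # equivalent to X.T @ diag(s)
    return np.linalg.solve(XtS @ X + lam * np.eye(X.shape[1]), XtS @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([3.0, -1.0, 0.2]) + 0.1 * rng.normal(size=200)
s = rng.uniform(0.1, 1.0, size=200)  # sample weights, e.g. from a proximity kernel

for lam in [0.0, 10.0, 1000.0]:
    w = weighted_ridge(X, y, s, lam)
    print(lam, np.round(w, 3), round(float(np.linalg.norm(w)), 3))
```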

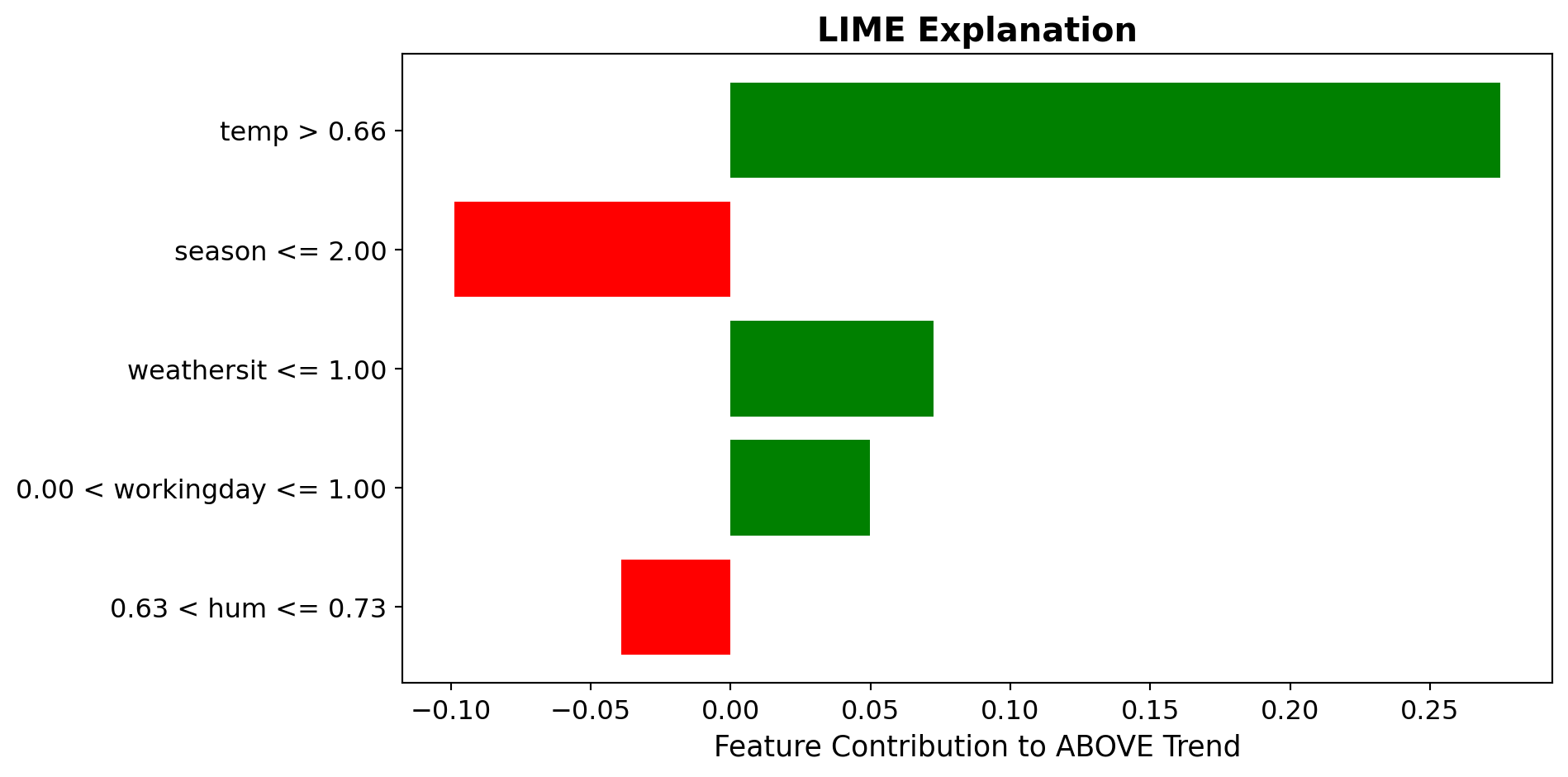

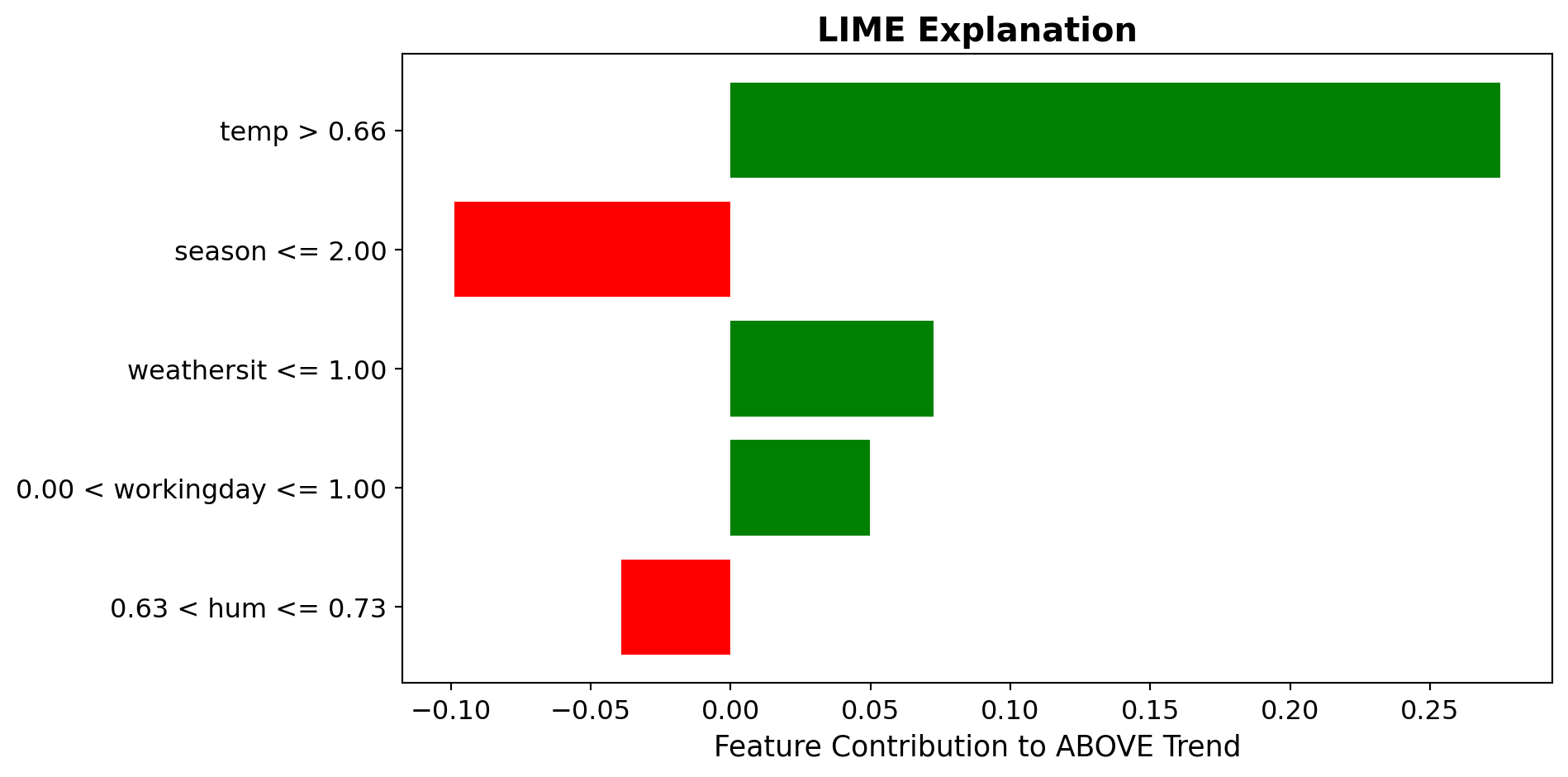

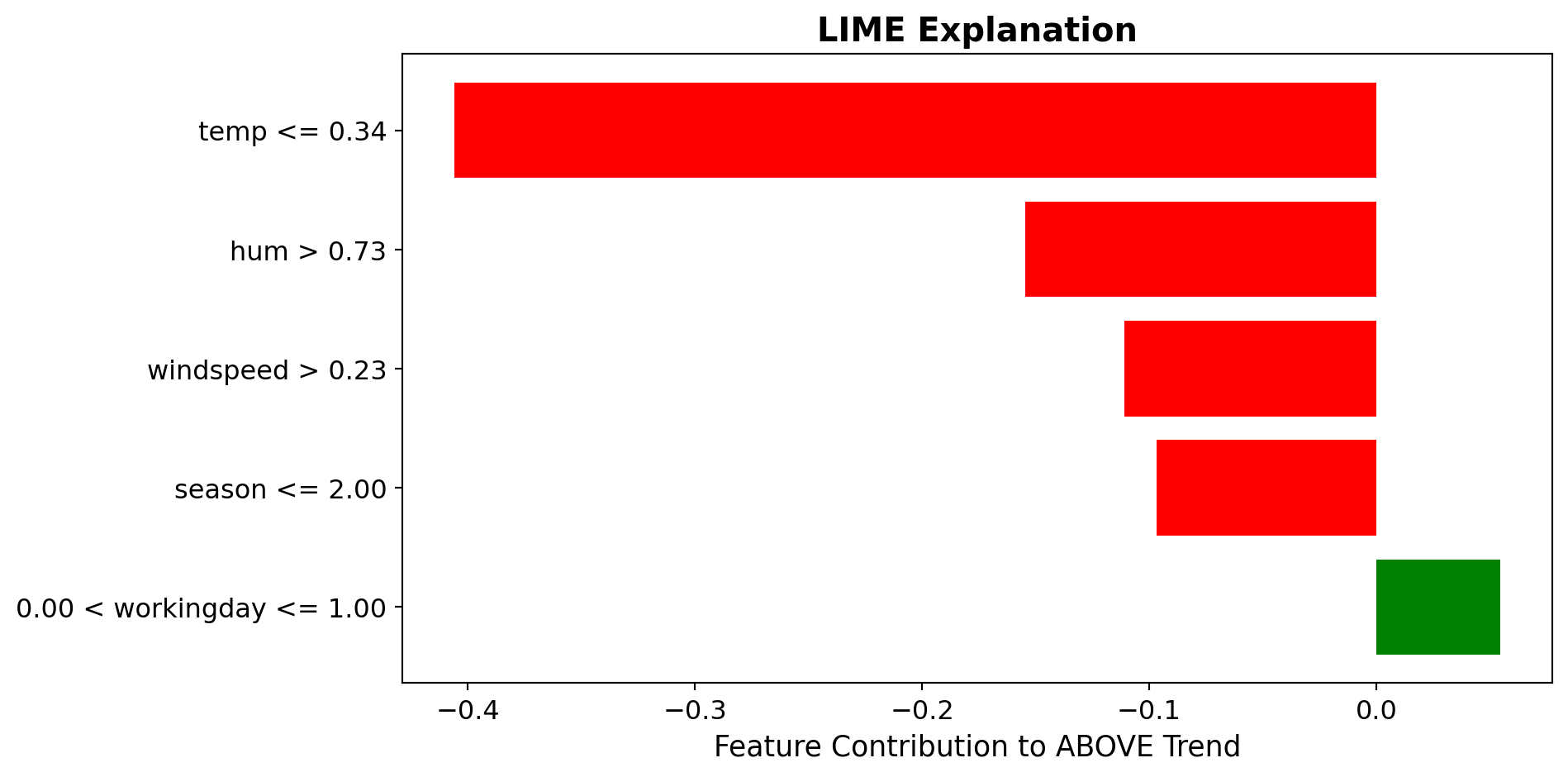

LIME Visualization: Bar Charts for Local Explanations

Primary LIME Output: Sparse Bar Charts

Case 1: High Rental Day Prediction: ABOVE Trend

▬▬▬▬▬▬▬▬▬▬ Temp > 20°C

▬▬▬▬▬▬▬ Windspeed Low

▬▬▬ Holiday = False

✓ Warm temperature strongly supports high rentals

✓ Low wind moderately supports

✗ Non-holiday slightly opposes

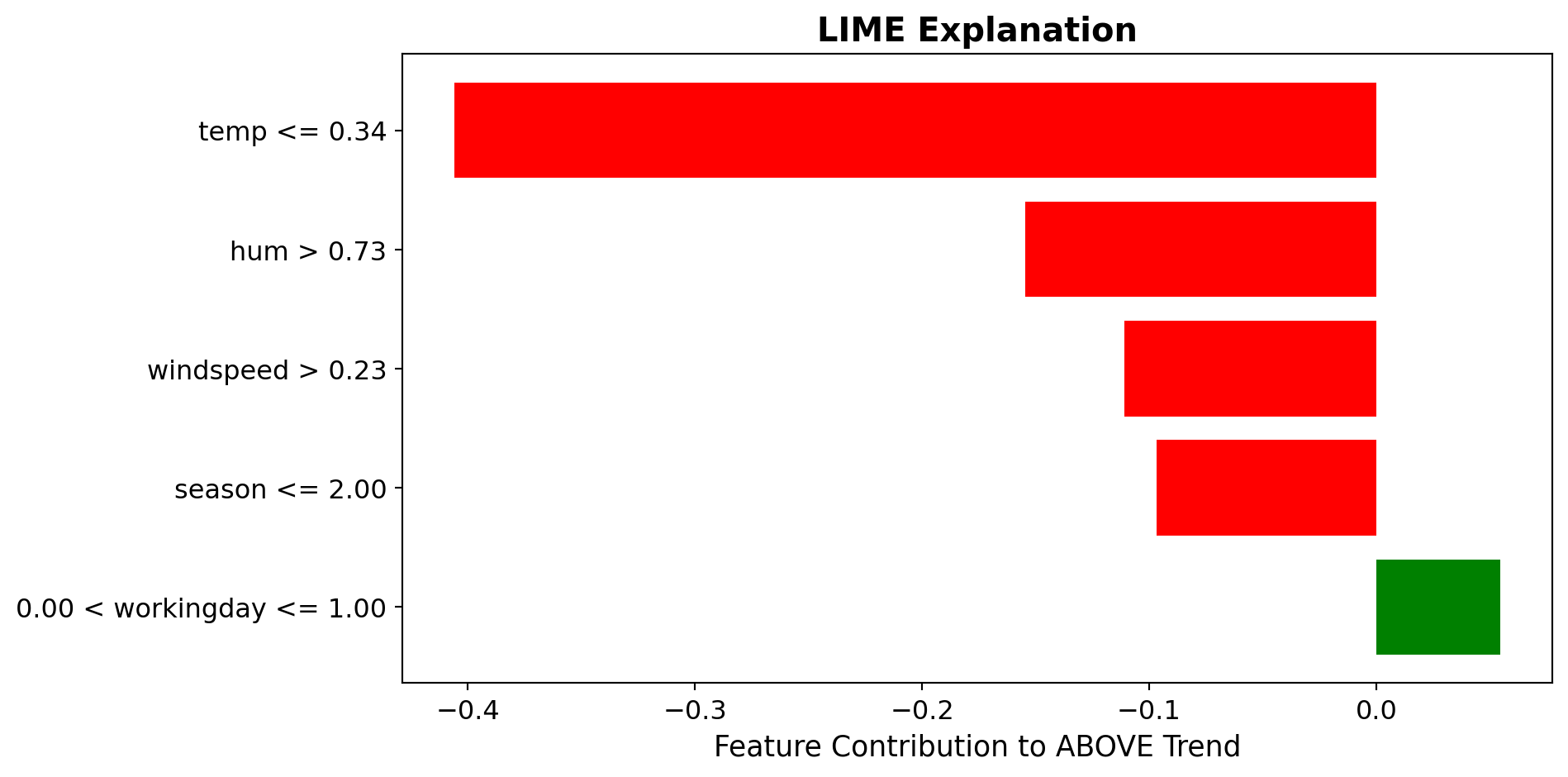

Case 2: Low Rental Day Prediction: BELOW Trend

▬▬▬▬▬▬▬▬▬▬▬▬ Weather: Rain

▬▬▬▬▬▬▬▬ Temp < 5°C

▬▬ Weekday = True

✗ Rain strongly opposes high rentals

✗ Cold temperature opposes

✓ Weekday weakly supports

Local Surrogate (LIME): Bike Rental Example

Task: Predict if bike rentals will be above or below trend

High Rental Day

Prediction: `{python} f"{pred_high[1]:.2f}"` probability ABOVE trend

✓ High temperature strongly supports

✓ Good weather condition supports

Low Rental Day

Prediction: `{python} f"{pred_low[0]:.2f}"` probability BELOW trend

✗ Bad weather strongly opposes

✗ Low temperature opposes

Bike Sharing Dataset from UCI Machine Learning Repository. Fanaee-T, H., & Gama, J. (2014). Event labeling combining ensemble detectors and background knowledge. Progress in Artificial Intelligence, 2(2-3), 113-127.

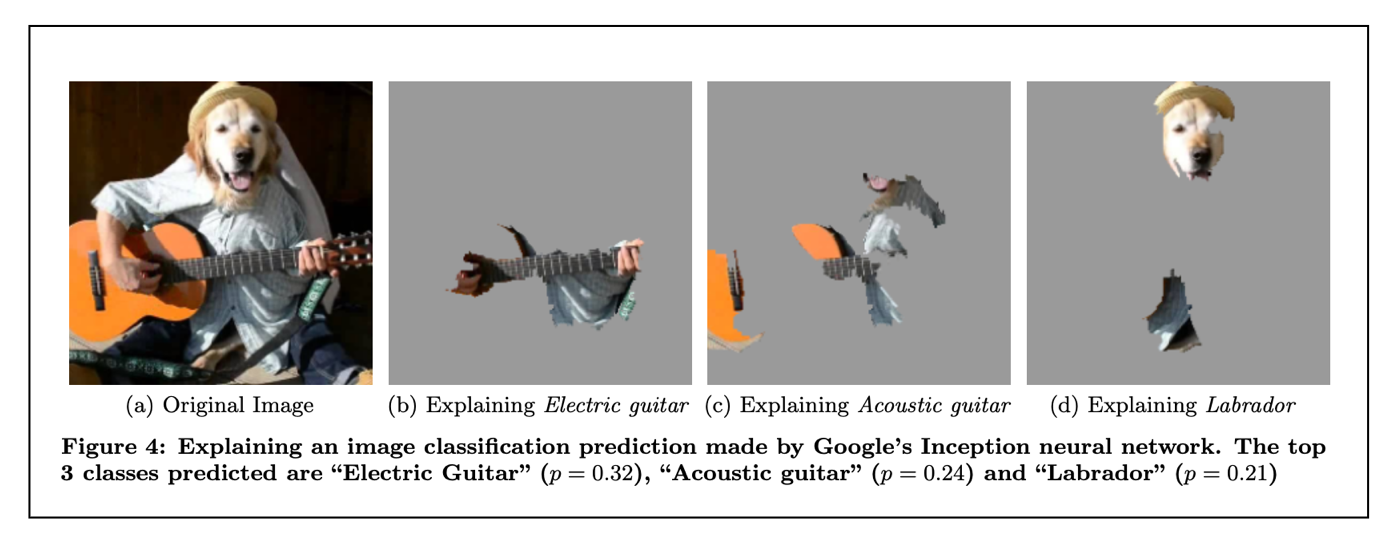

Local Surrogate (LIME)

Training local surrogate models to explain individual predictions

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why Should I Trust You?” Explaining the Predictions of Any Classifier. KDD. Figure 4.

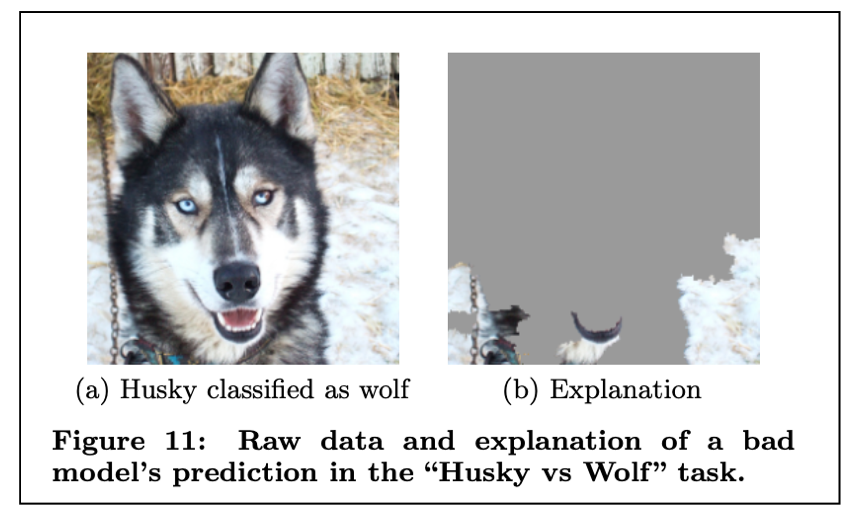

Local Surrogate (LIME)

Raw data and explanation of a bad model’s prediction in the “Husky vs Wolf” task

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why Should I Trust You?” Explaining the Predictions of Any Classifier. KDD. Figure 11.

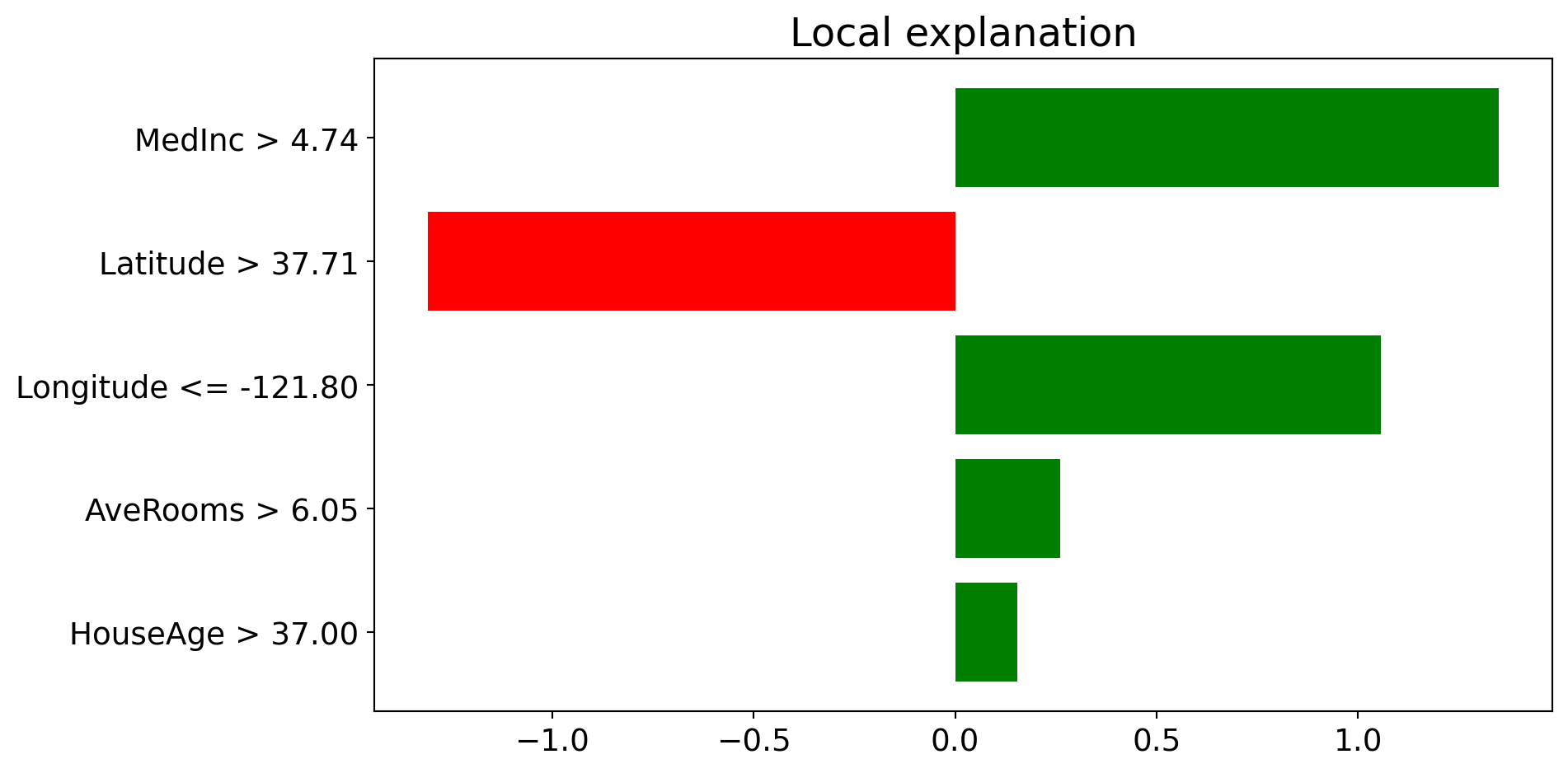

LIME: Code Example

Dataset: California Housing (same model as PDP example)

import lime

import lime.lime_tabular

import numpy as np

# Reload California Housing data for this example

housing = fetch_california_housing()

X_housing, y_housing = housing.data, housing.target

# Retrain model on California Housing

model = GradientBoostingRegressor(

n_estimators=100,

max_depth=4,

learning_rate=0.1,

random_state=0

).fit(X_housing, y_housing)

# Create LIME explainer using training data

explainer = lime.lime_tabular.LimeTabularExplainer(

training_data=X_housing,

feature_names=housing.feature_names,

mode='regression',

random_state=0

)

# Select an instance to explain (e.g., instance 0)

instance_idx = 0

instance = X_housing[instance_idx]

# Generate explanation for this instance

# predict_fn should return predictions

exp = explainer.explain_instance(

instance,

model.predict,

num_features=5 # Show top 5 features

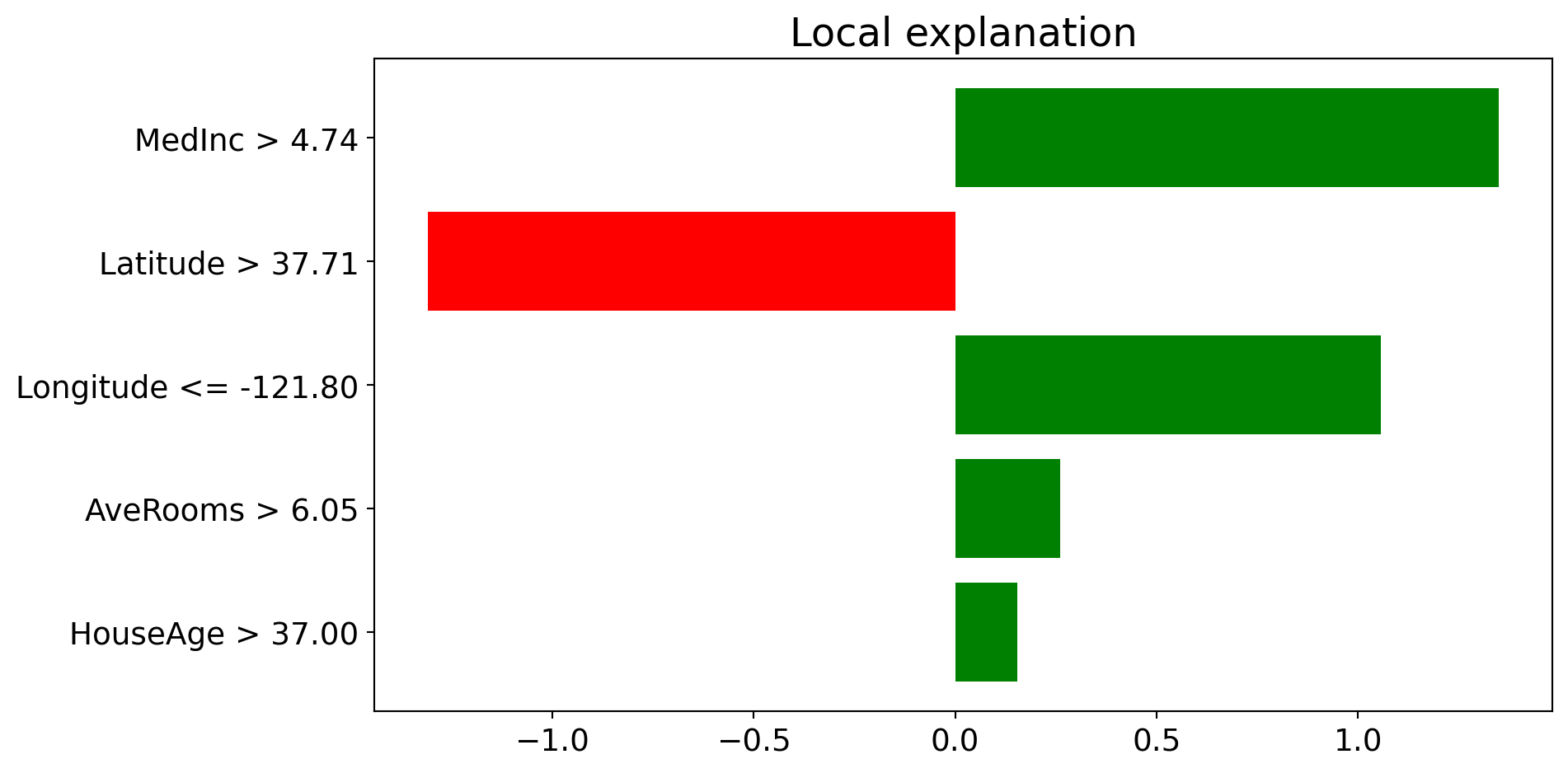

)LIME: Output Visualization

Explanation for Instance 0:

Predicted value: 4.29 (in units of $100k)

The bar chart shows which features push the prediction higher (positive) or lower (negative) for this specific house.

Local Interpretable Model-agnostic Explanations (LIME)

Pros

- Explanations are short (= selective) and possibly contrastive.

- We can control the sparsity of the explanation (e.g., how many features it shows).

- Very easy to use.

Cons

- Unstable results due to sampling.

- Hard to define a meaningful neighborhood (kernel width) in high-dimensional data.

- Many tunable parameters that data scientists could use to hide biases.

SHAP (SHapley Additive exPlanations)

SHAP (Lundberg and Lee 2017a) is a game-theory-inspired method created to explain predictions made by machine learning models. SHAP generates one value per input feature (also known as SHAP values) that indicates how the feature contributes to the prediction of the specified data point.

Lundberg, S. M., & Lee, S. I. (2017). A Unified Approach to Interpreting Model Predictions. NeurIPS. | Molnar, C. (2024). SHAP Book.

A Short History of Shapley Values and SHAP

Three key milestones:

- 1953: The introduction of Shapley values in game theory

- 2010: The initial steps toward applying Shapley values in machine learning

- 2017: The advent of SHAP, a turning point in machine learning

Lloyd Shapley’s Pursuit of Fairness (1953)

Lloyd Shapley - “The greatest game theorist of all time”

- PhD at Princeton (post-WWII): “Additive and Non-Additive Set Functions”

- 1953 paper: “A Value for n-Person Games”

- 2012 Nobel Prize in Economics (with Alvin Roth) for work in market design and matching theory

The Problem: How to fairly divide payouts among players who contribute differently to a cooperative game?

The Solution: Shapley values provide a mathematical method for fair distribution based on marginal contributions

Applications of Shapley values:

- Political science

- Economics

- Computer science

- Dividing profits among shareholders

- Allocating costs among collaborators

- Assigning credit in research projects

Key insight: Shapley values were well established in game theory long before machine learning matured as a field.

Shapley, L. S. (1953). A value for n-person games. Contributions to the Theory of Games, 2(28), 307-317.

Early Days in Machine Learning (2010)

2010: Erik Štrumbelj and Igor Kononenko propose using Shapley values for ML

- Paper: “An efficient explanation of individual classifications using game theory”

- 2014 follow-up: Further methodology development

Why didn’t it gain immediate traction?

❌ Barriers to adoption:

- Explainable AI/Interpretable ML not widely recognized yet

- No code released with the papers

- Estimation method too slow for images/text

- Limited awareness outside specialized communities

✅ What was needed:

- Growing demand for interpretability

- Open-source implementation

- Faster computation methods

- High-profile publication venue

- Integration with popular ML frameworks

Štrumbelj, E., & Kononenko, I. (2010). An efficient explanation of individual classifications using game theory. JMLR, 11, 1-18.

The SHAP Cambrian Explosion (2017)

2016: LIME paper catalyzes the field

Ribeiro et al. introduce Local Interpretable Model-Agnostic Explanations → growing interest in model interpretability

2017: Lundberg and Lee publish SHAP at NeurIPS

“A Unified Approach to Interpreting Model Predictions”

Key contributions beyond 2010 work:

- Kernel SHAP: New estimation method using weighted linear regression

- Unification framework: Connected SHAP to LIME, DeepLIFT, and Layer-Wise Relevance Propagation

- Open-source `shap` package: Wide range of features and plotting capabilities

- High-profile venue: Published at a major ML conference (NIPS/NeurIPS)

Why SHAP Gained Popularity

Critical success factors:

✓ Published at a prestigious venue (NeurIPS)

✓ Pioneering work in a rapidly growing field

✓ Open-source Python package enabled integration

✓ Ongoing development by the original authors

✓ Strong community contributions

✓ Comprehensive visualization tools

2020 breakthrough: TreeSHAP

- Efficient computation for tree-based models

- Enabled SHAP for state-of-the-art models

- Made global interpretations possible

Naming conventions:

- Shapley values: Original game theory method (1953)

- SHAP: Application to machine learning (2017)

- SHAP values: Resulting feature importance values

- `shap`: The Python library implementation

“SHAP” became a brand name like Post-it or Band-Aid - well-established in the community and distinguishes game theory from ML application.

Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. NeurIPS. | Lundberg, S. M., et al. (2020). From local explanations to global understanding with explainable AI for trees. Nature Machine Intelligence, 2(1), 56-67.

Theory of Shapley Values

Who’s going to pay for that taxi?

Alice, Bob, and Charlie have dinner together and share a taxi ride home. The total cost is $51.

The question is: How should they divide the costs fairly?

| Passengers | Cost | Note |
|---|---|---|
| ∅ | $0 | No taxi, no costs |
| {Alice} | $15 | Standard fare |
| {Bob} | $25 | Luxury taxi |
| {Charlie} | $38 | Lives further away |
| {Alice, Bob} | $25 | Bob gets his way |
| {Alice, Charlie} | $41 | Drop Alice first |
| {Bob, Charlie} | $51 | Drop Bob first |
| {Alice, Bob, Charlie} | $51 | Full fare |

Key observations:

- Alice alone: $15

- Bob alone: $25 (insists on luxury)

- Charlie alone: $38 (lives farther)

- All three: $51

Naive approach: $51 ÷ 3 = $17 each

Problem: Is this fair? Alice is subsidizing the others!

We need a principled way to divide costs based on marginal contributions.

Calculating Marginal Contributions

Marginal Contribution = Value with player − Value without player

Example: Charlie joining Bob’s taxi = $51 - $25 = $26

| Addition | To Coalition | Cost Before | Cost After | Marginal Contribution |
|---|---|---|---|---|
| Alice | ∅ | $0 | $15 | $15 |
| Alice | {Bob} | $25 | $25 | $0 |
| Alice | {Charlie} | $38 | $41 | $3 |
| Alice | {Bob, Charlie} | $51 | $51 | $0 |
| Bob | ∅ | $0 | $25 | $25 |
| Bob | {Alice} | $15 | $25 | $10 |
| Bob | {Charlie} | $38 | $51 | $13 |
| Bob | {Alice, Charlie} | $41 | $51 | $10 |
| Charlie | ∅ | $0 | $38 | $38 |
| Charlie | {Alice} | $15 | $41 | $26 |
| Charlie | {Bob} | $25 | $51 | $26 |
| Charlie | {Alice, Bob} | $25 | $51 | $26 |

Weighted Average via Permutations

How to weight these marginal contributions?

Consider all possible permutations (orderings) of passengers joining the taxi:

All 3! = 6 permutations:

- Alice, Bob, Charlie

- Alice, Charlie, Bob

- Bob, Alice, Charlie

- Bob, Charlie, Alice

- Charlie, Alice, Bob

- Charlie, Bob, Alice

Each permutation defines which players are “already in the taxi” when each player joins.

For Alice:

- 2 times added to ∅ (empty taxi)

- 1 time added to {Bob}

- 1 time added to {Charlie}

- 2 times added to {Bob, Charlie}

Weights determined by permutation frequency!

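Coalition size matters. With 3 players, the empty coalition and the coalition of both other players each get weight 2/6, while each single-player coalition gets 1/6.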

Averaging Marginal Contributions

Alice’s Shapley value:

\[\phi_{Alice} = \frac{1}{6}(2 \cdot \$15 + 1 \cdot \$0 + 1 \cdot \$3 + 2 \cdot \$0) = \$5.50\]

Bob’s Shapley value:

\[\phi_{Bob} = \frac{1}{6}(2 \cdot \$25 + 1 \cdot \$10 + 1 \cdot \$13 + 2 \cdot \$10) = \$15.50\]

Charlie’s Shapley value:

\[\phi_{Charlie} = \frac{1}{6}(2 \cdot \$38 + 1 \cdot \$26 + 1 \cdot \$26 + 2 \cdot \$26) = \$30.00\]

Verification: $5.50 + $15.50 + $30.00 = $51.00 ✓
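The permutation argument translates directly into code: enumerate all 3! join orders, record each passenger’s marginal contribution, and average. Below is a minimal Python sketch using the coalition costs from the taxi table; `shapley_by_permutations` is an illustrative name:

```python
from itertools import permutations

# Cost of each coalition from the taxi table; frozenset keys ignore order
cost = {
    frozenset(): 0,
    frozenset({"Alice"}): 15,
    frozenset({"Bob"}): 25,
    frozenset({"Charlie"}): 38,
    frozenset({"Alice", "Bob"}): 25,
    frozenset({"Alice", "Charlie"}): 41,
    frozenset({"Bob", "Charlie"}): 51,
    frozenset({"Alice", "Bob", "Charlie"}): 51,
}
players = ["Alice", "Bob", "Charlie"]

def shapley_by_permutations(players, v):
    """Average each player's marginal contribution over all join orders."""
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        in_taxi = frozenset()
        for p in order:
            totals[p] += v[in_taxi | {p}] - v[in_taxi]  # marginal contribution
            in_taxi = in_taxi | {p}
    return {p: totals[p] / len(orders) for p in players}

phi = shapley_by_permutations(players, cost)
print(phi)  # {'Alice': 5.5, 'Bob': 15.5, 'Charlie': 30.0}
```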

General Shapley Value Formula

Game Theory Notation:

| Term | Math | Taxi Example |
|---|---|---|
| Player | \(j \in N = \{1, \ldots, n\}\) | Alice, Bob, Charlie |
| Coalition | \(S \subseteq N\) | {Alice, Bob} |
| Value Function | \(v(S)\) | Cost of coalition |
| Shapley Value | \(\phi_j\) | Fair share for player \(j\) |

The Formula:

\[\phi_j = \sum_{S \subseteq N \setminus \{j\}} \frac{|S|!\,(n - |S| - 1)!}{n!} \left( v(S \cup \{j\}) - v(S) \right)\]

The Shapley value is the weighted average of a player’s marginal contributions to all possible coalitions.
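The subset form can be checked against the permutation result by evaluating the formula literally for \(n\) players, with weight \(|S|!\,(n-|S|-1)!/n!\). Below is a minimal sketch reusing the taxi costs; `shapley_by_subsets` is an illustrative name:

```python
from itertools import combinations
from math import factorial

cost = {
    frozenset(): 0,
    frozenset({"Alice"}): 15,
    frozenset({"Bob"}): 25,
    frozenset({"Charlie"}): 38,
    frozenset({"Alice", "Bob"}): 25,
    frozenset({"Alice", "Charlie"}): 41,
    frozenset({"Bob", "Charlie"}): 51,
    frozenset({"Alice", "Bob", "Charlie"}): 51,
}
players = ["Alice", "Bob", "Charlie"]

def shapley_by_subsets(players, v):
    """Weighted sum of marginal contributions over all coalitions without j."""
    n = len(players)
    phi = {}
    for j in players:
        others = [p for p in players if p != j]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for S in combinations(others, size):
                S = frozenset(S)
                total += weight * (v[S | {j}] - v[S])
        phi[j] = total
    return phi

phi = shapley_by_subsets(players, cost)
print({p: round(val, 2) for p, val in phi.items()})  # {'Alice': 5.5, 'Bob': 15.5, 'Charlie': 30.0}
```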

The Four Axioms Behind Shapley Values

1. Efficiency

The contributions sum to the total payout:

\[\sum_{j \in N} \phi_j = v(N)\]

In taxi: $5.50 + $15.50 + $30 = $51 ✓

2. Symmetry

If players have identical marginal contributions, they get equal payouts:

If \(v(S \cup \{j\}) = v(S \cup \{k\})\) for all \(S \subseteq N \setminus \{j, k\}\), then \(\phi_j = \phi_k\)

If Bob didn’t need luxury taxi, he’d pay same as Alice

3. Dummy (Null Player)

Players who contribute nothing get nothing:

If \(v(S \cup \{j\}) = v(S)\) for all \(S\), then \(\phi_j = 0\)

If Dora the dog rides free, her share = $0

4. Additivity

For two games with value functions \(v_1\) and \(v_2\):

\[\phi_{j,v_1+v_2} = \phi_{j,v_1} + \phi_{j,v_2}\]

Can split taxi + ice cream costs separately, then add

These four axioms uniquely determine the Shapley value formula (Shapley, 1953)

From Shapley Values to SHAP

A Machine Learning Example

You have trained a model \(f\) to predict apartment prices.

For a specific apartment \(x^{(i)}\):

- Area: 50 m² (538 sq ft)

- Floor: 2nd floor

- Park: Nearby

- Cats: Banned

Predictions:

- \(f(x^{(i)}) = \text{€}300,000\) (this apartment)

- \(\mathbb{E}[f(X)] = \text{€}310,000\) (average)

- Difference: -€10,000

Goal: Explain how each feature value contributed to the -€10,000 difference from average.

Viewing a Prediction as a Coalitional Game

Key insight: Each feature value is a “player” in a game where the “payout” is the prediction.

| Game Theory Concept | Machine Learning Translation | Notation |
|---|---|---|
| Player | Feature index | \(j\) |
| Coalition | Set of features | \(S \subseteq \{1, \ldots, p\}\) |
| Not in coalition | Features not in \(S\) | \(C = \{1, \ldots, p\} \setminus S\) |
| Coalition size | Number of features in \(S\) | \(\|S\|\) |
| Total players | Number of features | \(p\) |
| Total payout | Prediction - Average | \(f(x^{(i)}) - \mathbb{E}[f(X)]\) |
| Value function | Prediction for coalition | \(v_{f,x^{(i)}}(S)\) |
| Shapley value | SHAP value (contribution) | \(\phi_j^{(i)}\) |

We translate concepts from game theory to machine learning predictions → SHAP

The SHAP Value Function

How do we handle “absent” features in a coalition?

\[v_{f,x^{(i)}}(S) = \int f(x_S^{(i)} \cup X_C) d\mathbb{P}_{X_C} - \mathbb{E}[f(X)]\]

Key components:

- \(x_S^{(i)}\): Known feature values (in coalition \(S\))

- \(X_C\): Unknown features (random variables)

- \(\int \ldots d\mathbb{P}_{X_C}\): Marginalization - integrate over distribution

- \(-\mathbb{E}[f(X)]\): Ensures \(v(\emptyset) = 0\)

Marginalization: Treat absent features as random variables, weight predictions by their likelihood

Apartment example:

For coalition \(S = \{\text{park}, \text{floor}\}\):

\[v(\{park, floor\}) = \int f(x_{park}, X_{cat}, X_{area}, x_{floor}) \, d\mathbb{P}_{X_{cat}, X_{area}} - \mathbb{E}[f(X)]\]

- \(x_{park} = \text{nearby}\) (known)

- \(x_{floor} = 2\) (known)

- \(X_{cat}\), \(X_{area}\) (random - integrated over)

Present features input directly; absent features marginalized out

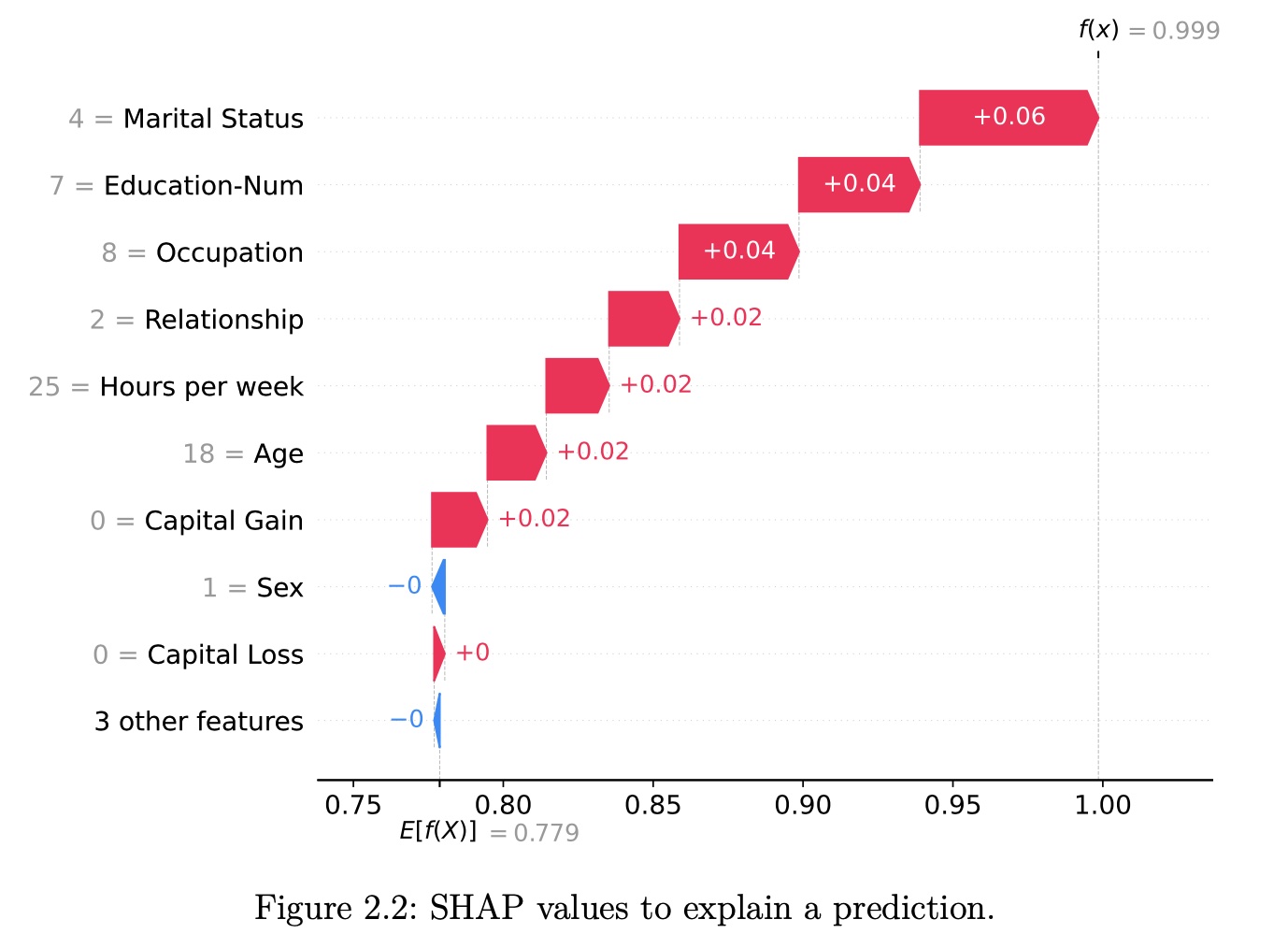

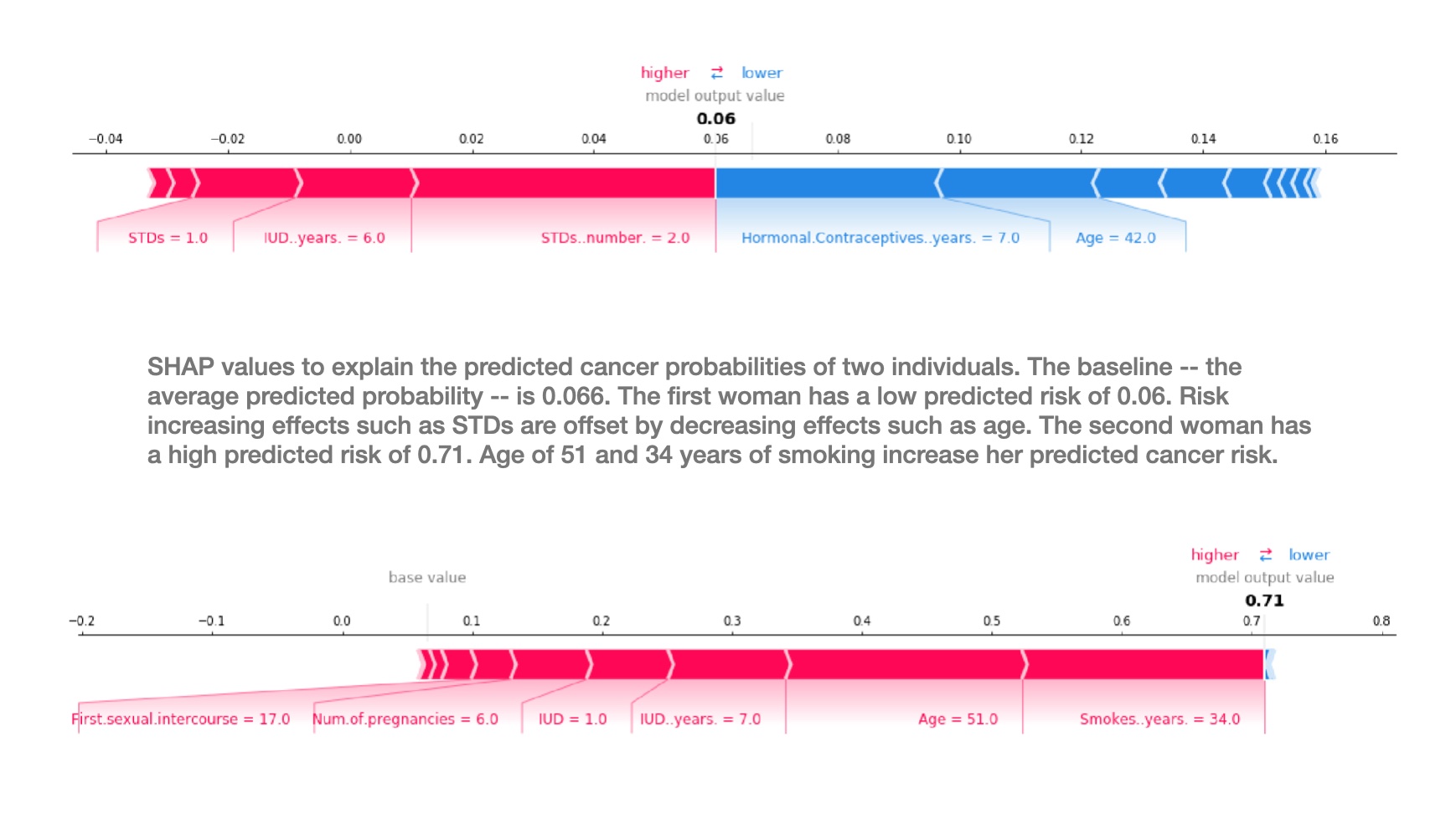

SHAP Visualization: Force Plot

Visualizing the Efficiency Axiom

Base value E[f(X)] = $300K

Output value f(x) = $430K

▬▬▬▬▬▬ +$80K Area=85m² (large area pushes price up)

▬▬▬▬ +$45K Location=Downtown

▬▬▬ +$30K Year=2020 (new)

▬▬ -$15K Cat-banned=True

▬ -$10K Floor=1 (ground floor)

Sum: $300K + $80K + $45K + $30K - $15K - $10K = $430K ✓

Putting It All Together: The SHAP Formula

Combining all terms into the SHAP equation:

\[\phi_j^{(i)} = \sum_{S \subseteq \{1,\ldots,p\} \setminus j} \frac{|S|! (p - |S| - 1)!}{p!} \cdot \left( \int f(x_{S \cup j}^{(i)} \cup X_{C \setminus j}) d\mathbb{P}_{X_{C \setminus j}} - \int f(x_S^{(i)} \cup X_C) d\mathbb{P}_{X_C} \right)\]

In words: The SHAP value \(\phi_j^{(i)}\) of feature \(j\) is the weighted average marginal contribution of feature value \(x_j^{(i)}\) across all possible coalitions of features.

This is the Shapley value formula with an ML-specific value function!
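For a handful of features, the SHAP formula can be evaluated exactly. Enumerate every coalition and estimate each integral by averaging the model over a background dataset, with absent features taken from the background rows (the marginal value function). The NumPy sketch below works under those assumptions. `value` and `exact_shap` are illustrative names, not the `shap` library’s API, and the cost grows as \(2^p\):

```python
import numpy as np
from itertools import combinations
from math import factorial

def value(f, x, S, background):
    """Marginal value function: fix features in S to x, average f over the
    background rows for the absent features, then subtract E[f(X)]."""
    Z = background.copy()
    idx = list(S)
    if idx:
        Z[:, idx] = x[idx]
    return f(Z).mean() - f(background).mean()

def exact_shap(f, x, background):
    """Exact SHAP values by enumerating all coalitions (2^p model evaluations)."""
    p = x.shape[0]
    phi = np.zeros(p)
    for j in range(p):
        others = [k for k in range(p) if k != j]
        for size in range(p):
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for S in combinations(others, size):
                phi[j] += weight * (value(f, x, S + (j,), background)
                                    - value(f, x, S, background))
    return phi

rng = np.random.default_rng(0)
background = rng.normal(size=(200, 3))
beta = np.array([2.0, -1.0, 0.5])

def f_lin(X):
    # Hypothetical linear model
    return X @ beta + 1.0

x = np.array([1.0, 2.0, -1.0])
phi = exact_shap(f_lin, x, background)
# For a linear model: phi_j = beta_j * (x_j - background mean of feature j)
print(np.allclose(phi, beta * (x - background.mean(axis=0))))  # True
```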

Interpreting SHAP Values Through Axioms

Since SHAP values are Shapley values, they satisfy the four axioms:

1. Efficiency

\[\sum_{j=1}^p \phi_j^{(i)} = f(x^{(i)}) - \mathbb{E}[f(X)]\]

Implications:

- SHAP values sum to deviation from average

- Attributions on same scale as output

- Unlike gradients, which don’t sum to prediction

- Enables force plot visualizations

2. Symmetry

Features with equal contributions get equal SHAP values

Implications:

- Feature order doesn’t matter

- Essential for feature importance ranking

- Unlike the Breakdown method, which depends on feature order

3. Dummy

Unused features get SHAP value of zero

Implications:

- If feature doesn’t affect prediction → \(\phi_j = 0\)

- Makes sense for sparse models

- E.g., Lasso with \(\beta_j = 0\) → \(\phi_j^{(i)} = 0\) for all \(i\)

4. Additivity

For ensemble models: \(\phi_j(v_1 + v_2) = \phi_j(v_1) + \phi_j(v_2)\)

Implications:

- For random forest: compute SHAP per tree, then average

- For additive ensembles: sum individual SHAP values

- Enables TreeSHAP efficiency

Štrumbelj & Kononenko (2010, 2014); Lundberg & Lee (2017)

Key Differences: LIME vs. SHAP

LIME

- Heuristic local approximation

- Sparse explanations (few features)

- Faster to compute

- Good for lay audiences

- No theoretical guarantees

Best when: You want simple, sparse explanations with only most important features highlighted

SHAP

- Rigorous game-theoretic foundation

- Always uses all features

- Computationally expensive

- Satisfies fairness axioms

- Unique solution

Best when: You need complete, theoretically grounded feature attributions with fairness guarantees

Important: SHAP values measure contribution to deviation from average, NOT the effect of removing features from training.

Source: Molnar, C. (2024). Interpretable Machine Learning.

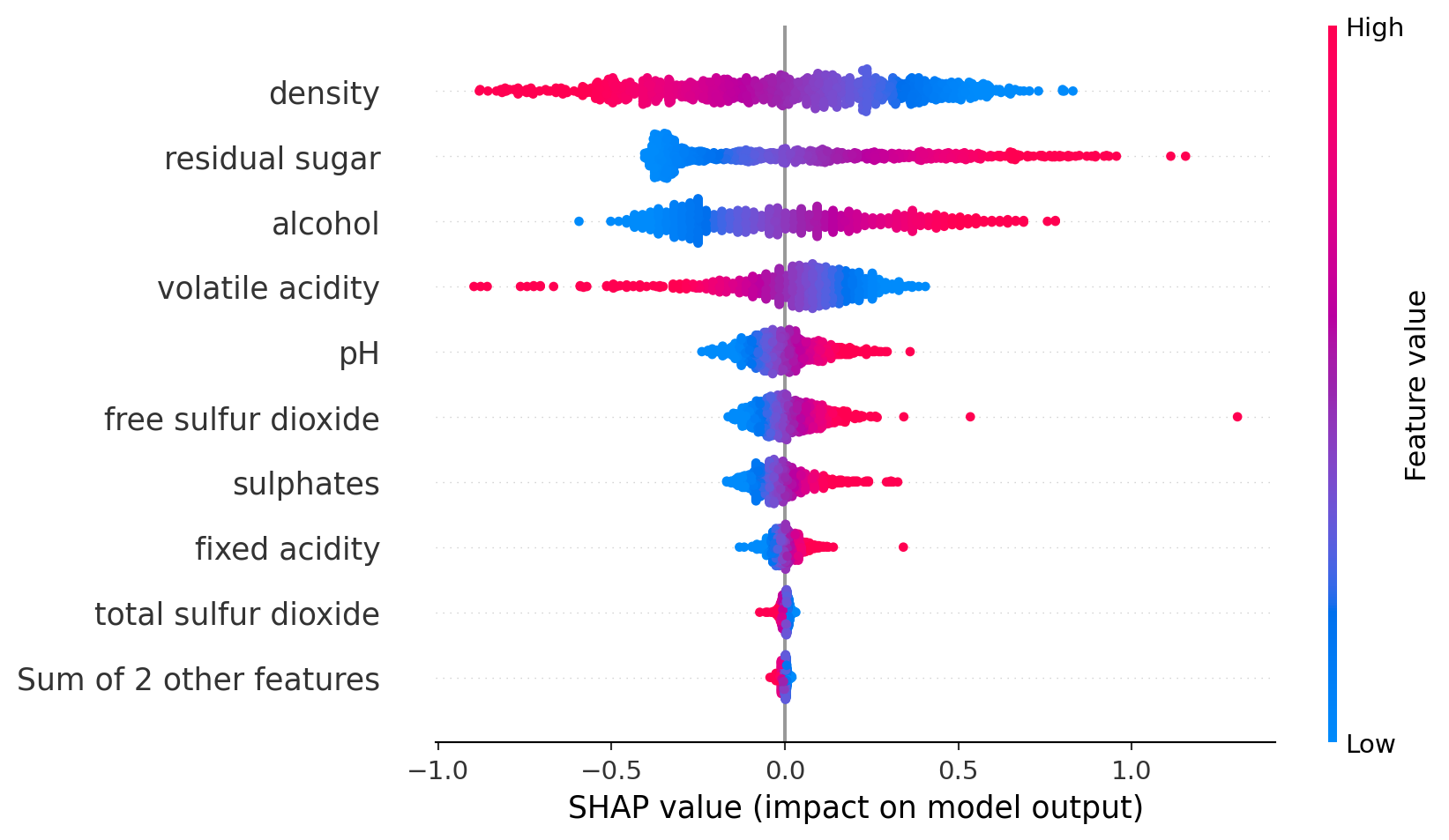

SHAP Visualization: Global Feature Analysis (Summary Plot)

From Local to Global: Understanding Feature Importance Across All Predictions

Feature Importance (Y-axis) ↓

Area (m²) ●●●●●●●●●●●●●●

Location ●●●●●●●●●●

Year Built ●●●●●●●●●

Cat-banned ●●●●●

Floor ●●●●●

← Negative Impact SHAP Value Positive Impact →

● Blue = Low feature value ● Red = High feature value

Dual Interpretation:

Feature Importance (features ranked on the Y-axis; spread of SHAP values along the X-axis)

- Wider spread = more important

- Area has largest impact

Direction of Effect (Color)

- Red (high) on right = positive impact

- Blue (low) on left = negative impact

Example Reading:

- High area (red) → strong positive SHAP

- Low area (blue) → negative SHAP

- Clear correlation!

SHAP (SHapley Additive exPlanations)

Pros

- Fairly distributes a prediction among the features

- Contrastive explanations (can compare an instance to a subset or even to a single data point)

- Solid theory

Cons

- A lot of computing time

- Not sparse (every feature receives a value)

SHAP (SHapley Additive exPlanations)

SHAP limitations

Key Limitations:

- Computational Cost: Exponential complexity O(2^n) for exact calculation

- Feature Independence Assumption: Unrealistic in correlated datasets

- Interpretation Challenges: Values represent marginal contributions, not causal effects

- Instability: Small perturbations can lead to different explanations

Kumar, I. E., et al. (2020). Problems with Shapley-value-based explanations as feature importance measures. ICML.
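A common way around the exponential cost is to sample random feature orderings instead of enumerating all coalitions, in the spirit of Štrumbelj & Kononenko (2014). The NumPy sketch below assumes a marginal value function estimated from a background sample. `sampled_shap` and `model` are hypothetical names. The estimate is noisy and converges as `n_permutations` grows:

```python
import numpy as np

def sampled_shap(f, x, background, n_permutations=200, seed=0):
    """Estimate SHAP values by averaging marginal contributions over random
    feature orderings (marginal value function via background averaging)."""
    rng = np.random.default_rng(seed)
    p = x.shape[0]
    phi = np.zeros(p)
    base = f(background).mean()          # E[f(X)] estimated on the background
    for _ in range(n_permutations):
        order = rng.permutation(p)
        Z = background.copy()            # start with every feature "absent"
        prev = base
        for j in order:
            Z[:, j] = x[j]               # add feature j to the coalition
            curr = f(Z).mean()
            phi[j] += curr - prev        # marginal contribution of feature j
            prev = curr
    return phi / n_permutations

def model(X):
    # Hypothetical black box with an interaction term
    return X[:, 0] * X[:, 1] + 2 * X[:, 2] - X[:, 3]

rng = np.random.default_rng(1)
background = rng.normal(size=(300, 4))
x = np.array([1.0, 1.5, -0.5, 2.0])
phi = sampled_shap(model, x, background)
print(phi, phi.sum(), model(x[None, :])[0] - model(background).mean())
```

Each sampled ordering telescopes from \(\mathbb{E}[f(X)]\) to \(f(x)\), so the estimate satisfies efficiency exactly. Only the split among interacting features (here `x0` and `x1`) carries sampling noise.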

SHAP Limitations: Not Always Human-Friendly

What Makes Explanations Human-Friendly? (Miller 2019)

Human-friendly explanations should be:

- Concise: Focus on key factors (2-3 features)

- Contrastive: Compare to specific alternatives

- Abnormal: Highlight unusual causes

But SHAP:

- ❌ Not concise: Always uses ALL features

- ❌ Not strongly contrastive: Averages over many coalitions, diluting contrast

- ❌ Doesn’t highlight abnormal: Treats all features equally

⚠️ Warning: Don’t present SHAP values to end-users as straightforward information. They require explanation and training to understand properly.

SHAP Doesn’t Enable User Actions

Example: Corn Yield Prediction

- Model predicts low yield for a field

- SHAP: “Fertilizer use had +0.3 positive impact”

- Farmer asks: “Should I add more fertilizer?”

- SHAP: 🤷 Cannot answer!

SHAP explains the current state vs. average, not how to change outcomes.

For Actionable Recommendations:

- Use counterfactual explanations: “If fertilizer increased by 20%, yield would increase by 5%”

- Design models with causal relationships

- Use representative training data

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1-38.

SHAP Limitations: Misinterpretation and Adversarial Risks

Common Misinterpretations

❌ Wrong Interpretation:

“SHAP value = difference in prediction if feature removed from training”

✓ Correct Interpretation:

“SHAP value = contribution to deviation from mean prediction, given current feature values”

SHAP Is NOT:

- ❌ A surrogate model (unlike LIME)

- Cannot predict effects of feature changes

- Example: “If salary increases €300, credit score increases 5 points” ← SHAP can’t do this!

- ❌ Training-data independent

- Requires background data for computation

- Privacy/access concerns in production

Adversarial Risk: You Can Fool SHAP (Slack et al. 2020)

Unscrupulous data scientists can create intentionally misleading SHAP explanations to conceal biases:

Attack Scenario:

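- Train a biased model (e.g., using race for loan decisions)

- Train a detector that recognizes the off-distribution samples LIME/SHAP generate when perturbing inputs

- Route those perturbed samples to an innocuous model that relies on decoy (e.g., uncorrelated) features

- Result: SHAP shows “fair” explanations for discriminatory predictions!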

Philosophical Issue: No Consensus

- What should “feature importance” mean?

- Are SHAP axioms the “right” definition?

- Process is backwards: method → interpretation

“We use a mathematically coherent method that generates an ‘explanation’ and then attempt to decipher its meaning.” - Molnar (2024)

Slack, D., et al. (2020). Fooling LIME and SHAP. AIES. | Molnar, C. (2024). Interpretable Machine Learning.

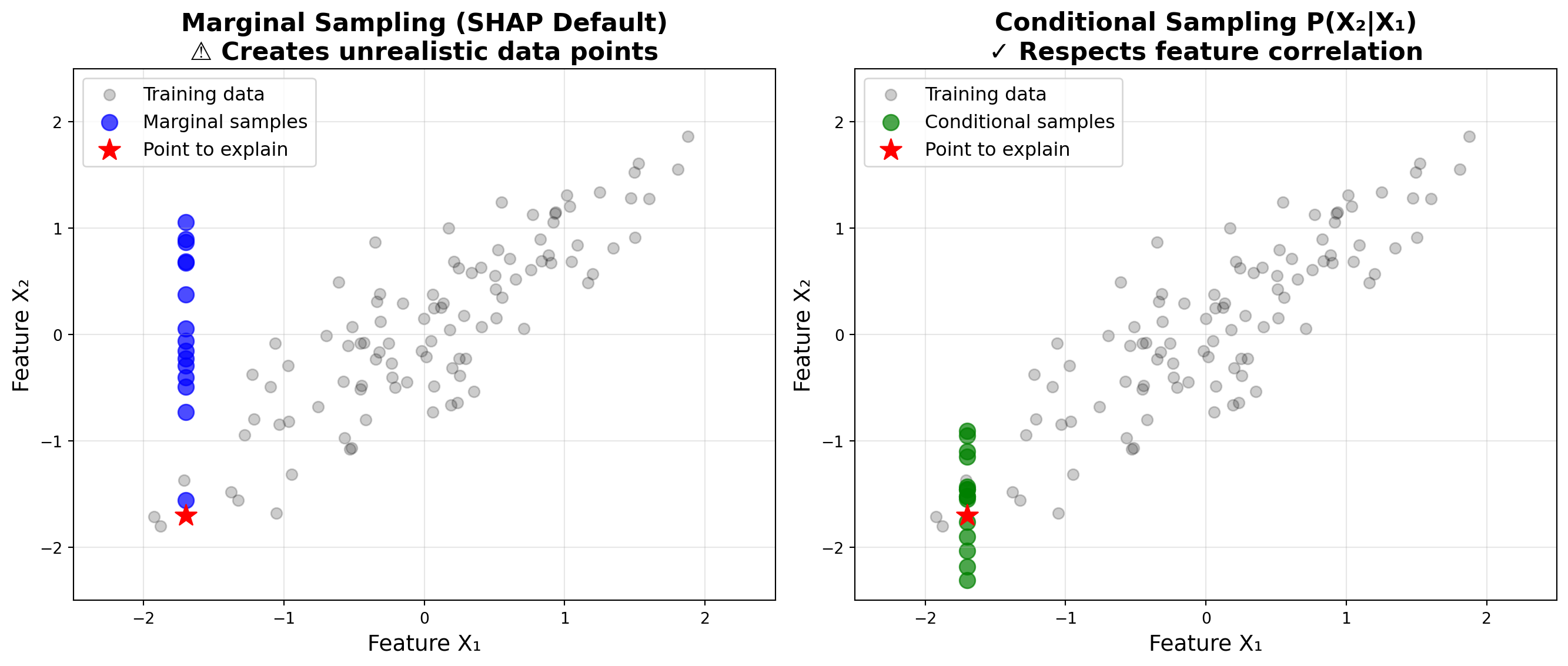

Deep Dive: The Correlation Problem

Why Correlated Features Break SHAP’s Independence Assumption

The Core Issue:

SHAP simulates the absence of features by replacing them with values sampled from background data (the marginal distribution)

Problems with Correlated Features:

Unrealistic data points: Combining features independently creates impossible combinations

- Example: 2-meter tall person weighing 10 kg

- Example: Rain without clouds

Extrapolation: Model evaluated on data it never saw during training

Misleading explanations: SHAP values based on unrealistic scenarios

Philosophical Question:

If two features share information (e.g., rainfall ↔︎ cloudiness), can we truly isolate the effect of one while ignoring the other?

The Trade-off:

- Marginal sampling: True to the model

- Conditional sampling: True to the data

Your choice depends on interpretation goals!

Molnar, C. (2024). Interpretable Machine Learning. Chapter 11: The Correlation Problem.

Visualizing the Correlation Problem

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(42)

# Create two highly correlated features (ρ = 0.9)

p = 0.9

mean = [0, 0]

cov = [[1, p], [p, 1]]

n = 100

x1, x2 = np.random.multivariate_normal(mean, cov, n).T

# Point to explain

point = (-1.7, -1.7)

m = 15 # number of samples

# Marginal sampling: sample x2 independently (ignores correlation)

x2_marg = np.random.choice(x2, size=m)

# Conditional sampling: sample x2 given x1 (respects correlation)

x2_cond = np.random.normal(loc=p*point[0], scale=np.sqrt(1-p**2), size=m)

# Create side-by-side plots

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14, 6))

# Left: Marginal sampling (SHAP default)

ax1.scatter(x1, x2, color='black', alpha=0.2, s=50, label='Training data')

ax1.scatter(np.repeat(point[0], m), x2_marg, color='blue', s=100, alpha=0.7, label='Marginal samples')

ax1.scatter(point[0], point[1], color='red', s=200, marker='*', label='Point to explain', zorder=10)

ax1.set_xlabel('Feature X₁', fontsize=14)

ax1.set_ylabel('Feature X₂', fontsize=14)

ax1.set_title('Marginal Sampling (SHAP Default)\n⚠️ Creates unrealistic data points', fontsize=16, fontweight='bold')

ax1.legend(fontsize=12)

ax1.grid(alpha=0.3)

ax1.set_xlim(-2.5, 2.5)

ax1.set_ylim(-2.5, 2.5)

# Right: Conditional sampling

ax2.scatter(x1, x2, color='black', alpha=0.2, s=50, label='Training data')

ax2.scatter(np.repeat(point[0], m), x2_cond, color='green', s=100, alpha=0.7, label='Conditional samples')

ax2.scatter(point[0], point[1], color='red', s=200, marker='*', label='Point to explain', zorder=10)

ax2.set_xlabel('Feature X₁', fontsize=14)

ax2.set_ylabel('Feature X₂', fontsize=14)

ax2.set_title('Conditional Sampling P(X₂|X₁)\n✓ Respects feature correlation', fontsize=16, fontweight='bold')

ax2.legend(fontsize=12)

ax2.grid(alpha=0.3)

ax2.set_xlim(-2.5, 2.5)

ax2.set_ylim(-2.5, 2.5)

plt.tight_layout()

plt.show()
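The picture can be backed up numerically. The sketch assumes the same bivariate normal (ρ = 0.9) and fixed value x1 = −1.7 as the plot. For illustration, it draws marginal samples from the population N(0, 1) rather than resampling the observed x2. For each sample it computes the squared Mahalanobis distance, then counts how many land outside the contour that holds 99% of the data distribution. That contour is the χ² quantile with 2 degrees of freedom, about 9.21.

```python
import numpy as np

rng = np.random.default_rng(42)
rho = 0.9
cov = np.array([[1.0, rho], [rho, 1.0]])
cov_inv = np.linalg.inv(cov)
x1 = -1.7   # fixed value of the first feature, as in the plot
m = 10_000  # many samples so the fractions are stable

# Marginal: x2 drawn from its own N(0, 1) distribution, ignoring x1
x2_marg = rng.normal(0.0, 1.0, size=m)
# Conditional: x2 drawn from N(rho * x1, 1 - rho^2)
x2_cond = rng.normal(rho * x1, np.sqrt(1 - rho**2), size=m)


def mahalanobis_sq(x2):
    """Squared Mahalanobis distance of the points (x1, x2) under cov."""
    pts = np.column_stack([np.full_like(x2, x1), x2])
    return np.einsum("ij,jk,ik->i", pts, cov_inv, pts)


threshold = 9.21  # about the 99th percentile of a chi-square with 2 dof
frac_marg = np.mean(mahalanobis_sq(x2_marg) > threshold)
frac_cond = np.mean(mahalanobis_sq(x2_cond) > threshold)
print(f"outside the 99% contour: marginal {frac_marg:.1%}, conditional {frac_cond:.1%}")
```

Most marginal samples fall outside that contour, while conditional samples rarely do. This is the extrapolation problem described earlier.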

Solutions to the Correlation Problem

Solution 1: Reduce Correlation in Model

- Feature selection: Remove redundant correlated features

- Feature engineering: Transform features to decorrelate

- Example: “rent” + “rooms” → “rent per m²”

- Combine features: e.g., use daily rainfall instead of separate morning and afternoon rainfall

- Dimensionality reduction: PCA (but loses interpretability)

✓ Benefit: Can improve both model performance AND interpretability
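One simple decorrelating transform assumes two standardized features with equal variance. Replace x1 and x2 by their sum, an overall level like daily rainfall, and their difference, a contrast like morning minus afternoon. For two standardized features these are exactly the principal-component directions, and the new features are uncorrelated. The data below are simulated, not from the lecture's datasets.

```python
import numpy as np

rng = np.random.default_rng(0)
rho = 0.9
cov = np.array([[1.0, rho], [rho, 1.0]])
X = rng.multivariate_normal([0.0, 0.0], cov, size=5000)  # two strongly correlated features

total = X[:, 0] + X[:, 1]     # shared "level" (analogous to daily rainfall)
contrast = X[:, 0] - X[:, 1]  # what differs between the two features

r_orig = np.corrcoef(X[:, 0], X[:, 1])[0, 1]
r_new = np.corrcoef(total, contrast)[0, 1]
print(f"corr(x1, x2) = {r_orig:.3f}, corr(total, contrast) = {r_new:.3f}")

# The same directions fall out of PCA on the correlation matrix
eigvals, eigvecs = np.linalg.eigh(np.corrcoef(X, rowvar=False))
print("principal directions (columns):\n", eigvecs.round(3))
```

The catch, as with PCA in general, is that a derived feature is only interpretable if it has a meaning in the domain. A sum and difference of rainfall amounts do; arbitrary rotations of many features usually do not.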

Solution 2: Use Conditional Sampling

Available in SHAP library:

⚠️ Warning: This changes the value function, so the results are no longer the standard (interventional) SHAP values! Use it when the goal is to understand the data rather than to audit the model.

Aas, K., Jullum, M., & Løland, A. (2021). Explaining individual predictions when features are dependent: More accurate approximations to Shapley values. Artificial Intelligence, 298, 103502.

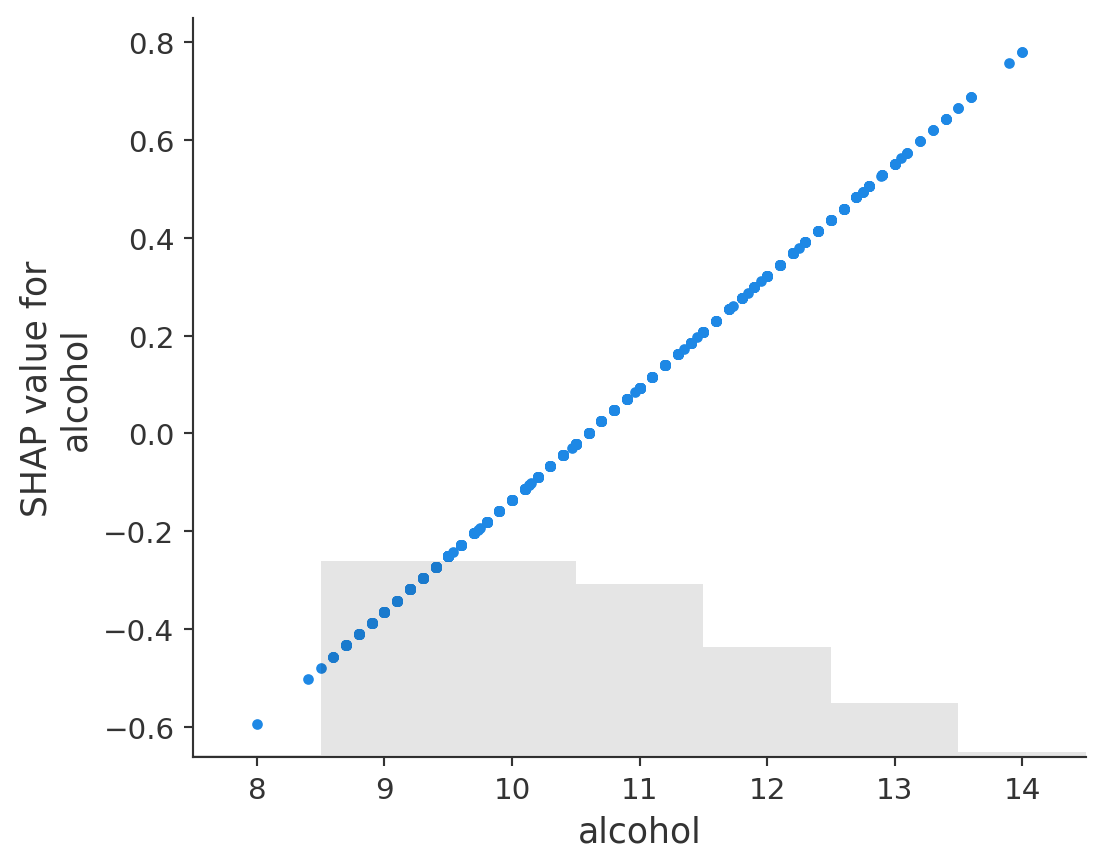

SHAP in Practice: Wine Quality Prediction

A Complete Example with Linear Models

Dataset: UCI Wine Quality

- White wine physicochemical properties

- Target: Quality score (0-10)

- 11 features: alcohol, acidity, sugar, etc.

- 4,898 samples

Why Linear Models?

- Inherently interpretable (coefficients)

- SHAP values simplify elegantly

- Perfect for teaching SHAP concepts
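Take a linear model f(x) = β0 + Σ βj xj, with features treated as independent (the marginal setting above). The SHAP value of feature j is then φj = βj (xj − E[xj]), where the expectation is taken over the background data. Adding all φj to the base value E[f(X)] recovers the prediction exactly. A minimal NumPy sketch with made-up coefficients and random background data (both hypothetical, not the wine model):

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear model f(x) = b0 + x @ beta (not the wine model)
beta = np.array([0.5, -2.0, 1.5])
b0 = 3.0
background = rng.normal(size=(500, 3))  # stands in for the training data
x = np.array([1.0, 0.2, -0.7])          # instance to explain


def predict(X):
    return b0 + X @ beta


mu = background.mean(axis=0)
phi = beta * (x - mu)                    # SHAP value of each feature
base_value = predict(background).mean()  # E[f(X)] over the background

print("SHAP values:", phi.round(3))
print("base + sum(phi):", round(base_value + phi.sum(), 6))
print("f(x):           ", round(predict(x[None, :])[0], 6))
```

The identity holds for any background: the base value is b0 + μ·β, and the μ·β terms cancel inside Σφ.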

Learning Objectives:

- Load and explore wine dataset

- Train linear regression model

- Compute SHAP values efficiently

- Create three key visualizations:

  - Waterfall plot (local)

  - Summary plot (global)

  - Dependence plot (relationships)

- Verify that SHAP value = coefficient × (feature value - mean)

Cortez, P., et al. (2009). Modeling wine preferences by data mining from physicochemical properties. Decision Support Systems, 47(4), 547-553.

Loading the Wine Quality Dataset

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

import shap

# Load wine quality dataset

url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-white.csv'

wine = pd.read_csv(url, sep=";")

print(f"Dataset shape: {wine.shape}")

print(f"\nFeatures: {list(wine.columns[:-1])}")

print(f"\nQuality distribution:\n{wine['quality'].value_counts().sort_index()}")

print(f"\nFirst few rows:\n{wine.head(3)}")

Dataset shape: (4898, 12)

Features: ['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar', 'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density', 'pH', 'sulphates', 'alcohol']

Quality distribution:

quality

3 20

4 163

5 1457

6 2198

7 880

8 175

9 5

Name: count, dtype: int64

First few rows:

fixed acidity volatile acidity citric acid residual sugar chlorides \

0 7.0 0.27 0.36 20.7 0.045

1 6.3 0.30 0.34 1.6 0.049

2 8.1 0.28 0.40 6.9 0.050

free sulfur dioxide total sulfur dioxide density pH sulphates \

0 45.0 170.0 1.0010 3.00 0.45

1 14.0 132.0 0.9940 3.30 0.49

2 30.0 97.0 0.9951 3.26 0.44

alcohol quality

0 8.8 6

1 9.5 6

2 10.1 6

Training a Linear Regression Model

# Prepare features and target

X = wine.drop('quality', axis=1)

y = wine['quality']

# Split data

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

# Train linear regression model

model = LinearRegression()

model.fit(X_train, y_train)

# Evaluate model

train_score = model.score(X_train, y_train)

test_score = model.score(X_test, y_test)

print(f"Train R² score: {train_score:.3f}")

print(f"Test R² score: {test_score:.3f}")

print(f"\nModel coefficients:")

for feature, coef in zip(X.columns, model.coef_):

    print(f" {feature:25s}: {coef:+.4f}")

print(f" Intercept: {model.intercept_:.4f}")

Train R² score: 0.284

Test R² score: 0.265

Model coefficients:

fixed acidity : +0.0459

volatile acidity : -1.9149

citric acid : -0.0613

residual sugar : +0.0712

chlorides : -0.0265

free sulfur dioxide : +0.0051

total sulfur dioxide : -0.0002

density : -124.2641

pH : +0.6007

sulphates : +0.6491

alcohol : +0.2290

Intercept: 124.3939

Computing SHAP Values with LinearExplainer

# Create SHAP explainer for linear models

explainer = shap.LinearExplainer(model, X_train)

# Compute SHAP values for test set

shap_values = explainer(X_test)

print(f"SHAP values shape: {shap_values.values.shape}")

print(f"Base value (average prediction): {shap_values.base_values[0]:.3f}")

print(f"\nSHAP values for first test instance:")

for feature, value in zip(X.columns, shap_values.values[0]):

    print(f" {feature:25s}: {value:+.4f}")

print(f"\nPrediction for first instance: {model.predict(X_test.iloc[[0]])[0]:.3f}")

print(f"Sum: base_value + sum(shap_values) = {shap_values.base_values[0] + shap_values.values[0].sum():.3f}")

SHAP values shape: (980, 11)

Base value (average prediction): 5.890

SHAP values for first test instance:

fixed acidity : -0.0348

volatile acidity : +0.0031

citric acid : -0.0055

residual sugar : +0.3151

chlorides : -0.0001

free sulfur dioxide : +0.1074

total sulfur dioxide : -0.0023

density : +0.0256

pH : -0.0654

sulphates : +0.0529

alcohol : +0.0855

Prediction for first instance: 6.372

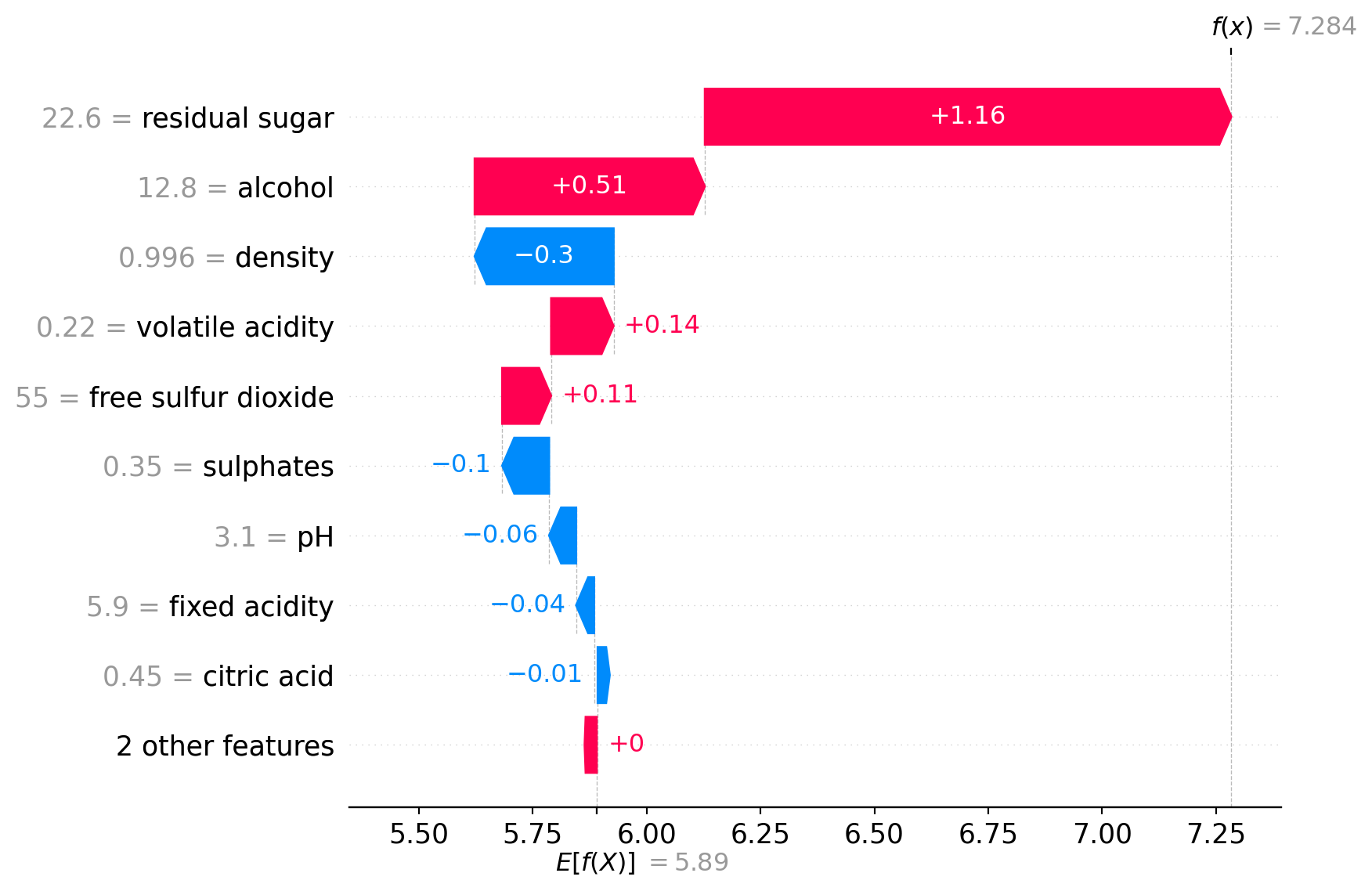

Sum: base_value + sum(shap_values) = 6.372SHAP Waterfall Plot: Local Explanation

# Select an interesting wine (high quality prediction)

idx = np.argmax(model.predict(X_test))

print(f"Selected wine index: {idx}")

print(f"Predicted quality: {model.predict(X_test.iloc[[idx]])[0]:.2f}")

print(f"Actual quality: {y_test.iloc[idx]}")

# Create waterfall plot

shap.plots.waterfall(shap_values[idx])

Selected wine index: 14

Predicted quality: 7.28

Actual quality: 5
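A waterfall plot starts from the base value E[f(X)] and stacks one SHAP value per feature, sorted by magnitude, until it reaches the prediction f(x). `shap.plots.waterfall` also folds the smallest contributions into a single bar for the remaining features, controlled by its `max_display` argument. The arithmetic behind the bars can be sketched in NumPy. The feature names and SHAP values below are hypothetical, not taken from the wine model. The sketch accumulates in descending |φ| order, and because addition is order-independent the final total equals base value + Σφ either way.

```python
import numpy as np

# Hypothetical SHAP values for one instance (not from the wine model)
names = np.array(["alcohol", "volatile acidity", "residual sugar", "pH"])
phi = np.array([0.62, -0.15, 0.48, -0.05])
base_value = 5.9

order = np.argsort(-np.abs(phi))  # largest absolute contribution first
running = base_value
steps = []
for j in order:
    start = running
    running = running + phi[j]
    steps.append((str(names[j]), start, running))  # one bar: from start to end
    print(f"{names[j]:>18s}: {start:.2f} -> {running:.2f} ({phi[j]:+.2f})")

prediction = running  # top of the waterfall
print(f"f(x) = {prediction:.2f}")
```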

SHAP Summary Plot: Global Feature Importance
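The bar version of the summary plot (`shap.plots.bar`) ranks features by mean absolute SHAP value over a dataset, meanᵢ |φᵢⱼ|. The beeswarm version (`shap.plots.beeswarm`) shows every individual φᵢⱼ as a dot colored by the feature value. The ranking can be sketched in NumPy on a hypothetical linear model and simulated data. The example also shows why a large coefficient does not imply high importance. In the wine model, `density` has a coefficient of -124.26 yet small SHAP values, because its values barely vary around 0.99.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical model and data: f2 has a tiny coefficient but a very wide spread
names = ["f0", "f1", "f2"]
beta = np.array([1.0, -3.0, 0.1])
X = rng.normal(loc=0.0, scale=[1.0, 0.1, 50.0], size=(1000, 3))

# Linear SHAP values for every row: phi_ij = beta_j * (x_ij - mean_j)
phi = (X - X.mean(axis=0)) * beta

# Global importance: mean absolute SHAP value per feature
importance = np.abs(phi).mean(axis=0)
ranking = [names[j] for j in np.argsort(-importance)]
print(dict(zip(names, importance.round(3))))
print("ranking:", ranking)
```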

SHAP Dependence Plot: Feature Relationships
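A dependence plot (`shap.plots.scatter` in the current API, `shap.dependence_plot` in the legacy one) puts a feature's value on the x-axis and its SHAP value on the y-axis. For a linear model explained with independent features, every point lies on a straight line. That line has slope βj and crosses zero at the background mean. For nonlinear models, vertical spread around a single curve is what hints at interactions. A NumPy sketch with a hypothetical coefficient and simulated feature values:

```python
import numpy as np

rng = np.random.default_rng(2)

beta_j = 0.23                        # hypothetical coefficient for one feature
x_j = rng.uniform(8.0, 14.0, 300)    # hypothetical feature values
phi_j = beta_j * (x_j - x_j.mean())  # linear SHAP values for that feature

# Fit a line through (feature value, SHAP value): the slope recovers beta_j
slope, intercept = np.polyfit(x_j, phi_j, deg=1)
zero_crossing = -intercept / slope
print(f"slope = {slope:.4f}, crosses zero at x = {zero_crossing:.3f} (mean = {x_j.mean():.3f})")
```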

Verifying SHAP Values for Linear Models

# For linear models: SHAP = coefficient × (x - mean(x))

# Let's verify this relationship

print("Verification: SHAP values = coefficient × (feature - mean)")

print("\nFor the first test instance:")

print(f"{'Feature':<25} {'Coefficient':>12} {'Value':>10} {'Mean':>10} {'(Val-Mean)':>12} {'SHAP Value':>12} {'Match?':>8}")

print("-" * 105)

for i, feature in enumerate(X.columns):

    coef = model.coef_[i]

    value = X_test.iloc[0][feature]

    mean = X_train[feature].mean()  # mean over the full training set

    expected_shap = coef * (value - mean)

    actual_shap = shap_values.values[0][i]

    match = "✓" if np.isclose(expected_shap, actual_shap, rtol=1e-3) else "✗"

    print(f"{feature:<25} {coef:>12.4f} {value:>10.2f} {mean:>10.2f} {value-mean:>12.2f} {actual_shap:>12.4f} {match:>8}")

Verification: SHAP values = coefficient × (feature - mean)

For the first test instance:

Feature Coefficient Value Mean (Val-Mean) SHAP Value Match?

---------------------------------------------------------------------------------------------------------

fixed acidity 0.0459 6.00 6.87 -0.87 -0.0348 ✗

volatile acidity -1.9149 0.29 0.28 0.01 0.0031 ✗

citric acid -0.0613 0.41 0.33 0.08 -0.0055 ✗

residual sugar 0.0712 10.80 6.45 4.35 0.3151 ✗

chlorides -0.0265 0.05 0.05 0.00 -0.0001 ✗

free sulfur dioxide 0.0051 55.00 35.09 19.91 0.1074 ✗

total sulfur dioxide -0.0002 149.00 138.00 11.00 -0.0023 ✗

density -124.2641 0.99 0.99 -0.00 0.0256 ✗

pH 0.6007 3.09 3.19 -0.10 -0.0654 ✗

sulphates 0.6491 0.59 0.49 0.10 0.0529 ✗

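alcohol 0.2290 10.97 10.51 0.46 0.0855 ✗

Every row shows ✗ even though the formula is right. The mismatch comes from the reference mean. When a DataFrame is passed as background, shap wraps it in an independent masker that keeps at most 100 rows by default (shap.maskers.Independent(data, max_samples=100)). LinearExplainer therefore centers on the mean of that subsample rather than on X_train.mean(). Passing the full background, e.g. shap.LinearExplainer(model, shap.maskers.Independent(X_train, max_samples=len(X_train))), should make the columns agree.

Comparative Trade-offs: Choosing the Right XAI Tool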

| Criterion | PDP (Partial Dependence Plot) | LIME (Local Interpretable Model-agnostic Explanations) | SHAP (SHapley Additive exPlanations) |
|---|---|---|---|
| Scope | Global (feature-level) | Local (instance-level) | Both (local + global) |
| Primary Visualization | Line plot (2D curve) | Bar chart (sparse) | Force plot, Summary plot |
| Interpretability | ★★★★★ Intuitive | ★★★★☆ Easy to understand | ★★★☆☆ Requires training |
| Computational Cost | ★★★★☆ Moderate | ★★★☆☆ Fast sampling | ★★☆☆☆ Expensive |
| Theoretical Foundation | Statistical (marginal effects) | Heuristic (local approximation) | Game theory (Shapley values) |
| Feature Coverage | One at a time | Sparse (selected) | All features (dense) |
| Best For | Understanding global trends | Quick local explanations | Rigorous feature attribution |
| Key Limitation | Assumes independence | Unstable (sampling) | Computational cost |